Look, I’m going to level with you. Your AI model is probably underperforming right now. And before you blame the architecture, the hyperparameters, or your data science team, let me tell you the real culprit: your training data is garbage.

I know that’s harsh. But here’s the thing: by commonly cited estimates, around 80% of AI project time gets burned on data preparation, and most teams are still treating data labeling like it’s some janitorial task they can outsource to the cheapest bidder. Then they act shocked when their $2 million AI initiative crashes and burns after the proof-of-concept phase.

The uncomfortable truth? Poor data quality costs the U.S. economy $3.1 trillion annually according to IBM. That’s not a typo. Trillion. With a T.

But here’s the good news: fixing your data labeling process is probably the highest-leverage thing you can do right now to improve your AI outcomes. And unlike throwing more GPUs at the problem, it doesn’t require a blank check from your CFO.

In this guide, I’m going to show you exactly how to build a data labeling operation that actually works—whether you’re a startup founder trying to validate your first ML model or a VP of Engineering managing enterprise-scale AI deployments.

We’ll cover:

- Why most AI projects fail (hint: it’s the data, not the code)

- The real economics of data labeling (spoiler: cheap isn’t cheap)

- Practical frameworks for building quality datasets at scale

- When to use humans vs. machines vs. synthetic data

- How to choose the right tools without getting fleeced by vendors

No fluff. No “in the world of AI” throat-clearing. Just the battle-tested strategies we use at Iterators to help our clients build production-grade AI systems that don’t fall apart the moment they hit real users.

Ready? Let’s fix your data.

Want expert help now? Work with us at Iterators—schedule a free consultation.

The AI Paradigm Shift Nobody Told You About

For the past decade, the AI industry has been obsessed with models. Deeper networks. Novel architectures. Clever algorithms. The assumption was simple: if we just build a better mousetrap, the data will sort itself out.

That assumption is now officially dead.

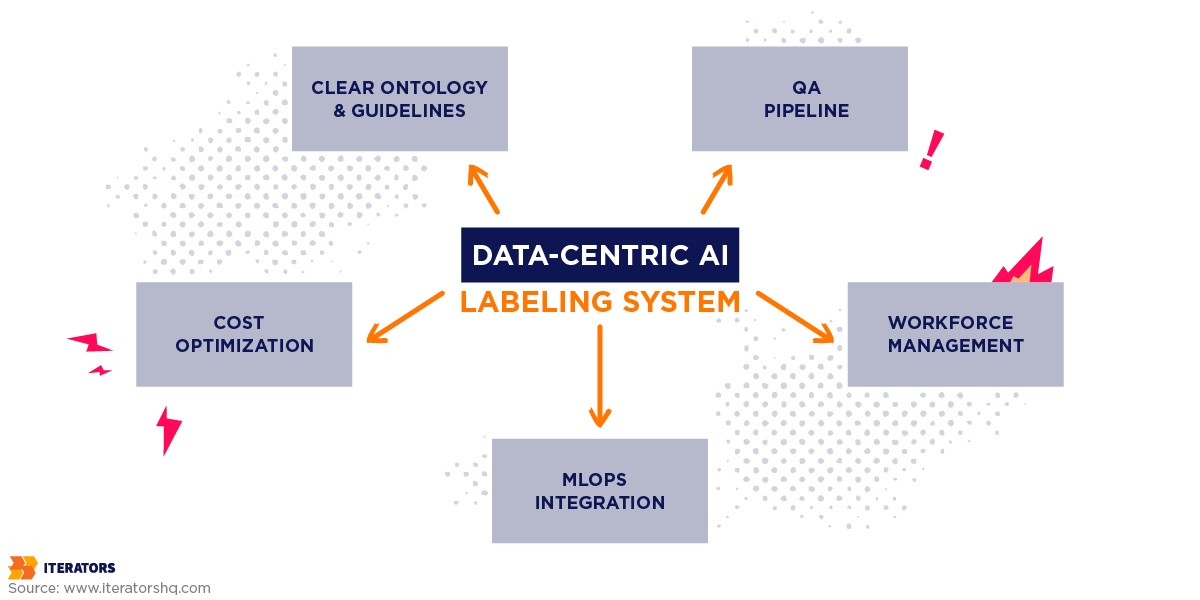

Welcome to the era of Data-Centric AI, a term Andrew Ng has been championing because he’s watched too many teams waste millions chasing marginal gains from model tweaks while sitting on fundamentally broken datasets.

Here’s what changed: models became commodities. You can pull down a pre-trained transformer from Hugging Face in thirty seconds. GPT-4 is available via API. The marginal cost of model sophistication has collapsed to near-zero.

But high-quality, domain-specific, properly labeled training data? That’s still hard. That’s still expensive. That’s still your competitive moat.

The $3.1 Trillion Problem

When I say “poor data quality,” I’m not talking about missing a few labels or having some noisy annotations. I’m talking about systematic failures that ripple through your entire organization:

Operational Inefficiency: Your data scientists—the ones making $200K+ salaries—spend 80% of their time cleaning data instead of building models. You’re essentially paying Formula 1 engineers to pave the track.

Model Hallucinations: In generative AI, garbage training data produces “confident nonsense.” Your LLM will hallucinate with perfect grammar and absolute certainty because it learned from contradictory, biased, or just plain wrong information.

Regulatory Risk: Mislabeled data in healthcare or finance doesn’t just hurt performance—it triggers GDPR fines, HIPAA violations, and lawsuits. In regulated industries, bad labels are legal liability.

Project Abandonment: Gartner predicts that through 2026, organizations will abandon 60% of AI projects that are not supported by AI-ready data. Not bad algorithms. Not insufficient compute. Bad data.

The companies that figure out data labeling will ship AI products that work. The ones that don’t will keep burning cash on proof-of-concepts that never make it to production.

What Actually Is AI Data Labeling (And Why Should You Care?)

Data labeling is the process of establishing ground truth—the objective reality your AI model is trying to learn.

In supervised learning (which is still the workhorse for most production AI systems), the label is the specification. It’s the instruction manual. If your labels are wrong, inconsistent, or ambiguous, your model learns a flawed specification. To understand the broader context of how different AI approaches work together, read our guide on machine learning vs generative AI differences and use cases.

Think of it this way: you can’t code a correct system if the requirements are contradictory. The same principle applies to AI—except instead of writing requirements in English, you’re writing them in labeled data.

The Many Flavors of AI Data Labeling

Modern AI systems require different labeling approaches depending on what you’re building:

Computer Vision

- Image Classification: “This is a cat” (simple)

- Object Detection: Drawing bounding boxes around every cat in the image

- Semantic Segmentation: Labeling every pixel that belongs to a cat

- 3D Cuboids: Reconstructing volumetric representations for autonomous driving

- Video Object Tracking: Maintaining identity across temporal sequences

Natural Language Processing

- Sentiment Analysis: Positive/negative/neutral

- Entity Recognition: Identifying people, places, organizations in text

- Relationship Extraction: Understanding how entities relate to each other

- Response Ranking: For LLM alignment—deciding which answer is “better”

Generative AI Alignment

- Preference Pairs: Ranking model outputs for RLHF (Reinforcement Learning from Human Feedback)

- Constitutional AI: Evaluating outputs against ethical guidelines

- Safety Classification: Identifying harmful or biased content

The complexity scales fast. A simple “cat vs. dog” classifier needs basic labels. An autonomous vehicle needs 3D spatial annotations across multiple sensor inputs, updated in real-time, with sub-centimeter precision.

Ground Truth Is Engineered, Not Found

Here’s what most people get wrong: they treat ground truth like it’s some objective fact waiting to be discovered. But in reality, ground truth is a social construct you engineer through:

- Strict Ontologies: Defining exactly what each label means

- Annotator Training: Teaching humans (or machines) to apply labels consistently

- Quality Assurance Loops: Catching and correcting errors

- Iterative Refinement: Improving the dataset based on model performance

This is why data labeling is an engineering discipline, not a data entry job.

The Economics of AI Data Labeling (Or: Why Cheap Is Expensive)

Let’s talk money.

The global data labeling market is exploding, from about $4 billion in 2024 to a projected $20 billion by 2030 according to Grand View Research. Some analysts think it could hit $130 billion by the early 2030s.

Why the growth? Because every company trying to build AI is discovering the same painful truth: you can’t skip the data work.

The Hidden Costs of “Cheap” Labeling

I see this mistake constantly: teams optimize for the lowest cost-per-label without thinking about Total Cost of Ownership.

Here’s what that “cheap” labeling actually costs you:

Rework and Correction: If 18% of your labels are wrong (a common benchmark for low-quality crowdsourcing), the cost of finding and fixing those errors often exceeds the initial labeling cost. Bad labels are technical debt: they charge you interest in the form of debugging time.

Model Performance Ceiling: Models trained on noisy data hit a performance wall that no amount of hyperparameter tuning can break. The opportunity cost here is a sub-optimal AI product that fails to meet user needs.

Management Overhead: Managing distributed crowdsourced workers requires significant internal project management and QA resources. These costs are almost always underestimated in initial budgets.

Compliance Failures: In regulated sectors, the “cost” of bad labeling includes regulatory fines and legal liability. A single lawsuit can dwarf your entire data budget.
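To make the total-cost argument concrete, here is a rough sketch of the arithmetic. The function name `total_cost`, the per-label prices, and the review and fix costs are all hypothetical numbers picked for illustration; only the 18% error rate comes from the text above.

```python
def total_cost(n_labels, price_per_label, error_rate,
               review_cost_per_label, fix_cost_per_error):
    """Rough total cost of ownership for a labeling job (illustrative only)."""
    labeling = n_labels * price_per_label
    # You pay to inspect labels before you can find the bad ones.
    review = n_labels * review_cost_per_label
    # Each wrong label then has to be corrected.
    rework = n_labels * error_rate * fix_cost_per_error
    return labeling + review + rework

# Hypothetical vendors: every price below is an assumption, not a quote.
cheap = total_cost(100_000, price_per_label=0.05, error_rate=0.18,
                   review_cost_per_label=0.03, fix_cost_per_error=0.25)
careful = total_cost(100_000, price_per_label=0.10, error_rate=0.02,
                     review_cost_per_label=0.01, fix_cost_per_error=0.25)

print(f"cheap vendor:   ${cheap:,.0f}")
print(f"careful vendor: ${careful:,.0f}")
```

Under these assumed numbers, the vendor charging twice as much per label ends up cheaper overall, and that is before counting the model-performance and compliance costs, which are much harder to price.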

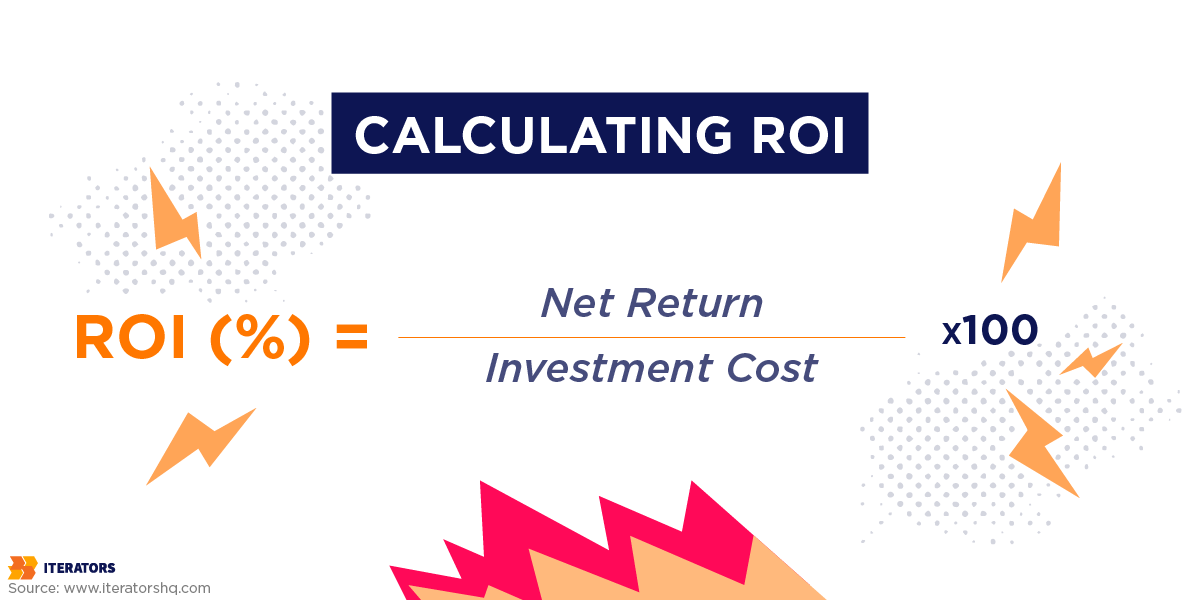

The ROI of Quality Data

On the flip side, high-quality data delivers compounding returns:

Data Efficiency: Clean labels let you hit target performance with fewer training examples. Andrew Ng’s data-centric AI work suggests that cleaning labels in a small dataset often beats tripling the dataset size with noisy data. This reduces compute costs and speeds up experimentation.

Faster Iteration: Well-structured data pipelines enable rapid experimentation cycles. You can test hypotheses, validate improvements, and deploy updates faster—creating a compounding advantage in product velocity.

Risk Mitigation: In healthcare, finance, or autonomous systems, the ROI of accurate labeling includes avoiding catastrophic failures. The cost of a single safety incident can exceed your entire AI budget.

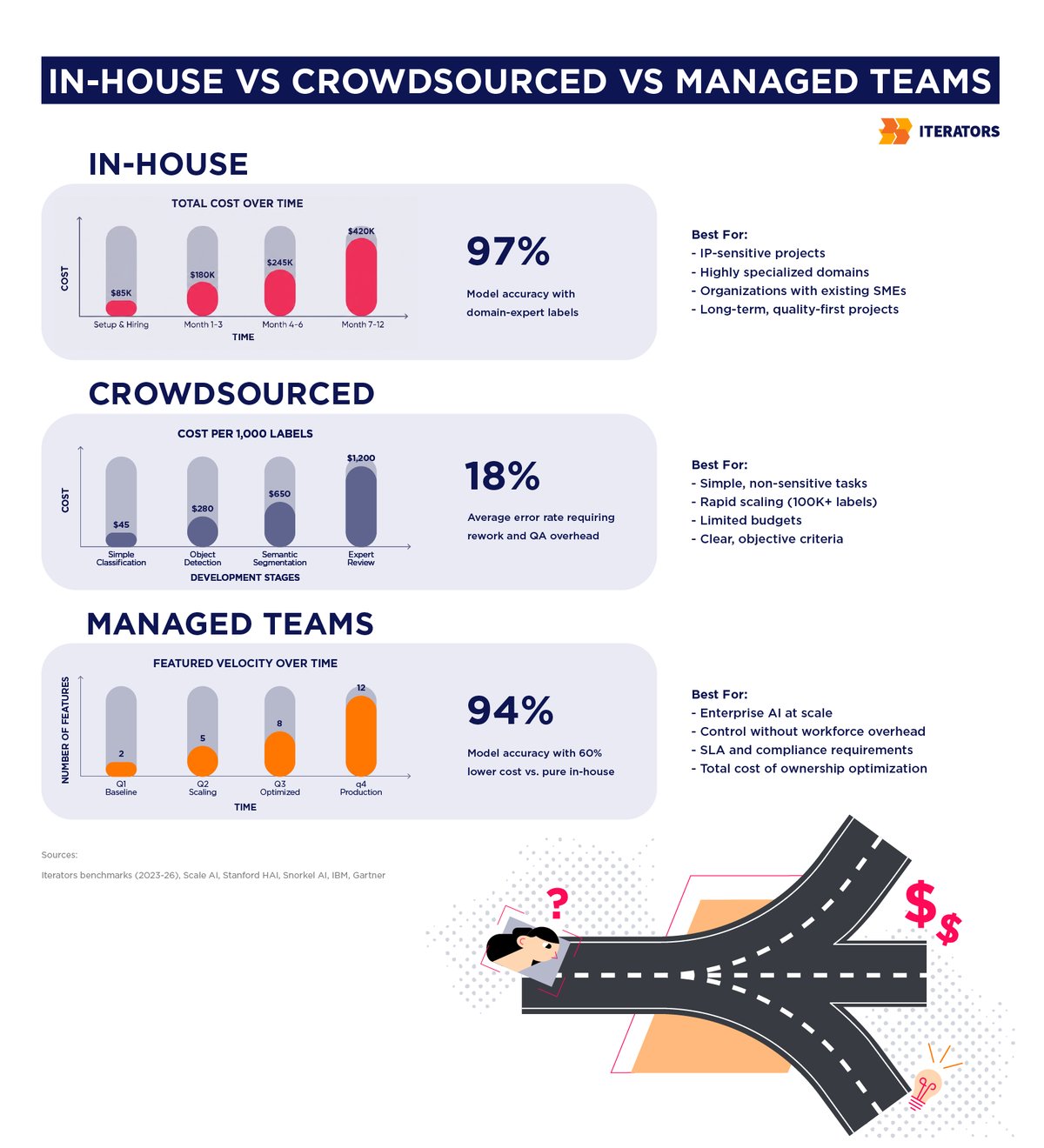

AI Build vs. Buy: The Strategic Decision

You have five basic options:

| Approach | Cost Profile | Quality Control | Best For |
|---|---|---|---|
| In-House Manual | High (fixed + variable) | High (direct oversight) | IP-sensitive, highly specialized domains |
| Crowdsourcing | Low (variable) | Variable (requires strict QA) | Simple, non-sensitive tasks |
| Managed Teams | Medium-High | High (SLA-driven) | Enterprise applications requiring consistent quality |
| Programmatic | Low (setup cost) | Consistent (rule-based) | Large-scale text classification |
| Synthetic | Low (compute) | High (controlled) | Edge cases, privacy-preserving scenarios |

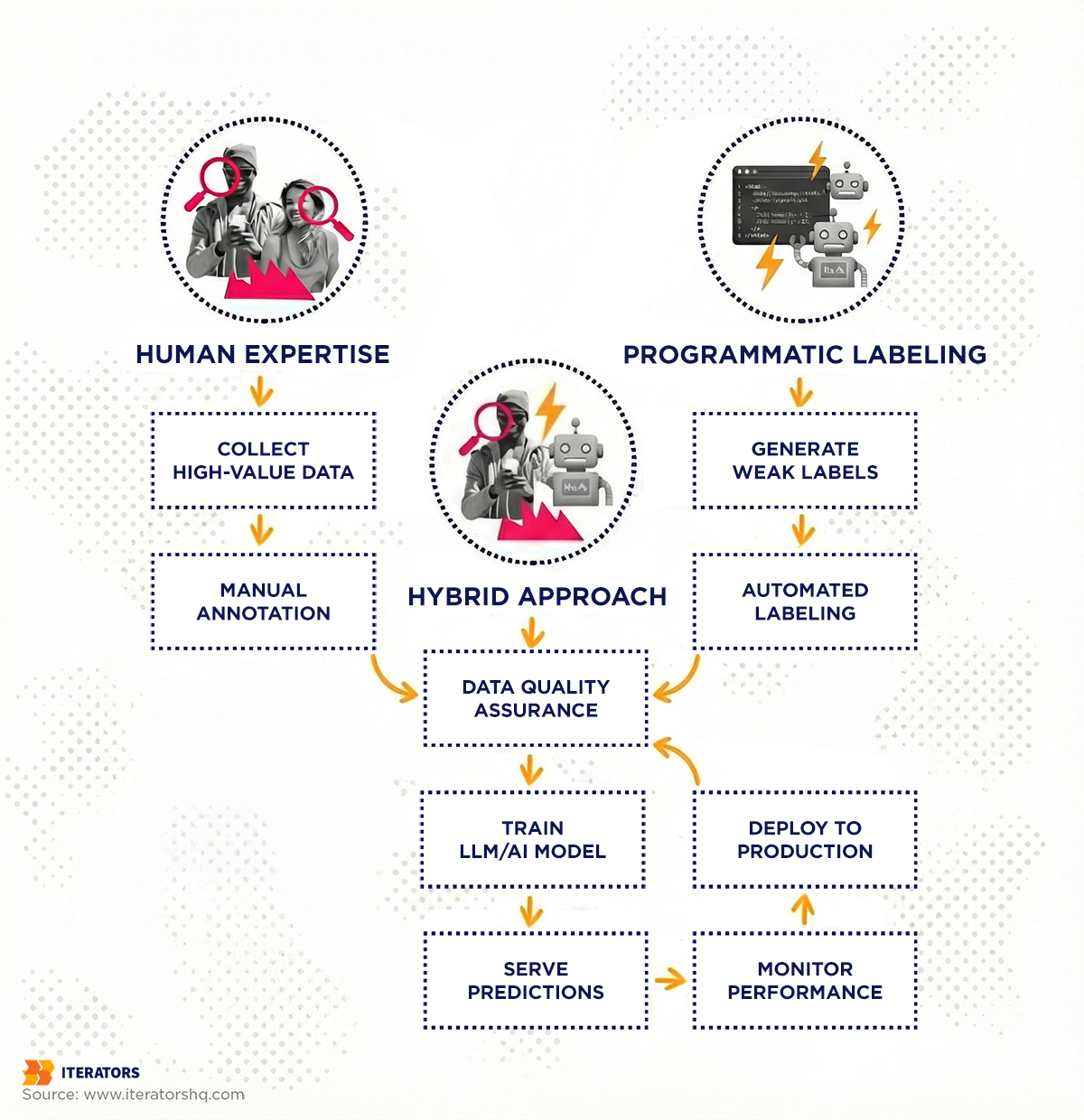

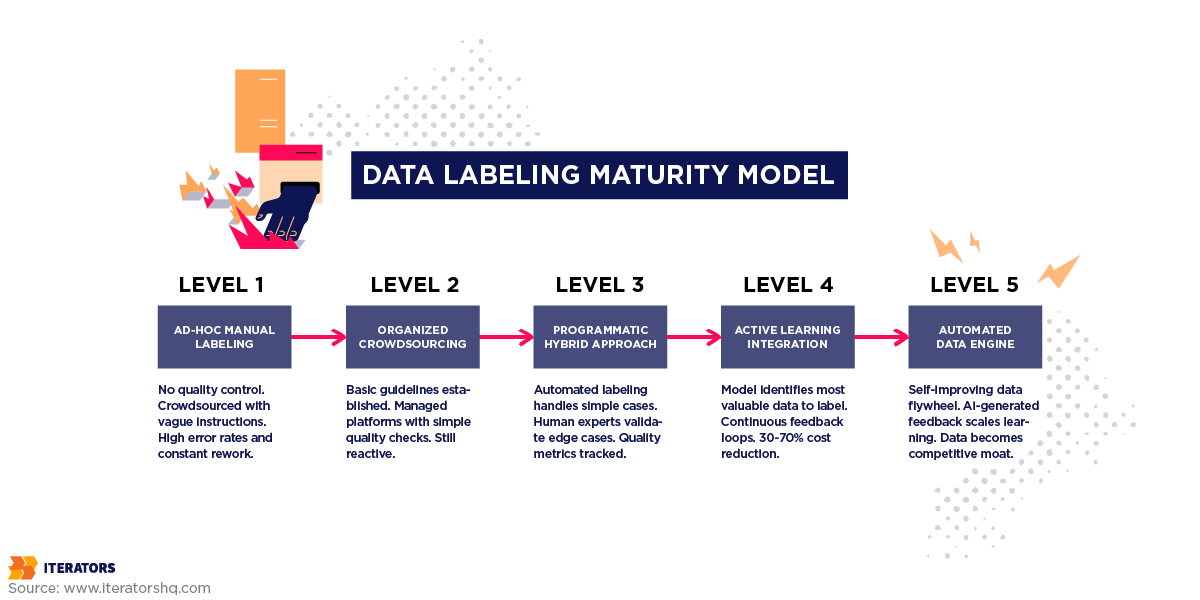

Most successful AI teams use a hybrid approach: programmatic labeling for the easy 80%, active learning to identify the hard 20%, and human experts for the critical edge cases.

How to Actually Label AI Data (The Methods That Work)

Let’s get tactical. Here are the five core approaches to data labeling, when to use each, and how to implement them without losing your mind.

1. Manual Labeling: The Bedrock of Nuance

Despite all the hype around AI automation, human labeling remains foundational, especially for establishing your initial “Golden Set” of ground truth.

When to Use Manual Labeling

- Complex, nuanced tasks requiring expert judgment

- Establishing ground truth for training automated systems

- Domains where context and edge cases matter (medical diagnosis, legal analysis)

- Initial dataset creation for new problem domains

How to Do It Right

Workforce Management: Distinguish between “simple” tasks suitable for crowdsourcing and “expert” tasks requiring subject matter experts (SMEs). You can’t crowdsource the diagnosis of a rare disease from an MRI scan; that requires a board-certified radiologist.

Optimize Cognitive Load: Labeling is mentally taxing. Platforms that reduce clicks and eye movement can improve efficiency by up to 30%. Design your labeling interface to minimize friction.

Measure Consensus: Since humans are subjective, “truth” is often statistical. Use Inter-Annotator Agreement (IAA) metrics like Cohen’s Kappa or Krippendorff’s Alpha to quantify reliability. Low IAA scores mean your instructions are ambiguous or the task is inherently subjective.
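As a rough illustration of what an IAA metric measures, here is a minimal pure-Python sketch of Cohen’s Kappa for two annotators. The function name `cohens_kappa` and the sample sentiment labels are made up for this example; in a real pipeline you would more likely reach for an existing library implementation.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators match.
    p_observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Agreement expected by chance, from each annotator's label frequencies.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    p_expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (p_observed - p_expected) / (1 - p_expected)

# Hypothetical sentiment labels from two annotators on the same ten items.
annotator_1 = ["pos", "pos", "neg", "neg", "pos", "neg", "pos", "neg", "pos", "neg"]
annotator_2 = ["pos", "pos", "neg", "pos", "pos", "neg", "neg", "neg", "pos", "neg"]

kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}")  # prints "kappa = 0.60"
```

Here the annotators agree on 8 of 10 items, but half of that agreement would be expected by chance, so kappa comes out at 0.60 rather than 0.80. That gap is the reason to track kappa instead of raw agreement.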

Common Mistake: Treating all labelers as interchangeable. In reality, you need different tiers: crowd workers for simple tasks, trained annotators for standard work, and SMEs for complex edge cases.

2. Programmatic Labeling: Write Functions, Not Labels

Programmatic labeling represents a paradigm shift: instead of labeling individual data points, you write functions that label data at scale.

The Snorkel Paradigm

Instead of manually tagging 10,000 emails as “Spam” or “Not Spam,” you write labeling functions like:

IF “urgent” IN subject AND “click here” IN body THEN label = SPAM

IF sender_domain IN known_spam_domains THEN label = SPAM

IF email_has_attachment AND subject_contains(“invoice”) THEN label = NOT_SPAM

Frameworks like Snorkel AI let you write multiple ‘noisy’ labeling functions based on heuristics, pattern matching, or external knowledge bases. The system uses a generative model to “denoise” these inputs, learning the correlations and accuracies of different functions to produce probabilistic “weak labels.”
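The three rules above can be sketched as plain Python functions. This is a simplified illustration, not Snorkel’s API: the email dictionary fields, the `KNOWN_SPAM_DOMAINS` blocklist, and the `weak_label` helper are hypothetical, and a simple majority vote stands in for the learned model that a framework like Snorkel would use to weigh the functions.

```python
from collections import Counter

SPAM, NOT_SPAM, ABSTAIN = 1, 0, -1
KNOWN_SPAM_DOMAINS = {"spam.example"}  # hypothetical blocklist

def lf_urgent_click(email):
    subject, body = email["subject"].lower(), email["body"].lower()
    return SPAM if "urgent" in subject and "click here" in body else ABSTAIN

def lf_spam_domain(email):
    domain = email["sender"].rsplit("@", 1)[-1].lower()
    return SPAM if domain in KNOWN_SPAM_DOMAINS else ABSTAIN

def lf_invoice_attachment(email):
    has_invoice = "invoice" in email["subject"].lower()
    return NOT_SPAM if email["has_attachment"] and has_invoice else ABSTAIN

LABELING_FUNCTIONS = [lf_urgent_click, lf_spam_domain, lf_invoice_attachment]

def weak_label(email):
    """Majority vote over non-abstaining functions; abstain on no votes or a tie."""
    votes = [v for v in (lf(email) for lf in LABELING_FUNCTIONS) if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]

# Hypothetical emails for illustration.
emails = [
    {"subject": "URGENT: verify your account", "body": "Click here now",
     "sender": "a@spam.example", "has_attachment": False},
    {"subject": "Invoice for March", "body": "See attached",
     "sender": "billing@vendor.example", "has_attachment": True},
    {"subject": "Lunch?", "body": "Are you free today?",
     "sender": "friend@mail.example", "has_attachment": False},
]
labels = [weak_label(e) for e in emails]
print(labels)  # prints [1, 0, -1]
```

Each function is allowed to abstain, which is what makes them cheap to write: a rule only needs to be right when it fires. Emails where every function abstains, like the third one, are natural candidates for human review.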

Advantages

- Scalability: Once functions are written, labeling a million data points costs the same as labeling a thousand

- Agility: When definitions change, update the function and re-run—no need to re-label the entire dataset

- Transparency: The labeling logic is explicit and auditable

Limitations

- Struggles with nuance and high-precision requirements

- Typically serves as a starting point (weak supervision) refined by human review

- Requires domain expertise to write good labeling functions

Pro Tip: Use programmatic labeling to create a baseline model quickly, then use that model’s predictions to identify edge cases for human review.

3. Active Learning: Mathematical Efficiency

Active learning challenges the assumption that all data is equally valuable. It answers: “Of the 10 million unlabeled images I have, which 1,000 should I pay humans to label to get maximum performance improvement?”

Core Strategies

Uncertainty Sampling: Query instances where the model’s prediction confidence is lowest. If your model is 50/50 on whether an image is a cat or dog, that image is highly informative.

Diversity Sampling: Select instances dissimilar to what the model has already seen, ensuring coverage of the entire data distribution.

Expected Model Change: Select instances that, if labeled, would cause the greatest change in model parameters.

Impact on Cost

Reported results suggest active learning can reduce labeling costs by 30-70% while maintaining or improving model accuracy. It’s not about how much you label; it’s about what you label.

Implementation Strategy

- Train initial model on small labeled dataset

- Use model to score unlabeled data for informativeness

- Send high-value examples to human labelers

- Retrain model with new labels

- Repeat until performance plateaus
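The scoring step in that loop can be sketched with uncertainty sampling, using prediction entropy as the informativeness score. The item ids, probabilities, and the `select_for_labeling` helper are hypothetical; a real system would take the probabilities from your current model.

```python
import math

def entropy(probs):
    """Shannon entropy of a predicted class distribution (higher = less certain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_labeling(predictions, budget):
    """Return the ids of the `budget` most uncertain unlabeled examples."""
    ranked = sorted(predictions, key=lambda item_id: entropy(predictions[item_id]),
                    reverse=True)
    return ranked[:budget]

# Hypothetical model outputs: P(cat), P(dog) for four unlabeled images.
predictions = {
    "img_001": [0.98, 0.02],
    "img_002": [0.51, 0.49],
    "img_003": [0.70, 0.30],
    "img_004": [0.90, 0.10],
}
to_label = select_for_labeling(predictions, budget=2)
print(to_label)  # prints ['img_002', 'img_003']
```

The near 50/50 image is picked first and the 98% confident one is never sent to a human. Diversity sampling or expected model change would swap in a different scoring function while the surrounding loop stays the same.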

Common Mistake: Waiting until you have a “complete” dataset before training. Start with a small labeled set, train a baseline model, and use active learning to guide subsequent labeling efforts.

4. Synthetic Data: Manufacturing Ground Truth

Synthetic data is artificially generated information that mimics the statistical properties of real-world data. It’s becoming essential for edge cases, privacy-sensitive domains, and data-starved applications.

When Synthetic Data Shines

Cold Start Problems: For new problems where real data is scarce (rare accident scenarios for autonomous vehicles), synthetic data bootstraps model training.

Privacy Preservation: In healthcare and finance, synthetic data allows sharing datasets that statistically resemble real customer data without exposing PII.

Edge Case Coverage: Generate rare scenarios that would take years to collect naturally (e.g., extreme weather conditions for autonomous driving).

Performance Benchmarks

Recent studies suggest synthetic data generation can be around 50x faster than human labeling, but models trained purely on synthetic data may lag in accuracy by up to 35% for highly context-sensitive tasks.

The sweet spot? Hybrid approaches combining synthetic data for breadth and human-labeled data for depth show ~23% performance improvements while reducing overall costs by over 60%.

The Model Collapse Problem

There’s a risk with synthetic data: models trained exclusively on synthetic data can drift away from reality, amplifying the artifacts and hallucinations of the generator. Always validate synthetic data against real-world examples.

5. RLHF and RLAIF: Aligning LLMs

Aligning LLMs is the frontier of data labeling. This involves complex feedback loops that go beyond simple classification.

RLHF (Reinforcement Learning from Human Feedback)

RLHF has become the industry standard for aligning LLMs like ChatGPT and Claude. Humans don’t just “label”; they rank. They’re presented with two model responses and asked which is better based on criteria like helpfulness and safety.

These rankings train a “Reward Model,” which then guides the LLM’s reinforcement learning process. This captures nuanced human preferences that are hard to codify explicitly.
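To show how a ranking turns into a training signal, here is a minimal sketch of the pairwise (Bradley-Terry style) loss commonly used for reward models. The text above does not say which loss any particular lab uses, so treat this as one standard formulation; the reward values are made up.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Negative log-likelihood that the chosen response beats the rejected one."""
    margin = reward_chosen - reward_rejected
    # -log(sigmoid(margin)) == log(1 + exp(-margin)), computed without overflow.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Hypothetical reward-model scores for (human-preferred, rejected) responses.
pairs = [(1.8, 0.2), (0.1, 0.9), (0.5, 0.5)]
losses = [preference_loss(chosen, rejected) for chosen, rejected in pairs]
mean_loss = sum(losses) / len(losses)
print([round(loss, 3) for loss in losses], round(mean_loss, 3))
```

The loss is small when the reward model already scores the human-preferred response higher (first pair) and large when it gets the order wrong (second pair). Minimizing it over many labeled pairs is what pulls the reward model toward the annotators’ preferences.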

RLAIF (AI Feedback): The Scalability Solution

RLHF is slow, expensive, and hard to scale. RLAIF replaces the human ranker with a highly capable AI model (like GPT-4) acting as the judge.

RLAIF is significantly faster and cheaper. While human feedback is necessary for the highest-quality “gold standard” alignment, AI feedback scales effectively for broad preference learning.

Strategic Insight: Use RLHF to establish your reward model and preference guidelines, then use RLAIF to scale feedback collection across your entire dataset.

Industry-Specific Strategies (What Actually Works)

Data labeling strategies vary dramatically across industries. Here’s what we’ve learned helping clients in different sectors.

Automotive: Tesla’s Fleet Learning Masterclass

Tesla represents the pinnacle of industrial-scale AI data operations. Its approach to Full Self-Driving training eschews traditional lidar maps in favor of a vision-only system powered by massive fleet data. Tesla’s AI Day presentations cover the approach in more detail.

The Data Engine

Tesla’s fleet actively hunts for edge cases. If the model struggles with a specific scenario (e.g., a particular type of construction cone), engineers query the fleet to upload video clips matching that visual signature. This is massive-scale Active Learning.

Cars run new models in “Shadow Mode”—making predictions without controlling the vehicle—and compare predictions to human driver actions. Discrepancies are flagged as high-value training examples.

Auto-Labeling Pipeline

Labeling millions of miles of video manually is impossible. Tesla developed auto-labeling infrastructure that reconstructs 3D scenes from 2D video clips using the vehicle’s odometry and multiple camera angles.

Because the car is moving, the system uses scene geometry (structure from motion) to automatically label static objects like curbs and lanes with sub-centimeter precision. This “4D” labeling (3D space + Time) creates datasets far superior to human 2D drawing.

The Moat: Tesla turned their customer base into a distributed data ingestion and validation workforce, creating a data moat that’s arguably insurmountable by competitors relying on smaller test fleets.

Healthcare: High-Stakes Compliance

In healthcare, the cost of error is patient safety. Data labeling is constrained by HIPAA/GDPR regulations and the scarcity of qualified annotators.

Pathology and Multi-Instance Learning

Companies like PathAI use AI for pathology slide analysis. The challenge: “labelers” must be board-certified pathologists whose time is incredibly expensive.

Solution: Multi-Instance Learning (MIL). Instead of asking pathologists to outline every cancer cell (impossible), they label the whole slide as “Cancerous.” The model learns to identify specific regions (patches) that correlate with that label.
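One common way to frame MIL is the max-pooling assumption: a slide (the “bag”) is positive if at least one of its patches is. The sketch below shows only the inference side of that idea with made-up patch scores. It is a generic illustration, not PathAI’s pipeline, and the `slide_prediction` helper is hypothetical.

```python
def slide_prediction(patch_scores, threshold=0.5):
    """Max-pooling MIL: a slide is positive if its most suspicious patch is."""
    top_index = max(range(len(patch_scores)), key=patch_scores.__getitem__)
    top_score = patch_scores[top_index]
    return top_score >= threshold, top_index, top_score

# Hypothetical per-patch cancer probabilities from a patch-level model.
patch_scores = [0.03, 0.08, 0.91, 0.12, 0.40]
is_cancerous, patch, score = slide_prediction(patch_scores)
print(is_cancerous, patch, score)  # prints True 2 0.91
```

During training, the pathologist’s slide-level label is compared against the pooled score, so the gradient flows to whichever patches drive the prediction. That is how the model ends up localizing regions nobody outlined by hand.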

Virtual Staining

Virtual staining uses AI to predict how a tissue sample would look with different chemical stains, essentially generating synthetic data to augment the pathologist’s view without the cost of physical lab work.

Compliance Architecture

For HIPAA compliance, data lineage must be baked into the labeling platform. You must be able to show why the model made a decision based on its training data, which requires end-to-end tracking from raw data through labeling to model predictions. Security considerations extend beyond compliance to active threat detection: explore how generative AI is transforming cybersecurity to protect sensitive training data from emerging attack vectors.

Fintech: Fraud Detection and Class Imbalance

Fintech AI relies on finding needles in haystacks. Fraudulent transactions are rare events (often <0.17% of transactions), which makes standard supervised learning difficult.

Synthetic Upsampling

With fraud rates below 0.17%, models trained on raw data bias heavily toward “non-fraud.” Fintechs use synthetic data generation (SMOTE, GANs) to upsample fraudulent transactions, creating balanced datasets without waiting for real crimes to occur.
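The core idea behind SMOTE can be sketched in a few lines: create new minority-class points by interpolating between a real fraud example and one of its nearest fraud neighbors. This is a simplified, hypothetical `smote_like` helper on toy two-feature data, not the reference algorithm; production code would normally use a maintained implementation such as the one in the imbalanced-learn package.

```python
import math
import random

def smote_like(minority, n_new, k=2, seed=0):
    """Generate n_new synthetic points by interpolating between minority neighbors."""
    if len(minority) < 2:
        raise ValueError("need at least two minority examples to interpolate")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # The k nearest other minority points, by Euclidean distance.
        neighbors = sorted((p for p in minority if p is not base),
                           key=lambda p: math.dist(base, p))[:k]
        neighbor = rng.choice(neighbors)
        t = rng.random()  # position along the segment from base to neighbor
        synthetic.append(tuple(b + t * (n - b) for b, n in zip(base, neighbor)))
    return synthetic

# Hypothetical fraud examples as (amount_zscore, velocity_zscore) features.
fraud = [(2.1, 3.0), (2.4, 2.7), (3.0, 3.3), (2.8, 2.5)]
new_fraud = smote_like(fraud, n_new=6)
print(len(new_fraud), new_fraud[0])
```

Because every synthetic point sits on a line between two real fraud cases, the upsampled class stays inside the region the real data covers. That is also its limit: interpolation cannot invent a fraud pattern you have never observed.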

Network Effects in Labeling

Mastercard’s “Consumer Fraud Risk” solution demonstrates the power of network effects: confirmed fraud in one bank labels that account/device across the entire network. This creates a shared ground truth that individual institutions couldn’t build alone.

Self-Supervised Anomaly Detection

Monzo uses self-supervised embeddings of customer behavior to detect anomalies. By understanding what “normal” looks like for every user, they detect deviations without needing explicit “fraud” labels for every new attack vector.

Choosing Your Tools (Without Getting Fleeced)

The tooling landscape has bifurcated into “Platform” solutions and “Open Source” components. Here’s how to navigate it.

Commercial Platforms

Scale AI: The “Amazon” of labeling. Massive scale, API-driven “Data Engine,” dominant in autonomous driving and generative AI. Best for teams who want to outsource the problem entirely and have the budget to do so.

Labelbox: Positions itself as the “Adobe” of labeling—powerful software for your own teams. Emphasizes “Data Curation” and vector-based searching of unstructured data to find edge cases. Best for enterprises wanting to retain control over labeling workforce and data workflows.

Snorkel AI: Leader in programmatic labeling. Platform designed for organizations with massive unlabeled text/log datasets who want to use weak supervision to label at scale. Best for “data-rich, label-poor” environments.

Open Source Alternatives

CVAT (Computer Vision Annotation Tool): Excellent for video and image annotation. Widely used by engineering teams building internal PoCs or specialized vision tools.

Label Studio: Versatile, multi-modal tool that can be self-hosted. Popular for flexibility and integration into custom MLOps pipelines.

Strategic Advice

For early-stage startups or highly specialized, IP-heavy tasks: self-host a tool like Label Studio and staff it with an internal labeling team. This balances cost and security.

For scaling to enterprise production, especially for GenAI or autonomous systems where volume is massive: partner with platforms like Scale or Labelbox to handle workforce management and QA overhead.

Integration with MLOps

Your labeling tools must integrate tightly with your MLOps pipeline (MLflow, Kubeflow, Databricks).

Data Lineage: Every model version should be traceable back to the specific dataset version and annotations that trained it. Critical for debugging model regressions.

Continuous Training: The pipeline should support automated triggers. When model confidence drops in production (drift), automatically sample low-confidence data, send for labeling, and trigger re-training.
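For the data lineage requirement, one lightweight approach is to fingerprint the exact set of labels a model was trained on and store that hash with the model. The sketch below assumes labels are available as `(item_id, label)` pairs; the `dataset_fingerprint` helper, the model name, and the ontology version are hypothetical.

```python
import hashlib
import json

def dataset_fingerprint(records):
    """SHA-256 over (item_id, label) pairs, independent of record order."""
    canonical = json.dumps(sorted(records), separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

# Two hypothetical label snapshots: txn_002 was relabeled between them.
labels_v1 = [("txn_001", "fraud"), ("txn_002", "normal"), ("txn_003", "normal")]
labels_v2 = [("txn_001", "fraud"), ("txn_002", "fraud"), ("txn_003", "normal")]

# Hypothetical lineage record stored next to the trained model artifact.
lineage = {
    "model_version": "fraud-clf-1.4.0",
    "ontology_version": "v3",
    "dataset_sha256": dataset_fingerprint(labels_v1),
}
print(json.dumps(lineage, indent=2))
```

A single relabeled item changes the hash, so when a model regresses you can tell at a glance whether the labels changed between two training runs before you start digging through the code.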

Quality Assurance: The Metrics of Trust

In Data-Centric AI, QA isn’t checking code; it’s checking labels. Quality is defined by Accuracy, Consistency, and Completeness.

Measuring Consensus

Human annotators disagree. If you ask three people to label the sentiment of a complex tweet, you might get three different answers.

Gold Sets: A subset of data labeled by trusted experts. All annotators are continuously tested against this set. If accuracy drops below threshold, they’re automatically paused or removed from the pool.

Consensus Algorithms: For high-stakes data, “ground truth” is often derived by taking majority vote of multiple annotators, or using weighted vote based on historical accuracy.
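Gold sets and weighted voting fit together naturally: score each annotator against the gold set, pause anyone below the threshold, and use the remaining scores as vote weights. The following is a minimal sketch with made-up annotators, labels, and a 0.7 threshold chosen only for illustration.

```python
from collections import defaultdict

def gold_accuracy(annotations, gold):
    """Per-annotator accuracy on gold items. annotations: {annotator: {item: label}}."""
    scores = {}
    for annotator, labels in annotations.items():
        graded = [item for item in labels if item in gold]
        if graded:
            scores[annotator] = sum(labels[i] == gold[i] for i in graded) / len(graded)
    return scores

def weighted_vote(item_id, annotations, weights):
    """Label with the highest total weight among annotators who still have a weight."""
    totals = defaultdict(float)
    for annotator, labels in annotations.items():
        if item_id in labels and annotator in weights:
            totals[labels[item_id]] += weights[annotator]
    return max(totals, key=totals.get) if totals else None

# Hypothetical gold set and annotations; "t1" is a real task with no gold answer.
gold = {"g1": "pos", "g2": "neg", "g3": "pos", "g4": "neg"}
annotations = {
    "ann_a": {"g1": "pos", "g2": "neg", "g3": "pos", "g4": "neg", "t1": "neg"},
    "ann_b": {"g1": "pos", "g2": "neg", "g3": "neg", "g4": "neg", "t1": "pos"},
    "ann_c": {"g1": "neg", "g2": "pos", "g3": "pos", "g4": "neg", "t1": "pos"},
}

scores = gold_accuracy(annotations, gold)
active = {name: acc for name, acc in scores.items() if acc >= 0.7}  # assumed threshold
consensus = weighted_vote("t1", annotations, active)
print(scores, consensus)
```

A plain majority vote on `t1` would return "pos" (two votes to one). Once the annotator scoring 50% on gold is paused and the others are weighted by accuracy, the consensus flips to "neg", the answer from the annotator with a perfect gold record.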

The Ontology Problem

Most labeling errors stem from instructions, not labels. Ambiguous ontologies (e.g., “Label all ‘large’ vehicles”—what counts as large?) lead to noise.

Best Practice: Iterative ontology refinement. Start with a small pilot batch, measure confusion matrices to identify frequently confused classes, and refine guidelines before scaling up.

If labels consistently confuse “truck” and “van,” the definition needs to be clearer, or the classes should be merged.
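Finding the confused classes in a pilot batch does not need heavy tooling. This sketch counts which pairs of classes get swapped most often when annotator labels are compared with expert labels; the vehicle labels and the `most_confused_pairs` helper are made up for illustration.

```python
from collections import Counter

def most_confused_pairs(gold_labels, annotator_labels, top_n=3):
    """Count disagreements per unordered class pair, most frequent first."""
    confusions = Counter(
        tuple(sorted((g, a)))
        for g, a in zip(gold_labels, annotator_labels)
        if g != a
    )
    return confusions.most_common(top_n)

# Hypothetical pilot batch: expert labels vs. one annotator's labels.
expert = ["truck", "van", "truck", "car", "van", "truck", "car", "van"]
annotator = ["van", "van", "truck", "car", "truck", "van", "car", "car"]

pairs = most_confused_pairs(expert, annotator)
print(pairs)  # prints [(('truck', 'van'), 3), (('car', 'van'), 1)]
```

Three of the four errors are truck/van swaps, which points at the guideline for those two classes rather than at the annotator.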

Quality Metrics to Track

Inter-Annotator Agreement (IAA)

- Cohen’s Kappa (two annotators)

- Krippendorff’s Alpha (multiple annotators)

- Target: >0.8 for production datasets

Label Accuracy

- Percentage of labels matching gold set

- Target: >95% for critical applications

Labeling Speed

- Time per label (track for efficiency, not as primary quality metric)

- Watch for anomalies (too fast = careless, too slow = confusion)

Coverage

- Percentage of data successfully labeled

- Identify systematic gaps in coverage

Common Mistakes (And How to Avoid Them)

Let me save you some pain by highlighting the mistakes I see constantly:

Mistake #1: Optimizing for Cost Per Label

The Trap: Choosing the cheapest labeling service without considering quality, leading to high rework costs and poor model performance.

The Fix: Optimize for Total Cost of Ownership, including rework, model performance, and opportunity cost of delays.

Mistake #2: Treating Labeling as a One-Time Task

The Trap: Assuming you label data once and you’re done, ignoring data drift and changing requirements.

The Fix: Build continuous labeling pipelines that adapt to production feedback and evolving business needs.

Mistake #3: Ignoring Class Imbalance

The Trap: Training on imbalanced datasets (e.g., 99% “normal,” 1% “fraud”) without addressing the imbalance.

The Fix: Use synthetic data, resampling techniques, or cost-sensitive learning to handle imbalanced classes.

Mistake #4: Skipping Pilot Batches

The Trap: Scaling labeling to the full dataset before validating the ontology and instructions.

The Fix: Always start with a pilot batch of 100-1,000 examples, measure IAA, refine guidelines, then scale.

Mistake #5: No Feedback Loop to Labelers

The Trap: Labelers never learn if they’re doing well or poorly, leading to systematic errors.

The Fix: Provide regular feedback, gold set performance scores, and opportunities for annotators to ask questions.

Strategic Roadmap: What to Do Monday Morning

Here’s your action plan for building a data labeling operation that actually works:

Phase 1: Audit (Week 1)

Assess Current State

- What data do you have?

- What’s the quality of existing labels?

- What are your model performance gaps?

Define Requirements

- What labels do you need?

- What’s your target quality threshold?

- What’s your budget and timeline?

Identify Approach

- In-house vs. outsourced?

- Manual vs. programmatic vs. synthetic?

- What tools will you use?

Phase 2: Pilot (Weeks 2-4)

Build Ontology

- Define clear labeling guidelines

- Create examples of edge cases

- Document decision criteria

Label Pilot Batch

- 100-1,000 examples

- Multiple annotators per example

- Measure IAA and accuracy

Refine Process

- Update guidelines based on confusion

- Adjust labeling interface

- Validate approach before scaling

Phase 3: Scale (Months 2-3)

Implement Hybrid Strategy

- Programmatic labeling for easy cases

- Active learning to identify hard cases

- Human experts for critical examples

Build QA Pipeline

- Gold set validation

- Automated quality checks

- Regular annotator feedback

Integrate with MLOps

- Connect labeling to the training pipeline

- Track data lineage

- Enable continuous learning

Phase 4: Optimize (Ongoing)

Monitor Performance

- Model accuracy on labeled data

- Production performance metrics

- Cost per quality label

Iterate Based on Feedback

- Identify model failure modes

- Collect edge cases from production

- Continuously improve dataset

Scale Infrastructure

- Automate what works

- Build institutional knowledge

- Document best practices

When to Partner with Experts (And When to Build In-House)

Look, I run a software development company. You’d expect me to tell you to outsource everything. But that’s not always the right answer.

Build In-House When:

You Have Highly Specialized Domain Knowledge: If your labeling requires deep expertise that’s core to your business (e.g., proprietary medical imaging), keep it in-house.

You’re Handling Sensitive IP: If your training data represents a competitive advantage or contains trade secrets, maintaining control is worth the overhead.

You Have Scale and Resources: If you’re labeling millions of examples continuously, building internal infrastructure might be more cost-effective long-term.

Partner with Experts When:

You’re Just Getting Started: Early-stage teams should focus on product-market fit, not building labeling infrastructure. Partner to move fast.

You Need Specialized Tools or Workforce: If you need managed annotation teams, specialized tools, or expertise you don’t have in-house, partnering accelerates time-to-market.

You’re Scaling Rapidly: When you need to go from 10,000 labels to 10 million labels, partners with existing infrastructure can scale faster than you can hire.

You Want to Focus on Core Competencies: If data labeling isn’t your competitive advantage, outsource it and focus resources on what differentiates your product.

The Hybrid Approach (Often Best)

Most successful teams use a hybrid model:

- Internal team defines ontology and validates quality

- External partners handle high-volume labeling

- Programmatic/synthetic approaches reduce manual work

- Active learning focuses human effort on high-value examples

At Iterators, we help clients build this hybrid infrastructure, from defining labeling strategies to integrating with production ML pipelines, as demonstrated in our client case studies. We’ve seen what works (and what fails spectacularly) across healthcare, fintech, autonomous systems, and enterprise AI.

The Future: AI Labeling AI

The trajectory is clear: AI will increasingly label data for AI.

RLAIF (Reinforcement Learning from AI Feedback) and synthetic data generation are driving the marginal cost of “general” labeling toward zero. The value is retreating into the niche, the proprietary, and the expert.

The competitive advantage of the future won’t be who can label a stop sign (solved problem). It will be who has proprietary datasets of:

- Successful pancreatic surgery outcomes

- High-risk fintech transaction patterns

- Rare material failure modes

- Edge cases in autonomous driving

These datasets, labeled by the world’s best experts, will be the moats that define the next generation of AI companies.

The intersection of AI with other emerging technologies is also creating new opportunities: learn about AI in blockchain applications that require novel approaches to data validation and consensus.

Conclusion: Data Is the New Code

Data labeling is the programming language of the future.

Just as we spent decades refining software engineering practices—version control, unit testing, CI/CD—we must now apply that same engineering rigor to our data operations.

The organizations that master this “Data-Centric” discipline will build the AI systems that define the next decade. Those that treat data as a commodity will remain trapped in the cycle of proof-of-concept failure.

Here’s the bottom line:

Your AI model is only as good as the data you feed it. You can have the most sophisticated architecture in the world, but if your training data is garbage, your model will confidently produce garbage.

The good news? Fixing your data labeling process is probably the highest-leverage thing you can do right now. It doesn’t require a PhD in machine learning. It doesn’t require bleeding-edge research. It just requires treating data with the same engineering discipline you apply to code.

Start small. Label a pilot batch. Measure quality. Iterate. Scale what works.

And if you need help navigating this landscape—from choosing the right approach to building production-grade labeling pipelines—we’d love to talk. At Iterators, we’ve helped dozens of companies build AI systems that actually work in production, and data quality is where it all starts.

Frequently Asked Questions

How long does data labeling typically take?

It depends on complexity and volume. Simple image classification might take seconds per image. Complex medical imaging or legal document analysis can take minutes to hours per example. For a typical ML project, expect 2-4 weeks for a pilot batch and 2-3 months to label a production dataset.

What’s the difference between data labeling and data annotation?

They’re often used interchangeably, but technically: annotation is the broader process of adding metadata to data (timestamps, tags, notes), while labeling specifically refers to assigning categories or values for ML training. In practice, most people use “labeling” to mean both.

Can AI automate data labeling?

Partially. AI can handle simple, high-confidence cases through programmatic labeling and active learning. But humans are still essential for establishing ground truth, handling edge cases, and validating quality. The future is hybrid: AI for scale, humans for nuance.

How do I calculate how much training data I need?

Rule of thumb: aim for at least 10 times as many examples per class as you have features, but this varies wildly. Start with a few hundred labeled examples per class, train a baseline model, and use learning curves to estimate how much more data would improve performance. Active learning can reduce requirements by 30-70%.

What are the biggest data labeling mistakes to avoid?

- Optimizing for cheapest cost-per-label without considering quality;

- Treating labeling as one-time task instead of continuous process;

- Skipping pilot batches to validate ontology;

- Ignoring class imbalance;

- No feedback loop to labelers.

Is it worth building an in-house labeling team?

It depends on your scale, domain expertise, and IP sensitivity. Build in-house if you have highly specialized domain knowledge, sensitive IP, or massive continuous labeling needs. Partner with experts if you’re early-stage, need to scale rapidly, or want to focus resources on core competencies.

How do I measure data labeling quality?

Track Inter-Annotator Agreement (IAA) using metrics like Cohen’s Kappa or Krippendorff’s Alpha (target >0.8). Measure label accuracy against gold sets (target >95% for production). Monitor labeling speed for anomalies. Track coverage to identify systematic gaps.

What should I look for in a data labeling partner?

Domain expertise in your industry, proven quality assurance processes, transparent pricing, integration with your MLOps stack, compliance with relevant regulations (HIPAA, GDPR), and references from similar projects. Avoid partners who can’t explain their QA methodology or don’t provide visibility into annotator performance.