Picture this: A radiologist sits down with their morning coffee, ready to review 200 chest X-rays. Without advanced healthcare software, this would take hours of intense concentration, with the ever-present risk of missing a subtle pneumonia shadow on scan #147 when fatigue sets in.

Now imagine that same radiologist working alongside an AI system that’s already flagged the 12 most concerning cases, highlighted potential problem areas, and provided preliminary assessments—all before that first sip of coffee gets cold.

This isn’t science fiction. It’s happening right now in hospitals worldwide, powered by deep learning applications in predictive healthcare. These sophisticated AI systems are transforming how healthcare organizations predict patient outcomes, prevent complications, and deliver personalized care at unprecedented scale.

Here’s the reality check: Healthcare is drowning in data but starving for actionable insights. The average hospital generates 50 petabytes of data annually (roughly 50 million gigabytes), yet most of it sits unused in digital archives. Meanwhile, physicians are overwhelmed, patients wait longer for diagnoses, and healthcare costs continue their relentless climb.

Deep learning is changing this equation fundamentally. Unlike traditional analytics that require humans to manually identify patterns, deep learning systems discover complex relationships in medical data that even expert clinicians might miss. The technology has evolved from academic curiosity to clinical necessity: the FDA’s AI/ML device database lists more than 500 authorized AI/ML-enabled medical devices.

But here’s what the glossy vendor presentations won’t tell you: implementing healthcare software isn’t about plugging in an algorithm and watching magic happen. It’s about navigating HIPAA compliance minefields, integrating with legacy EHR systems that were designed when flip phones were cutting-edge, managing change-resistant clinical workflows, and—most critically—building solutions that actually improve patient outcomes rather than just generating impressive demo videos.

This guide cuts through the hype. We’ll show you exactly how deep learning is transforming healthcare across seven high-impact applications, what it actually takes to implement these systems in production environments, and how to avoid the expensive mistakes that sink most healthcare AI projects before they deliver value.

Whether you’re a CTO evaluating AI investments, a product manager building healthtech solutions, or a healthcare executive exploring digital transformation, you’ll walk away with a clear roadmap for leveraging deep learning to deliver measurable improvements in patient care—without the usual vendor fairy tales.

Ready to turn that roadmap into results? If you’re wrestling with HIPAA requirements, legacy EHR integration, and real-world clinical workflows—and want measurable outcomes instead of another flashy demo—schedule a free consultation with Iterators. In 30 minutes, we’ll help pinpoint your highest-impact use cases, de-risk integration, and outline a pilot clinicians will actually adopt.

What is Deep Learning in Healthcare Software? (And Why It Matters Now)

Let’s start by clearing up the confusion that plagues most conversations about healthcare software.

When healthcare executives talk about “AI,” they’re usually conflating three distinct technologies: traditional machine learning, deep learning, and now generative AI. Understanding these differences isn’t academic nitpicking—it’s the foundation for making smart investment decisions. For a deeper dive into this distinction, see our comprehensive guide on machine learning vs deep learning.

Deep Learning Applications in Healthcare Software vs. Traditional Machine Learning

Traditional machine learning in healthcare works like a very sophisticated checklist. You feed it patient data—lab results, vital signs, demographics—and it applies statistical rules to make predictions. If blood pressure is above X and cholesterol is above Y and the patient is over age Z, then flag for cardiovascular risk.
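To make the checklist idea concrete, here is a minimal Python sketch of that kind of rule. The field names and the cutoff values standing in for X, Y, and Z are hypothetical placeholders, not clinical guidance; a real traditional ML model would typically learn its weights from data rather than use hand-set cutoffs.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    systolic_bp: int        # mmHg
    total_cholesterol: int  # mg/dL
    age: int                # years


# Hypothetical cutoffs standing in for X, Y, and Z in the text.
BP_THRESHOLD = 140
CHOLESTEROL_THRESHOLD = 240
AGE_THRESHOLD = 55


def flag_cardiovascular_risk(p: Patient) -> bool:
    """Return True only when every hand-written rule fires."""
    return (
        p.systolic_bp > BP_THRESHOLD
        and p.total_cholesterol > CHOLESTEROL_THRESHOLD
        and p.age > AGE_THRESHOLD
    )


high = Patient(systolic_bp=150, total_cholesterol=260, age=62)
low = Patient(systolic_bp=150, total_cholesterol=180, age=62)
```

Every input here is a tidy number in a named column, which is exactly the kind of data this approach handles well.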

This approach works beautifully for structured, tabular data. It’s why ML has been successfully predicting hospital readmissions and identifying high-risk patients for years. But it hits a wall when confronting the messy reality of most medical data: radiology images, pathology slides, physician notes, genomic sequences, and real-time monitoring streams.

Enter deep learning.

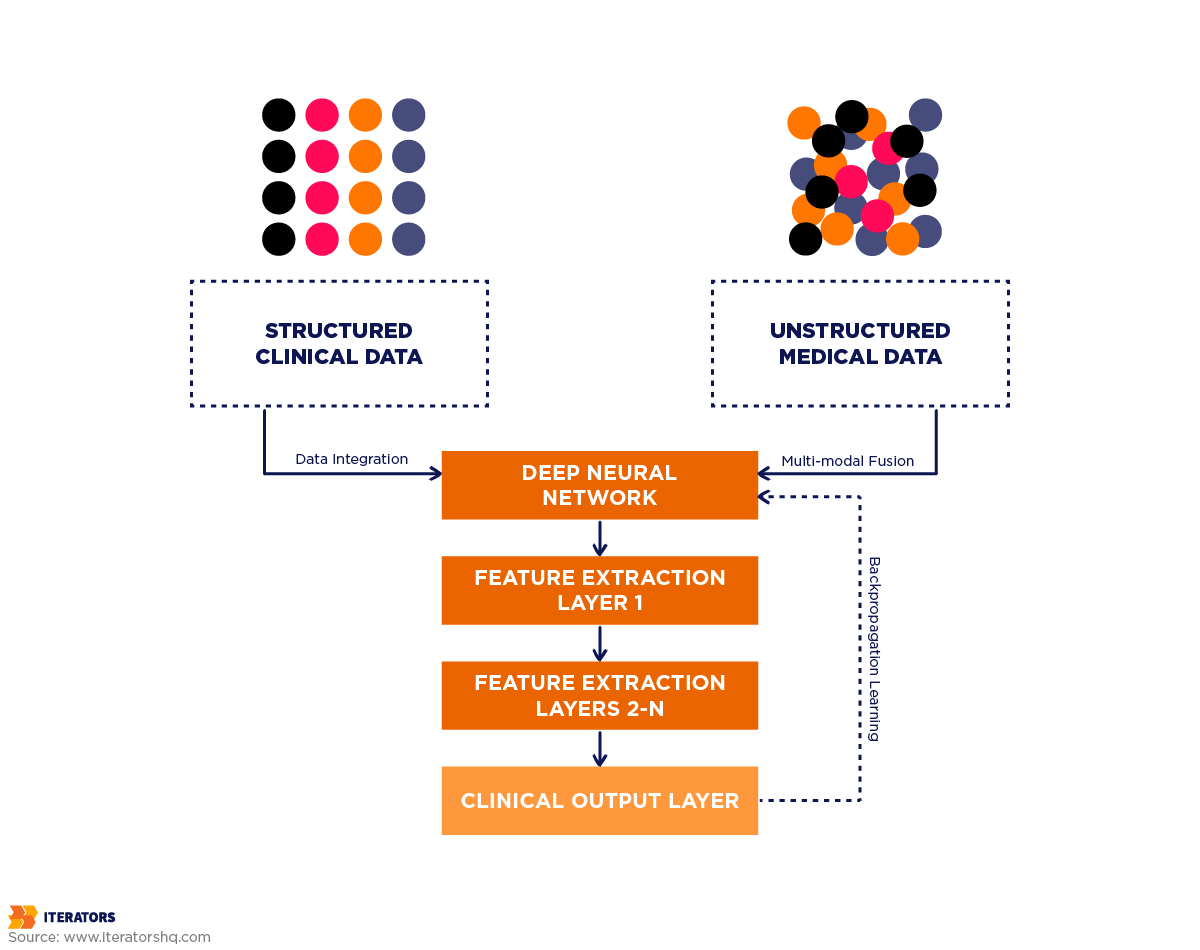

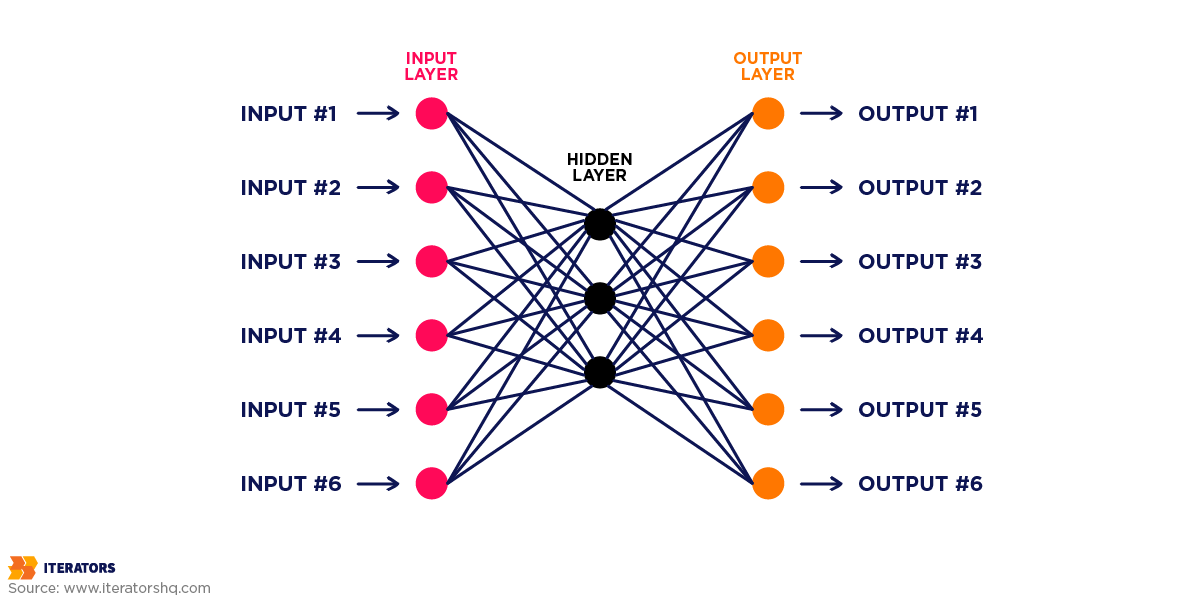

Deep learning uses artificial neural networks—mathematical structures loosely inspired by how neurons connect in the brain—to automatically discover patterns in raw, unstructured data. The “deep” part refers to multiple layers of these artificial neurons, each learning progressively more complex features.

Here’s why this matters in healthcare: When analyzing a chest X-ray for pneumonia, traditional ML requires radiologists to manually define features (“look for this specific opacity pattern in this lung region”). Deep learning systems learn these features automatically by studying thousands of labeled X-rays, often discovering subtle patterns that human experts never explicitly articulated.

The practical difference? Traditional ML might achieve 85% accuracy on a well-defined task with carefully engineered features. Deep learning routinely hits 95%+ on the same task—and can handle vastly more complex problems that would be impossible to manually feature-engineer.

But here’s the catch that vendors conveniently omit: deep learning demands massive amounts of labeled training data, significant computational resources, and—critically—the right problem fit. Deploying a deep learning solution for a task that traditional ML handles perfectly well is like using a nuclear reactor to power a nightlight. Expensive, complicated, and entirely unnecessary.

Why Deep Learning Applications in Healthcare Software Are Exploding in 2025

Three converging forces have transformed deep learning from research curiosity to clinical imperative:

1. The Data Deluge Has Reached Critical Mass

Electronic Health Records (EHRs) have been widely adopted for over a decade now, creating unprecedented data repositories. The average hospital now generates 50 petabytes of data annually—and that’s before adding genomic data (which can reach 100 gigabytes per patient), continuous monitoring streams from ICU equipment, and high-resolution medical imaging.

This data explosion created a paradox: healthcare organizations were data-rich but insight-poor. They had the raw material for transformative analytics but lacked tools to extract value at scale. Deep learning finally provides those tools.

2. Computational Power Became Accessible

Training deep learning models used to require supercomputer-level resources. In 2012, the breakthrough AlexNet image recognition model required a week of training on high-end GPUs. Today, cloud platforms offer on-demand access to even more powerful infrastructure for pennies per hour.

More importantly, specialized AI accelerators (like Google’s TPUs and NVIDIA’s data-center GPUs) have made real-time inference practical. A deep learning model that took 30 seconds to analyze an image in 2015 now delivers results in under a second, fast enough for clinical workflows.

3. The Regulatory Path Cleared

The FDA’s 2021 Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD) Action Plan provided the regulatory clarity the industry desperately needed. Rather than treating each model update as a new device requiring full approval, the framework allows “predetermined change control plans”—essentially pre-approved pathways for iterative improvement.

This regulatory evolution is crucial because deep learning models improve continuously as they process more data. The old regulatory model would have frozen models at their initial (inferior) performance levels. The new approach enables the continuous learning that makes these systems genuinely valuable.

But the real catalyst? COVID-19.

The pandemic forced healthcare to confront its digital infrastructure gaps while simultaneously demonstrating AI’s potential. Systems that could predict patient deterioration, optimize resource allocation, and accelerate drug discovery moved from “nice to have” to “essential for survival.” That mindset shift persists even as the pandemic recedes.

The result: According to McKinsey’s healthcare transformation report, healthcare software AI investment reached $11.8 billion in 2024, with deep learning applications capturing the majority of that capital. More telling, 76% of health systems now have AI initiatives in active development or production—up from just 23% in 2019. Organizations exploring this space need strategic guidance on AI’s role in healthcare management and development.

The question is no longer “Should we explore deep learning?” but rather “Which applications deliver the highest ROI for our specific context?”

Let’s answer that question.

7 High-Impact Deep Learning Applications in Healthcare Software

The landscape of healthcare software is littered with impressive research papers that never translate into clinical practice. We’re focusing exclusively on applications that have demonstrated measurable patient outcomes and economic value in real-world deployments—not just laboratory benchmarks.

1. Early Disease Detection and Diagnosis

The Problem: Many diseases are far more treatable when caught early, but early-stage symptoms are subtle and easily missed. Radiologists reviewing hundreds of scans daily face cognitive fatigue. Screening programs struggle with false positive rates that lead to unnecessary procedures and patient anxiety.

The Deep Learning Solution:

Convolutional Neural Networks (CNNs), a specialized deep learning architecture designed for image analysis, have matched or exceeded specialist performance in detecting specific pathologies from medical images.

Real-World Impact: Cancer Detection

The most compelling validation for deep learning in healthcare software comes from breast cancer screening. A 2020 study published in Nature found that Google Health’s deep learning system reduced false positives by 5.7% and false negatives by 9.4% compared to radiologists in the US setting. In the UK setting, where double-reading is standard, the AI system matched the performance of two radiologists working together.

Think about what those percentages mean in human terms. Suppose roughly 1,000 of 100,000 screened women (about 1%) have cancer, which is the assumption the following figure implies. Under that assumption, the 9.4% reduction in false negatives translates to roughly 94 cancers detected that would otherwise have been missed. That is 94 women who get treatment before their cancer progresses to advanced stages.

The economic impact is equally significant. False positives in mammography lead to additional imaging, biopsies, and patient anxiety. Reducing false positives by 5.7% in that same 100,000-woman population prevents approximately 5,700 unnecessary follow-up procedures, saving roughly $2.8 million in direct costs while reducing patient stress and improving screening program efficiency.
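The back-of-the-envelope figures above can be reproduced in a few lines. This is only a sketch of the arithmetic: the percentages and the savings total come from the text, while the count of 1,000 cancers per 100,000 women is an assumption needed to arrive at the 94 figure.

```python
SCREENED = 100_000
FALSE_POSITIVE_REDUCTION = 0.057   # from the text
FALSE_NEGATIVE_REDUCTION = 0.094   # from the text
TOTAL_SAVINGS_USD = 2_800_000      # from the text

# Assumption (not from the study): about 1% of screened women have cancer.
ASSUMED_CANCERS = 1_000

avoided_follow_ups = round(SCREENED * FALSE_POSITIVE_REDUCTION)
extra_cancers_found = round(ASSUMED_CANCERS * FALSE_NEGATIVE_REDUCTION)

# Cost per avoided follow-up implied by the two figures in the text.
implied_cost_per_follow_up = TOTAL_SAVINGS_USD / avoided_follow_ups
```

The savings estimate therefore implies an average cost of just under $500 per avoided follow-up.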

Diabetic Retinopathy: From Research to Global Deployment

Google’s diabetic retinopathy detection system demonstrates the path from research to real-world impact. The system analyzes retinal photographs to detect diabetic retinopathy—the leading cause of blindness in working-age adults—with sensitivity and specificity exceeding 90%.

But here’s what makes this more than a research curiosity: The system has been deployed in screening programs across India and Thailand, where ophthalmologist shortages make traditional screening impractical. In these settings, the AI system enables primary care physicians and even non-physician screeners to identify patients needing specialist referral, dramatically expanding access to sight-saving care.

The lesson: Deep learning’s highest impact often comes not from replacing expert physicians in resource-rich settings, but from extending expert-level capabilities to underserved populations.

Technical Reality Check:

Training these diagnostic models requires massive, carefully labeled datasets. Google’s breast cancer system was trained on mammograms from roughly 91,000 women. Their diabetic retinopathy model used 128,000 retinal images. Smaller healthcare organizations can’t typically generate datasets of this scale internally.

The practical path forward involves either:

- Partnering with vendors who’ve already trained robust models on large datasets

- Participating in federated learning initiatives that allow model training across multiple institutions without sharing raw patient data

- Fine-tuning pre-trained models on your specific patient population (which requires far less data)
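To illustrate the federated learning option, here is a minimal sketch of weighted model averaging in the style of federated averaging. It assumes each institution trains locally and shares only its model weights and sample count; the names `federated_average`, `hospital_a`, and `hospital_b` are hypothetical, and a real deployment would add secure aggregation and many training rounds.

```python
from typing import Dict, List, Tuple

Weights = Dict[str, List[float]]


def federated_average(updates: List[Tuple[Weights, int]]) -> Weights:
    """Average per-site weights, weighted by each site's sample count."""
    total = sum(n for _, n in updates)
    first_weights = updates[0][0]
    averaged: Weights = {}
    for name, values in first_weights.items():
        averaged[name] = [
            sum(w[name][i] * n for w, n in updates) / total
            for i in range(len(values))
        ]
    return averaged


# Each site shares (weights, number of local training samples), never raw records.
hospital_a = ({"layer1": [0.0, 2.0]}, 100)
hospital_b = ({"layer1": [1.0, 4.0]}, 300)
global_weights = federated_average([hospital_a, hospital_b])
```

The larger site pulls the shared model toward its own weights, yet no patient record ever leaves either institution.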

2. Patient Risk Stratification and Readmission Prediction

The Problem: Hospital readmissions within 30 days cost the US healthcare system $26 billion annually. Medicare penalizes hospitals with high readmission rates, creating financial pressure to identify high-risk patients before discharge. But traditional risk scores (like the LACE index) miss many patients who will deteriorate after leaving the hospital.

The Deep Learning Solution:

Recurrent Neural Networks (RNNs) and their more sophisticated variants (LSTMs and GRUs) excel at analyzing sequential data—perfect for patient timelines where the order and timing of events matter.

These models ingest the complete patient record: demographics, diagnoses, medications, lab results, vital signs, nursing notes, and social determinants of health. Unlike traditional scoring systems that rely on a handful of manually selected variables, deep learning models consider hundreds of factors and their complex interactions.

Real-World Impact: Mount Sinai’s Deep Patient

Mount Sinai Health System developed “Deep Patient,” a deep learning system that analyzes EHR data to predict patient risks across multiple conditions. The system demonstrated impressive performance:

- 93% accuracy predicting severe diabetes (vs. 80% for traditional methods)

- 85% accuracy predicting schizophrenia onset

- 80% accuracy predicting various cancers

But the real innovation isn’t just better prediction—it’s actionable intervention. The system doesn’t just flag high-risk patients; it identifies which specific factors drive their risk, enabling targeted interventions.

For example, a patient flagged for high readmission risk due to medication non-adherence triggers a pharmacy follow-up call and enrollment in a medication management program. A patient at risk due to social factors (unstable housing, food insecurity) gets connected with social services before discharge.
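A minimal sketch of that routing logic might look like the following. The driver names, the intervention table, and the 0.5 threshold are all hypothetical; in practice the drivers would come from the model’s explanation output and the actions from the hospital’s own care protocols.

```python
from typing import Dict, List

# Hypothetical mapping from a model's top risk drivers to follow-up actions.
INTERVENTIONS: Dict[str, str] = {
    "medication_non_adherence": "pharmacy follow-up call",
    "unstable_housing": "social services referral",
    "food_insecurity": "social services referral",
}


def plan_interventions(risk_score: float, drivers: List[str],
                       threshold: float = 0.5) -> List[str]:
    """Return de-duplicated actions for patients at or above the threshold."""
    if risk_score < threshold:
        return []
    actions: List[str] = []
    for driver in drivers:
        action = INTERVENTIONS.get(driver)
        if action and action not in actions:
            actions.append(action)
    return actions
```

A patient with two social risk drivers gets a single social services referral, not two duplicate tasks.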

Economic Impact:

Reducing readmissions by even 10% in a 500-bed hospital saves approximately $2-3 million annually in avoided penalties and reduced care costs. More importantly, it prevents the patient suffering and complications associated with preventable deterioration.

Implementation Reality:

The challenge isn’t building the model—it’s integrating predictions into clinical workflows in ways that actually change behavior. Physicians already suffer from alert fatigue; adding another risk score they’re supposed to check accomplishes nothing.

Successful implementations embed predictions directly into existing workflows:

- Automatic alerts to discharge planners for high-risk patients

- Pre-populated intervention orders based on identified risk factors

- Integration with care management platforms that track follow-up

- Dashboards that let clinical leaders monitor risk patterns across patient populations

3. Personalized Treatment Recommendations

The Problem: The same diagnosis doesn’t mean the same treatment should work for everyone. A breast cancer patient might respond brilliantly to one chemotherapy regimen while experiencing terrible side effects and poor outcomes with another. Traditional treatment protocols use population-level evidence—what works for most patients—but that “most” might not include you.

The Deep Learning Solution:

Deep learning models can analyze a patient’s complete molecular profile (genomics, proteomics, metabolomics), medical history, demographics, and outcomes data from thousands of similar patients to predict which treatments are most likely to work—and which will cause problematic side effects.

This represents the pinnacle of deep learning in healthcare software—precision medicine at scale. Instead of trial-and-error treatment selection, physicians get data-driven recommendations personalized to each patient’s unique biology.

Real-World Impact: Oncology Treatment Selection

IBM Watson for Oncology analyzes patient records against a knowledge base of medical literature, clinical guidelines, and treatment outcomes to recommend personalized cancer treatment plans. While the system faced early criticism for recommendations that sometimes diverged from expert opinion, its value lies in surfacing treatment options that oncologists might not have considered—particularly for rare cancers or complex cases.

More promising results come from academic medical centers using deep learning for treatment response prediction. Memorial Sloan Kettering’s system predicts chemotherapy response in ovarian cancer patients with 80% accuracy, helping oncologists avoid ineffective treatments that would cause side effects without benefit.

Pharmacogenomics: Predicting Drug Response

Deep learning models analyzing genetic variants can predict how patients will metabolize specific medications—crucial for drugs with narrow therapeutic windows where too much causes toxicity and too little provides no benefit.

For example, warfarin dosing varies dramatically between patients due to genetic differences. Traditional dosing algorithms consider a handful of genetic markers. Deep learning models analyzing thousands of genetic variants achieve significantly more accurate dose predictions, reducing the time to therapeutic dose and minimizing bleeding complications.

The Data Challenge:

Personalized treatment prediction requires linking treatment decisions to outcomes—and capturing why specific treatments were chosen. Most EHR systems don’t systematically record clinical reasoning, making it difficult to train models that understand the nuances of treatment selection.

Organizations building these capabilities need to:

- Implement structured clinical decision support that captures reasoning

- Participate in clinical data networks that pool outcomes data across institutions

- Invest in natural language processing to extract insights from unstructured clinical notes

- Build feedback loops that continuously update models as new outcomes data arrives

4. Medical Imaging Analysis and Radiology

The Problem: Radiologists are drowning. The volume of medical imaging has increased 10-fold over the past decade while radiologist supply has remained relatively flat. The average radiologist now interprets one image every 3-4 seconds during an 8-hour shift—a pace that virtually guarantees missed findings.

The Deep Learning Solution:

CNNs can analyze medical images in seconds, flagging potential abnormalities for radiologist review. The key insight: this isn’t about replacing radiologists, it’s about optimizing their cognitive load.

Real-World Impact: Stanford’s CheXNet

Stanford’s CheXNet system analyzes chest X-rays for 14 different pathologies (pneumonia, cardiomegaly, effusions, etc.), and on pneumonia detection its reported performance exceeded the average of four radiologists. The system was trained on 112,120 frontal-view X-ray images.

But the real value emerges in deployment. Rather than providing a simple yes/no diagnosis, the system generates:

- Probability scores for each pathology

- Heat maps highlighting suspicious regions

- Comparison to similar cases in the training set

- Confidence intervals for predictions

This rich output helps radiologists work more efficiently. Instead of scrutinizing every pixel of every image, they can focus attention on the cases and regions the AI flags as concerning. Studies show this approach reduces interpretation time by 30-40% while improving diagnostic accuracy.
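Here is a rough sketch of how per-pathology probability scores could drive a prioritized worklist. It assumes the model emits one raw score (logit) per pathology and that each is converted independently with a sigmoid; the study IDs and logit values are made up for illustration.

```python
import math
from typing import Dict, List, Tuple


def sigmoid(x: float) -> float:
    """Map a raw model score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def prioritize(studies: Dict[str, Dict[str, float]]) -> List[Tuple[str, str, float]]:
    """Rank studies by their most probable finding, highest first."""
    ranked = []
    for study_id, logits in studies.items():
        probs = {name: sigmoid(z) for name, z in logits.items()}
        top = max(probs, key=probs.get)
        ranked.append((study_id, top, probs[top]))
    return sorted(ranked, key=lambda r: r[2], reverse=True)


# Made-up logits for two studies and two of the pathologies.
studies = {
    "scan_001": {"pneumonia": -3.0, "cardiomegaly": -2.0},
    "scan_147": {"pneumonia": 2.0, "cardiomegaly": -1.0},
}
worklist = prioritize(studies)
```

In this toy run, `scan_147` moves to the top of the list with pneumonia as its leading finding, so the radiologist sees it first.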

Beyond Detection: Quantification and Progression

Deep learning excels at tasks that are tedious and time-consuming for humans but critical for treatment planning. For example:

- Tumor volumetrics: Precisely measuring tumor size in 3D from CT or MRI scans to track treatment response

- Organ segmentation: Automatically outlining organs in radiation therapy planning to protect healthy tissue

- Progression tracking: Comparing current and prior scans to quantify disease progression or treatment response

These quantitative analyses used to require 30-60 minutes of manual work by specialized technicians. Deep learning systems complete them in seconds with greater consistency.
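Once a model has produced a segmentation mask, the volumetric step itself is simple arithmetic. The sketch below assumes a binary 3D mask (1 = tumor voxel) and a known voxel spacing; the tiny masks and the 5 mm spacing are hypothetical values chosen to keep the numbers easy to follow.

```python
from typing import List, Tuple

Mask3D = List[List[List[int]]]  # indexed [slice][row][column], 1 = tumor


def tumor_volume_ml(mask: Mask3D, spacing_mm: Tuple[float, float, float]) -> float:
    """Volume = number of tumor voxels x volume of one voxel, in millilitres."""
    voxel_mm3 = spacing_mm[0] * spacing_mm[1] * spacing_mm[2]
    voxels = sum(v for plane in mask for row in plane for v in row)
    return voxels * voxel_mm3 / 1000.0  # 1 mL = 1000 mm^3


def percent_change(prior_ml: float, current_ml: float) -> float:
    """Relative change between two scans, in percent."""
    return (current_ml - prior_ml) / prior_ml * 100.0


prior = [[[1, 1], [1, 1]], [[1, 1], [1, 1]]]    # 8 tumor voxels
current = [[[1, 1], [1, 0]], [[1, 0], [0, 0]]]  # 4 tumor voxels
spacing = (5.0, 5.0, 5.0)  # hypothetical voxel size in mm

prior_ml = tumor_volume_ml(prior, spacing)
current_ml = tumor_volume_ml(current, spacing)
change = percent_change(prior_ml, current_ml)
```

The hard part is the segmentation itself; the measurement and comparison across scans follow directly from the mask.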

Technical Considerations:

Medical imaging AI faces unique challenges:

- Scanner variability: Images from different manufacturers and models have different characteristics. Models trained on GE scanners may perform poorly on Siemens images.

- Annotation quality: Training requires expert radiologist annotations, which are expensive and time-consuming to obtain at scale.

- Rare findings: Pathologies that appear in <1% of scans are difficult to learn from limited examples.

Successful implementations address these through:

- Transfer learning from models pre-trained on large general image datasets

- Data augmentation techniques that artificially expand training sets

- Federated learning across multiple institutions to access diverse scanner types

- Active learning approaches that prioritize labeling of the most informative cases

5. Natural Language Processing for Clinical Documentation

The Problem: Physicians spend 2+ hours on documentation for every hour of direct patient care. Clinical notes contain critical information but in unstructured text that’s difficult to search, analyze, or incorporate into decision support. The result: valuable insights trapped in narrative notes that might as well not exist for analytical purposes.

The Deep Learning Solution:

Transformer-based language models (the same architecture powering ChatGPT) can extract structured information from clinical notes, automatically code diagnoses and procedures, and even generate documentation from voice dictation.

Real-World Impact: Automated Clinical Coding

Clinical coding—translating physician documentation into billing codes (ICD-10, CPT)—is tedious, expensive, and error-prone. Professional coders spend hours reviewing charts to identify billable conditions and procedures.

Deep learning NLP systems achieve 90%+ accuracy on automated coding for common conditions, reducing coding time by 60-70%. More importantly, they catch billable conditions that human coders miss, increasing revenue capture while reducing the documentation burden on physicians.

Extracting Insights from Unstructured Notes

Clinical notes contain information that never makes it into structured EHR fields: treatment response details, symptom progression, medication side effects, patient preferences, and social context.

NLP systems extract this information to:

- Identify patients with specific characteristics for clinical trial recruitment

- Detect adverse drug reactions from symptom descriptions

- Track disease progression through symptom severity mentions

- Identify gaps in care (e.g., recommended follow-up tests that weren’t ordered)

Voice-to-Documentation:

Modern clinical documentation platforms use deep learning speech recognition combined with NLP to generate structured notes from physician dictation. The physician speaks naturally about the patient encounter; the system generates a properly formatted note with appropriate sections, extracts diagnoses and medications, and suggests billing codes.

Early implementations reduced documentation time by 30-40% while improving note completeness and quality.

The Privacy Imperative:

Clinical NLP systems process the most sensitive patient information—detailed symptom descriptions, psychiatric history, substance use, sexual health. This demands:

- HIPAA-compliant infrastructure with encryption at rest and in transit

- Strict access controls and audit logging

- De-identification capabilities that remove protected health information before data leaves secure environments

- On-premise deployment options for organizations that can’t use cloud services for PHI
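To give a feel for the de-identification requirement, here is a deliberately simplistic sketch that masks a few identifier patterns with regular expressions. The patterns and placeholder tags are illustrative assumptions only; production de-identification has to cover many more categories of protected health information (names, addresses, and so on) and needs formal validation.

```python
import re

# Toy patterns only; real de-identification must cover far more PHI
# categories and be validated before data leaves a secure environment.
PATTERNS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"\b\d{3}-\d{3}-\d{4}\b"), "[PHONE]"),
    (re.compile(r"\b\d{1,2}/\d{1,2}/\d{4}\b"), "[DATE]"),
    (re.compile(r"\bMRN[:\s]*\d+\b", re.IGNORECASE), "[MRN]"),
]


def scrub(note: str) -> str:
    """Replace each matched identifier with a placeholder tag."""
    for pattern, placeholder in PATTERNS:
        note = pattern.sub(placeholder, note)
    return note


note = "Seen 03/14/2024, MRN: 448812. Call 555-867-5309 with results."
clean = scrub(note)
```

Rule-based scrubbing like this misses free-text identifiers, which is one reason the vendor and infrastructure reviews matter.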

Organizations implementing clinical NLP need legal review of vendor contracts, security assessments of infrastructure, and clear data governance policies—before processing the first note.

6. Drug Discovery and Development Acceleration

The Problem: Bringing a new drug to market takes 10-15 years and costs $2.6 billion on average. The vast majority of drug candidates fail in clinical trials—often after hundreds of millions have been invested. The traditional approach of screening millions of molecules through laboratory experiments is simply too slow and expensive.

The Deep Learning Solution:

Deep learning models can predict molecular properties, simulate drug-target interactions, and identify promising drug candidates from billions of possibilities—all in silico (on computers) before synthesizing a single molecule in the lab.

Real-World Impact: COVID-19 Vaccine Development

The unprecedented speed of COVID-19 vaccine development—less than a year from virus sequencing to emergency authorization—was enabled in part by AI-driven molecular design. Deep learning models predicted which spike protein variants would generate strong immune responses while remaining stable enough for vaccine manufacturing.

BioNTech used AI to design the mRNA sequence for their vaccine, optimizing for protein expression, immune response, and manufacturing stability simultaneously—a multi-objective optimization problem that would be intractable through traditional methods.

Protein Structure Prediction: AlphaFold’s Revolution

DeepMind’s AlphaFold system solved one of biology’s grand challenges: predicting 3D protein structure from amino acid sequence. This breakthrough accelerates drug discovery by revealing exactly how drug molecules might bind to disease-related proteins.

The impact: What used to take months of laboratory work (crystallizing proteins and analyzing them with X-ray crystallography) now takes hours of computation. AlphaFold has predicted structures for over 200 million proteins—essentially all known proteins—creating an unprecedented resource for drug discovery.

Clinical Trial Optimization:

Deep learning models analyzing electronic health records can identify patients who match complex trial eligibility criteria—accelerating recruitment for clinical trials. They can also predict which patients are most likely to respond to investigational treatments, enabling smaller, more efficient trials.

For rare diseases where patient populations are small, this capability is transformative. Instead of screening thousands of patients to find dozens who qualify, AI pre-screening identifies qualified candidates directly from EHR data.

Economic Impact:

Even modest improvements in drug development efficiency generate massive value. If deep learning tools reduce development timelines by 2 years and increase success rates by 10%, the economic value exceeds $100 billion annually across the pharmaceutical industry.

More importantly, faster development means patients access life-saving treatments years earlier—value that’s difficult to quantify but profoundly meaningful.

The Validation Challenge:

Drug discovery AI faces a unique problem: validation takes years. A model that predicts a molecule will make a good drug candidate can’t be proven right or wrong until that molecule completes clinical trials—5-10 years later.

This delayed feedback loop means:

- Model improvements are slow because you can’t rapidly iterate based on outcomes

- Overfitting to historical data is a constant risk

- Explainability is critical—drug developers need to understand why the model made specific predictions

Organizations deploying drug discovery AI need patience, strong domain expertise to sanity-check predictions, and robust experimental validation pipelines to test promising candidates.

7. Predictive Patient Monitoring and ICU Management

The Problem: Patient deterioration in hospitals often follows a predictable pattern of vital sign changes—but by the time traditional early warning scores trigger alerts, the patient is already critically ill. Intensive care units generate massive streams of monitoring data (heart rate, blood pressure, oxygen saturation, ventilator settings, lab results) but clinicians can’t possibly track all these signals simultaneously for all patients.

The Deep Learning Solution:

Recurrent neural networks analyzing continuous monitoring data can detect subtle patterns that precede clinical deterioration—often hours before traditional alert systems trigger. This enables proactive intervention before patients crash.

Real-World Impact: Sepsis Prediction

Sepsis—the body’s extreme response to infection—kills 270,000 Americans annually and costs $27 billion to treat. Early recognition and treatment dramatically improve outcomes, but sepsis is notoriously difficult to identify in early stages.

Johns Hopkins developed a deep learning system that predicts sepsis onset 12-48 hours before clinical diagnosis by analyzing patterns in vital signs, lab results, and clinical notes. The system achieves 85% sensitivity with 5% false positive rate—far better than traditional screening tools.
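Sensitivity and false positive rate alone don’t tell you how many alerts will be real. The sketch below applies Bayes’ rule to the two figures quoted above; the 2% sepsis prevalence is a hypothetical assumption chosen for illustration, not a number from the deployment.

```python
def positive_predictive_value(sensitivity: float,
                              false_positive_rate: float,
                              prevalence: float) -> float:
    """Share of alerts that are true positives (Bayes' rule)."""
    true_alerts = sensitivity * prevalence
    false_alerts = false_positive_rate * (1.0 - prevalence)
    return true_alerts / (true_alerts + false_alerts)


SENSITIVITY = 0.85          # from the text
FALSE_POSITIVE_RATE = 0.05  # from the text
ASSUMED_PREVALENCE = 0.02   # hypothetical: 2% of monitored patients develop sepsis

ppv = positive_predictive_value(SENSITIVITY, FALSE_POSITIVE_RATE, ASSUMED_PREVALENCE)
```

Under that assumed prevalence, only about a quarter of alerts would correspond to true sepsis cases, which is why alert design matters as much as model accuracy.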

Deployed across Johns Hopkins hospitals, the system reduced sepsis mortality by 18% and decreased sepsis-related costs by $1.5 million annually per hospital. The key: nurses receive alerts with sufficient lead time to start antibiotics and fluid resuscitation before patients deteriorate to septic shock.

Cardiac Arrest Prediction:

Mayo Clinic’s AI system analyzes continuous ECG monitoring to predict cardiac arrest 10-30 minutes before it occurs. This brief warning window allows rapid response teams to reach the patient before arrest, dramatically improving survival rates.

The system identifies subtle ECG changes invisible to human observers—patterns that only emerge when analyzing millions of heartbeats across thousands of patients.

Ventilator Weaning Optimization:

Deep learning models analyzing ventilator data, blood gases, and patient characteristics can predict optimal timing for extubation (removing the breathing tube). Extubating too early leads to reintubation—associated with higher mortality and longer ICU stays. Extubating too late unnecessarily extends ICU time and increases complication risk.

AI-guided weaning protocols reduce time on mechanical ventilation by 20-30% while maintaining safety—freeing ICU beds and reducing ventilator-associated pneumonia.

Implementation Reality:

Predictive monitoring systems face the alert fatigue problem on steroids. ICU staff already deal with dozens of alerts per patient per day, most of them false alarms. AI predictions that are wrong 5-15% of the time risk being ignored as well.

Successful implementations require:

- Tiered alert systems: Only the highest-risk predictions trigger immediate alerts; lower-risk predictions appear in dashboards for periodic review

- Actionable guidance: Alerts include specific recommended interventions, not just “patient at risk”

- Feedback loops: Clinicians can mark alerts as helpful/unhelpful, allowing continuous model improvement

- Integration with workflows: Predictions feed into existing rapid response team protocols rather than creating new workflows
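The tiering and feedback ideas above can be sketched as follows. The cutoff values, tier descriptions, and the in-memory feedback counter are hypothetical; real thresholds would be tuned with clinical staff and feedback would be stored for model retraining.

```python
from enum import Enum
from typing import Dict


class Tier(Enum):
    IMMEDIATE = "alert the rapid response team now"
    DASHBOARD = "list on the dashboard for periodic review"
    NONE = "no alert"


# Hypothetical cutoffs; real values would be tuned with clinical staff.
IMMEDIATE_CUTOFF = 0.80
DASHBOARD_CUTOFF = 0.40


def triage(risk: float) -> Tier:
    """Only the highest-risk predictions interrupt clinicians."""
    if risk >= IMMEDIATE_CUTOFF:
        return Tier.IMMEDIATE
    if risk >= DASHBOARD_CUTOFF:
        return Tier.DASHBOARD
    return Tier.NONE


# Minimal feedback loop: clinicians mark each alert as helpful or not.
feedback: Dict[str, int] = {"helpful": 0, "unhelpful": 0}


def record_feedback(helpful: bool) -> None:
    feedback["helpful" if helpful else "unhelpful"] += 1
```

Raising `IMMEDIATE_CUTOFF` trades fewer interruptions for a higher chance of a late warning, so that value is a clinical decision, not just an engineering one.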

Deep Learning Applications in Healthcare Software: Technical Foundation and Implementation

Understanding the technical architecture isn’t just for developers; it’s essential for anyone making investment decisions about healthcare software. The gap between research demos and production systems is where most projects fail.

Neural Network Architectures for Medical Data

Different types of medical data require different neural network architectures. Using the wrong architecture is like trying to cut steak with a spoon—technically possible but wildly inefficient.

Convolutional Neural Networks (CNNs) for Medical Imaging:

CNNs are specifically designed for image analysis. They work by applying learned filters that detect features at multiple scales—edges and textures in early layers, complex patterns like tumors or fractures in deeper layers.

For medical imaging, CNNs typically use architectures like:

- ResNet: Excellent for general radiology tasks; handles very deep networks (100+ layers) that can learn subtle patterns

- U-Net: Designed for medical image segmentation (outlining organs, tumors); works well with limited training data

- DenseNet: Efficient parameter usage; good for scenarios where computational resources are limited
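To make "learned filters that detect features" concrete, here is a toy sketch of the core operation inside a CNN layer, written in plain Python so it runs without any deep learning library. The 4x4 image and the hand-written vertical-edge filter are assumptions for illustration; in a trained network such as ResNet or U-Net the filter values are learned from data rather than typed in.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid (no padding) 2D cross-correlation, the operation CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Toy 4x4 "image": dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written vertical-edge filter; a trained CNN would learn such weights.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
# Each row of feature_map is [0.0, 2.0, 0.0]: the filter responds only
# where the dark-to-bright boundary sits.
```

Stacking many such filters, with nonlinearities between layers, is what lets deeper layers respond to larger and more complex structures.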

Recurrent Neural Networks (RNNs) for Sequential Data:

RNNs process sequences—perfect for patient timelines where events unfold over time. Variants include:

- LSTMs (Long Short-Term Memory): Can remember patterns over long sequences; ideal for analyzing patient histories spanning months or years

- GRUs (Gated Recurrent Units): Simpler than LSTMs but often perform similarly; faster to train

- Temporal Convolutional Networks: Alternative to RNNs that often train faster; good for real-time monitoring data

Transformers for Clinical Text:

The same architecture powering ChatGPT revolutionized clinical NLP. Transformers excel at understanding context and relationships in text:

- BERT variants (BioBERT, ClinicalBERT): Pre-trained on medical literature and clinical notes; excellent for extracting information from EHRs

- GPT variants: Can generate clinical documentation, summarize patient histories, answer clinical questions

Graph Neural Networks for Molecular Data:

Drugs and proteins are naturally represented as graphs (atoms as nodes, bonds as edges). Graph neural networks learn molecular properties by analyzing these graph structures—critical for drug discovery applications.
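A rough sketch of the idea, assuming a deliberately simplified form of message passing (plain neighbor averaging with no learned weights): each atom updates its feature vector from its bonded neighbors, then a readout step sums the atoms into one vector for the whole molecule. The one-hot features and the ethanol example are illustrative choices, not taken from any particular drug discovery pipeline.

```python
from typing import Dict, List

Features = Dict[int, List[float]]


def message_pass(features: Features, neighbors: Dict[int, List[int]]) -> Features:
    """One round of message passing: average each atom with its bonded neighbors."""
    updated = {}
    for node, own in features.items():
        group = [own] + [features[n] for n in neighbors[node]]
        updated[node] = [sum(vals) / len(group) for vals in zip(*group)]
    return updated


def readout(features: Features) -> List[float]:
    """Sum node features into one fixed-size vector for the whole molecule."""
    return [sum(vals) for vals in zip(*features.values())]


# Heavy atoms of ethanol (C-C-O); features are one-hot [is_carbon, is_oxygen].
atom_features = {0: [1.0, 0.0], 1: [1.0, 0.0], 2: [0.0, 1.0]}
bonds = {0: [1], 1: [0, 2], 2: [1]}

updated = message_pass(atom_features, bonds)
molecule_vector = readout(updated)
```

After one round, the middle carbon's vector already reflects that it is bonded to an oxygen. Real graph neural networks replace the plain average with learned transformations and repeat the step several times.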

Training Data Requirements and Quality Considerations

The dirty secret of deep learning: you need massive amounts of labeled data. “Massive” in healthcare software typically means:

- Diagnostic imaging: 10,000-100,000 labeled images per pathology

- Clinical NLP: 50,000-500,000 annotated notes

- Risk prediction: 100,000+ patient records with outcome labels

- Drug discovery: Millions of molecules with measured properties

But quantity alone isn’t enough. Data quality determines model quality:

Labeling Quality:

Medical data labeling requires expert clinicians—expensive and time-consuming. A single radiologist might label 20-30 images per hour; training a production imaging model could require 2,000-3,000 hours of expert time.

Quality issues include:

- Inter-rater disagreement: Different experts label the same case differently

- Incomplete labeling: Subtle findings missed during labeling

- Systematic bias: Labels reflect the biases and blind spots of the labeling team

Solutions:

- Multiple expert labels per case with adjudication for disagreements

- Active learning to prioritize labeling of the most informative cases

- Regular quality audits with re-labeling of samples
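The first of these, multiple labels with adjudication, might look like the sketch below. The strict-majority rule, the case IDs, and the `adjudicate` function are assumptions for illustration; real labeling programs often use more nuanced agreement criteria.

```python
from collections import Counter
from typing import Dict, List, Tuple


def adjudicate(
    labels_by_case: Dict[str, List[str]]
) -> Tuple[Dict[str, str], List[str]]:
    """Return consensus labels plus the cases that need expert adjudication.

    A case is accepted when a strict majority of readers agree; otherwise
    it is queued for a senior reader to resolve.
    """
    consensus = {}
    needs_review = []
    for case_id, labels in labels_by_case.items():
        top_label, votes = Counter(labels).most_common(1)[0]
        if votes * 2 > len(labels):
            consensus[case_id] = top_label
        else:
            needs_review.append(case_id)
    return consensus, needs_review


reads = {
    "xr-001": ["pneumonia", "pneumonia", "normal"],
    "xr-002": ["normal", "pneumonia"],
    "xr-003": ["normal", "normal", "normal"],
}
consensus, needs_review = adjudicate(reads)
# consensus covers xr-001 and xr-003; xr-002 (a 1-1 split) goes to review.
```

Tracking how often cases land in `needs_review` also gives a rough running measure of inter-rater disagreement.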

Data Diversity:

Models trained on homogeneous data perform poorly on different populations. A model trained exclusively on chest X-rays from one hospital might fail at another hospital with different patient demographics or scanner equipment.

Diversity requirements:

- Demographic diversity: Representation across age, sex, race, ethnicity

- Equipment diversity: Different scanner manufacturers and models

- Disease diversity: Full spectrum of disease severity and presentation

- Temporal diversity: Data spanning multiple years to capture practice evolution

Data Leakage Prevention:

Subtle data leakage—where training data inadvertently contains information about test outcomes—creates models that perform brilliantly in development but fail in production.

Common sources:

- Using future information to predict past events (e.g., discharge medications to predict admission diagnosis)

- Including the same patient in training and test sets

- Using data generated after the prediction point

Rigorous temporal validation (training on older data, testing on newer data) catches most leakage issues before deployment.
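A minimal sketch of such a split, assuming each record carries a patient ID and an admission date (the `Encounter` type and the cutoff date are hypothetical). It trains on encounters before the cutoff and tests on later ones, and it also drops test encounters for patients already seen in training, which addresses the same-patient leak listed above.

```python
from datetime import date
from typing import List, NamedTuple, Tuple


class Encounter(NamedTuple):
    patient_id: str
    admit_date: date


def temporal_patient_split(
    encounters: List[Encounter], cutoff: date
) -> Tuple[List[Encounter], List[Encounter]]:
    """Train on older data, test on newer data, with no patient in both sets."""
    train = [e for e in encounters if e.admit_date < cutoff]
    train_patients = {e.patient_id for e in train}
    test = [
        e for e in encounters
        if e.admit_date >= cutoff and e.patient_id not in train_patients
    ]
    return train, test


encounters = [
    Encounter("A", date(2021, 3, 1)),
    Encounter("B", date(2022, 6, 15)),
    Encounter("A", date(2023, 2, 1)),   # same patient as a training row
    Encounter("C", date(2023, 5, 10)),
]
train, test = temporal_patient_split(encounters, cutoff=date(2023, 1, 1))
# train holds patients A and B; test holds only patient C.
```

The split does not, by itself, stop features from peeking past the prediction point. That still requires checking when each input field was actually recorded.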

Model Validation and Clinical Testing Standards

AI healthcare software can’t launch with the “move fast and break things” Silicon Valley ethos. Breaking things means harming patients.

Internal Validation:

Before external testing, models must demonstrate robust performance across multiple dimensions:

- Discrimination: Can the model distinguish between positive and negative cases? (Measured by AUROC, sensitivity, specificity)

- Calibration: Are the model’s probability estimates accurate? (A case predicted at 80% risk should actually have ~80% probability)

- Fairness: Does performance vary across demographic groups? Disparities indicate bias.

- Robustness: How does performance degrade with missing data, unusual cases, or distribution shifts?
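The discrimination and calibration checks above can be computed with very little code. The sketch below uses plain Python and a six-patient toy dataset invented for illustration; it assumes both classes are present, and a production evaluation would use a tested statistics library and far more data.

```python
from typing import List, Tuple


def sensitivity_specificity(
    y_true: List[int], y_prob: List[float], threshold: float = 0.5
) -> Tuple[float, float]:
    """Sensitivity and specificity at a fixed decision threshold."""
    tp = fn = tn = fp = 0
    for y, p in zip(y_true, y_prob):
        predicted_positive = p >= threshold
        if y == 1:
            tp += predicted_positive
            fn += not predicted_positive
        else:
            fp += predicted_positive
            tn += not predicted_positive
    return tp / (tp + fn), tn / (tn + fp)


def auroc(y_true: List[int], y_prob: List[float]) -> float:
    """Probability that a random positive case outranks a random negative one."""
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def calibration_gap(y_true: List[int], y_prob: List[float]) -> float:
    """Mean predicted risk minus observed event rate (0 means well calibrated overall)."""
    return sum(y_prob) / len(y_prob) - sum(y_true) / len(y_true)


y_true = [1, 1, 0, 0, 1, 0]
y_prob = [0.9, 0.8, 0.3, 0.6, 0.4, 0.1]

sens, spec = sensitivity_specificity(y_true, y_prob)  # 2/3 and 2/3
auc = auroc(y_true, y_prob)                           # 8/9
gap = calibration_gap(y_true, y_prob)
```

Running the same three functions separately on each demographic subgroup is a simple first pass at the fairness check.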

External Validation:

The real test: how does the model perform on completely new data from different institutions?

External validation often reveals 10-20% performance drops compared to internal testing—sometimes more. This “generalization gap” reflects overfitting to training data quirks.

Successful models maintain >80% of internal performance when externally validated. Models with larger generalization gaps need more diverse training data or architectural changes.

Clinical Validation:

The ultimate test: does the model improve patient outcomes in real clinical use?

This requires prospective studies where:

- Clinicians use the model in actual practice

- Patient outcomes are tracked and compared to control groups

- Workflow integration and usability are assessed

- Unintended consequences are monitored

Only after successful clinical validation should models be considered for broad deployment.

Regulatory Standards:

The FDA’s Software as a Medical Device framework defines validation requirements based on risk level:

- High risk (e.g., autonomous diagnostic systems): Requires clinical trials demonstrating safety and efficacy

- Moderate risk (e.g., decision support tools): Requires validation studies showing performance matches or exceeds existing methods

- Low risk (e.g., administrative automation): Minimal validation requirements

Even for lower-risk applications, following FDA guidance builds credibility and reduces liability risk.

Compliance for Deep Learning Applications in Healthcare Software: HIPAA and Security

Deep learning applications in healthcare software operate in the most regulated data environment imaginable. Compliance isn’t optional—it’s the foundation everything else builds on.

Building HIPAA-Compliant Deep Learning Applications in Healthcare Software

HIPAA (Health Insurance Portability and Accountability Act) sets strict requirements for protecting patient health information, as outlined by HHS.gov. Civil fines can reach $1.5 million per violation category per year (a cap that is adjusted periodically for inflation), with the highest tier reserved for willful neglect, and knowingly obtaining or disclosing PHI can carry criminal penalties.

Technical Safeguards:

HIPAA requires “appropriate administrative, physical, and technical safeguards” to protect ePHI (electronic Protected Health Information). For deep learning systems, this means:

Encryption:

- Data at rest: AES-256 encryption for all stored data

- Data in transit: TLS 1.3 for all network communications

- Encryption key management: Hardware security modules (HSMs) or cloud KMS with strict access controls

Access Controls:

- Role-based access control (RBAC) limiting data access to authorized users

- Multi-factor authentication for all system access

- Audit logging of all data access and model training activities

- Automatic session timeouts and account lockouts
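As a rough sketch of how role-based access control and audit logging fit together, the snippet below checks a requested action against a role's permissions and records every attempt, allowed or not. The role names, permission strings, and in-memory `audit_log` list are assumptions for illustration; a real system would back these with an identity provider and tamper-resistant log storage.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set

# Hypothetical role-to-permission map for a model development environment.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "clinician": {"read_phi"},
    "ml_engineer": {"read_deidentified", "train_model"},
    "auditor": {"read_audit_log"},
}

# In-memory stand-in for an append-only audit store.
audit_log: List[dict] = []


def check_access(user: str, role: str, action: str) -> bool:
    """Return whether the role permits the action, logging the attempt either way."""
    allowed = action in ROLE_PERMISSIONS.get(role, set())
    audit_log.append({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "action": action,
        "allowed": allowed,
    })
    return allowed
```

Denied attempts are logged as well as successful ones, since a pattern of denials is often the more interesting signal during a security review.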

Infrastructure Security:

- Network segmentation isolating PHI from public networks

- Intrusion detection and prevention systems

- Regular vulnerability scanning and penetration testing

- Disaster recovery and backup systems with tested restoration procedures

Cloud Deployment Considerations:

Cloud platforms (AWS, Google Cloud, Azure) offer HIPAA-eligible services—but “eligible” doesn’t mean “automatically compliant.”

Requirements:

- Sign Business Associate Agreement (BAA) with cloud provider

- Use only HIPAA-eligible services (not all cloud services qualify)

- Enable all required security features (encryption, logging, access controls)

- Maintain documentation of security configurations

- Regular security assessments and compliance audits

On-Premise vs. Cloud:

Some healthcare organizations refuse to put PHI in the cloud, period. This requires:

- On-premise GPU infrastructure for model training and inference

- Dedicated IT staff for infrastructure maintenance and security

- Significant capital investment in hardware

- Longer deployment timelines

The trade-off: greater control and perceived security vs. higher costs and reduced agility.

For most organizations, cloud deployment with proper safeguards provides better security than on-premise infrastructure—cloud providers invest far more in security than typical healthcare IT departments can match.

Data Privacy and De-identification Strategies

Training deep learning models requires large datasets, but sharing PHI across institutions for model development raises privacy concerns.

De-identification:

HIPAA’s Safe Harbor method requires removing 18 types of identifiers:

- Names, addresses (except state), dates (except year)

- Phone/fax numbers, email addresses, SSNs

- Medical record numbers, health plan beneficiary numbers, account numbers

- Certificate/license numbers, vehicle identifiers

- Device identifiers, URLs, IP addresses

- Biometric identifiers, photos

- Any other unique identifying information

But here’s the problem: removing all this information can reduce data utility for model training. Dates are particularly problematic—temporal relationships are often critical for predictive models.
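The trade-off is easy to see in a small sketch. The function below drops a handful of direct-identifier fields and reduces dates to the year, in the spirit of Safe Harbor. The field names are hypothetical, the identifier set is only a subset of the 18 categories, and the sketch does not handle free-text notes, ZIP codes, or the special rule for ages over 89, so it should not be read as a compliant implementation.

```python
from datetime import date
from typing import Any, Dict

# Illustrative subset of direct identifiers; field names are hypothetical.
DIRECT_IDENTIFIER_FIELDS = {
    "name", "street_address", "phone", "email", "ssn", "mrn", "ip_address",
}
DATE_FIELDS = {"birth_date", "admit_date", "discharge_date"}


def deidentify(record: Dict[str, Any]) -> Dict[str, Any]:
    """Drop direct identifiers and keep only the year of each date field."""
    cleaned: Dict[str, Any] = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIER_FIELDS:
            continue
        if field in DATE_FIELDS and isinstance(value, date):
            cleaned[field.replace("_date", "_year")] = value.year
        else:
            cleaned[field] = value
    return cleaned


record = {
    "name": "Jane Doe",
    "mrn": "12345",
    "admit_date": date(2023, 3, 14),
    "discharge_date": date(2023, 3, 19),
    "state": "MN",
    "diagnosis_code": "J18.9",
}
cleaned = deidentify(record)
```

In the cleaned record both dates collapse to 2023, so the five-day length of stay is no longer recoverable. That is exactly the kind of temporal signal predictive models tend to need.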

Expert Determination:

HIPAA’s alternative: an expert determines that the risk of re-identification is “very small” and documents the methods used. This allows retaining more information (like dates) if the expert can demonstrate low re-identification risk.

This requires:

- Statistical analysis of re-identification risk

- Documentation of all de-identification methods

- Expert certification of low risk

- Ongoing monitoring as new data sources emerge

Synthetic Data:

An emerging approach: generate synthetic patient data that preserves statistical properties of real data without containing actual patient information.

Deep learning models (GANs, variational autoencoders) can generate synthetic medical images or EHR records that look realistic but don’t correspond to any real patient.

Benefits:

- Can be shared freely without privacy concerns

- Unlimited quantity for model training

- Can oversample rare conditions to improve model performance

Limitations:

- Synthetic data may not capture all real-world complexity

- Models trained on synthetic data still need validation on real data

- Quality of synthetic data depends on quality of generation models

Federated Learning:

The most promising approach for multi-institutional collaboration: train models across multiple sites without sharing raw data.

How it works:

- Each institution trains a model on their local data

- Only model updates (not data) are shared with a central server

- Central server aggregates updates to improve a global model

- Updated global model is distributed back to institutions

- Process repeats iteratively
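The aggregation step in that loop can be sketched as a weighted average of each site's model weights, in the style of the federated averaging (FedAvg) algorithm. The text above does not name a specific aggregation rule, so treat this choice, the two-parameter "model", and the site sample counts as assumptions for illustration.

```python
from typing import List, Sequence, Tuple


def federated_average(
    site_updates: Sequence[Tuple[List[float], int]]
) -> List[float]:
    """Combine per-site weights into global weights, weighted by local sample count.

    Each element of site_updates is (weights, number_of_local_samples).
    Only these weights leave each institution; the patient data does not.
    """
    total_samples = sum(n for _, n in site_updates)
    dim = len(site_updates[0][0])
    global_weights = [0.0] * dim
    for weights, n in site_updates:
        for i, w in enumerate(weights):
            global_weights[i] += w * n / total_samples
    return global_weights


# Two hospitals with different amounts of local data.
hospital_a = ([0.2, 1.0], 100)
hospital_b = ([0.4, 0.0], 300)

global_model = federated_average([hospital_a, hospital_b])
# Approximately [0.35, 0.25]: hospital B counts three times as much as A.
```

Weighting by sample count keeps a small site from pulling the global model as hard as a large one, though it also means large institutions dominate unless that is corrected for.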

Benefits:

- No raw data sharing—each institution’s data stays behind their firewall

- Models benefit from diverse data across institutions

- Meets most institutional review board (IRB) requirements

Challenges:

- Requires significant coordination across institutions

- Technical complexity in handling heterogeneous data formats

- Communication overhead for model update sharing

- Potential for model updates to leak information about training data

Addressing Bias and Ensuring Equitable AI

AI healthcare software systems can perpetuate and amplify existing healthcare disparities if not carefully designed.

Sources of Bias:

Training Data Bias: If training data underrepresents certain populations, models perform poorly for those groups. For example:

- Dermatology AI trained primarily on light skin performs poorly on dark skin

- Models trained on data from academic medical centers may not generalize to community hospitals

- Algorithms developed in high-resource settings may fail in low-resource environments

Label Bias: Human labelers bring their own biases. Studies show that:

- Black patients’ pain is systematically underestimated in clinical documentation

- Women’s cardiac symptoms are more likely to be attributed to anxiety

- Rare diseases are underdiagnosed in populations where they’re not expected

Models trained on biased labels learn to replicate those biases.

Measurement Bias: Some medical measurements are less accurate for certain populations:

- Pulse oximeters overestimate oxygen saturation in patients with dark skin

- Spirometry reference ranges were historically based on white populations

- Some lab tests have different normal ranges by race

Using biased measurements as model inputs or outputs propagates bias.

Mitigation Strategies:

Diverse Training Data: Actively ensure training data represents the full diversity of patients the model will serve:

- Track demographic composition of training data

- Oversample underrepresented groups

- Collect additional data from diverse sites if needed

Fairness Metrics: Evaluate model performance separately for different demographic groups:

- Compare sensitivity, specificity, and calibration across groups

- Test for disparate impact (do similar patients get different predictions based on protected characteristics?)

- Monitor fairness metrics in production, not just development
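A minimal version of the per-group comparison, using sensitivity as the metric: compute it separately for each group and report the largest gap. The group labels and the eight-patient toy data are invented for illustration, and what size of gap counts as acceptable is a policy decision this sketch does not make.

```python
from collections import defaultdict
from typing import Dict, List


def sensitivity_by_group(
    y_true: List[int], y_pred: List[int], groups: List[str]
) -> Dict[str, float]:
    """Sensitivity (true positive rate) computed separately for each group."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0})
    for y, pred, group in zip(y_true, y_pred, groups):
        if y == 1:
            counts[group]["tp" if pred == 1 else "fn"] += 1
    return {g: c["tp"] / (c["tp"] + c["fn"]) for g, c in counts.items()}


def max_gap(metric_by_group: Dict[str, float]) -> float:
    """Largest difference in the metric between any two groups."""
    values = list(metric_by_group.values())
    return max(values) - min(values)


y_true = [1, 1, 1, 1, 0, 0, 1, 1]
y_pred = [1, 1, 1, 0, 0, 1, 1, 0]
groups = ["A", "A", "A", "A", "A", "B", "B", "B"]

per_group = sensitivity_by_group(y_true, y_pred, groups)  # A: 0.75, B: 0.5
gap = max_gap(per_group)                                  # 0.25
```

The same pattern extends to specificity and calibration, and the function can run on production predictions on a schedule rather than only at development time.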

Algorithmic Fairness Techniques:

- Adversarial debiasing: Train models to make accurate predictions while being unable to predict protected characteristics

- Calibration constraints: Ensure probability estimates are equally accurate across groups

- Threshold optimization: Use different decision thresholds for different groups to equalize false positive/negative rates

Transparency and Explainability: Document potential biases and limitations clearly:

- Report demographic composition of training and test data

- Disclose known performance disparities

- Provide guidance on appropriate use cases and populations

- Enable clinicians to understand why specific predictions were made

Ongoing Monitoring: Bias can emerge over time as patient populations or clinical practices change:

- Continuous monitoring of performance across demographic groups

- Regular fairness audits

- Mechanisms for users to report suspected bias

- Processes for investigating and addressing identified disparities

Implementation Challenges for Deep Learning in Healthcare Software

The gap between “we built a model that works in the lab” and “we deployed a model that improves patient care” is where most AI healthcare software projects die. Let’s talk about why—and how to avoid becoming another statistic.

Data Integration and Interoperability Issues

Healthcare data is a mess. It’s scattered across incompatible systems, stored in dozens of formats, and often impossible to link across sources.

The EHR Fragmentation Problem:

The average hospital uses 16 different EHR systems across departments. A single patient encounter might generate data in:

- Core EHR (Epic, Cerner, etc.)

- Radiology PACS (picture archiving system)

- Laboratory information system

- Pharmacy system

- Billing system

- Specialized departmental systems (cardiology, oncology, etc.)

Each system speaks a different data dialect. Integrating them requires:

HL7 and FHIR Standards:

- HL7 v2: Legacy standard still widely used; message-based, difficult to parse

- HL7 v3: More structured but complex; limited adoption

- FHIR (Fast Healthcare Interoperability Resources): Modern REST API standard; growing adoption but not universal

Reality check: Even with standards, implementations vary wildly. “FHIR-compliant” systems often interpret the standard differently, requiring custom integration work.
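For a sense of what working with FHIR data involves, here is a small sketch that pulls a few fields out of an Observation resource. The sample JSON is hand-written for illustration (the patient reference is made up), and `extract_observation` is a hypothetical helper. It reads every field defensively with `.get`, precisely because real systems populate these resources inconsistently.

```python
import json
from typing import Any, Dict, Optional

LOINC_SYSTEM = "http://loinc.org"

# Hand-written sample resource; 8867-4 is the LOINC code for heart rate.
SAMPLE_OBSERVATION = """
{
  "resourceType": "Observation",
  "status": "final",
  "code": {
    "coding": [
      {"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}
    ]
  },
  "subject": {"reference": "Patient/example-123"},
  "effectiveDateTime": "2024-01-15T08:30:00Z",
  "valueQuantity": {"value": 72, "unit": "beats/minute"}
}
"""


def extract_observation(resource: Dict[str, Any]) -> Dict[str, Any]:
    """Flatten a FHIR Observation into a row suitable for a feature table."""
    if resource.get("resourceType") != "Observation":
        raise ValueError("expected a FHIR Observation resource")
    codings = resource.get("code", {}).get("coding", [])
    loinc_code: Optional[str] = next(
        (c.get("code") for c in codings if c.get("system") == LOINC_SYSTEM),
        None,
    )
    quantity = resource.get("valueQuantity", {})
    return {
        "patient_ref": resource.get("subject", {}).get("reference"),
        "loinc_code": loinc_code,
        "value": quantity.get("value"),
        "unit": quantity.get("unit"),
        "effective": resource.get("effectiveDateTime"),
    }


row = extract_observation(json.loads(SAMPLE_OBSERVATION))
```

A missing LOINC coding or a value stored somewhere other than `valueQuantity` yields `None` here rather than a crash, which leaves the decision about how to handle it to the downstream data quality checks.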

Data Mapping and Normalization:

Different systems use different:

- Medical terminologies (ICD-10, SNOMED CT, LOINC, RxNorm)

- Units of measurement (metric vs. imperial)

- Date/time formats

- Missing data conventions

Building robust deep learning systems requires:

- Terminology mapping services (e.g., UMLS Metathesaurus)

- Unit conversion and normalization

- Standardized missing data handling

- Data quality checks to catch mapping errors
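A small sketch of what unit normalization and missing-data handling can look like in practice. The sentinel values, the supported units, and the plausibility range are assumptions chosen for the example; each source system will have its own conventions that need to be discovered and documented.

```python
from typing import Optional

LB_TO_KG = 0.45359237  # exact by the international definition of the pound

# Hypothetical ways different source systems encode "no value".
MISSING_SENTINELS = {"", "na", "n/a", "unknown", "-999"}


def parse_numeric(raw: object) -> Optional[float]:
    """Convert a raw field to float, mapping known missing-data markers to None."""
    if raw is None:
        return None
    text = str(raw).strip().lower()
    if text in MISSING_SENTINELS:
        return None
    return float(text)


def weight_to_kg(value: float, unit: str) -> float:
    """Normalize a body weight to kilograms."""
    unit = unit.strip().lower()
    if unit == "kg":
        return value
    if unit in ("lb", "lbs"):
        return value * LB_TO_KG
    raise ValueError(f"unsupported weight unit: {unit!r}")


def temperature_to_celsius(value: float, unit: str) -> float:
    """Normalize a temperature to degrees Celsius."""
    unit = unit.strip().upper()
    if unit == "C":
        return value
    if unit == "F":
        return (value - 32.0) * 5.0 / 9.0
    raise ValueError(f"unsupported temperature unit: {unit!r}")


def plausible_adult_weight(kg: float) -> bool:
    """Crude range check that flags obviously wrong values, e.g. grams stored as kg."""
    return 20.0 <= kg <= 350.0
```

Raising on an unknown unit, rather than guessing, is a deliberate choice: a silent wrong conversion is much harder to find later than a failed pipeline run.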

Real-Time vs. Batch Integration:

Some AI applications need real-time data:

- ICU monitoring systems

- Sepsis prediction

- Diagnostic decision support

Others can work with batch updates:

- Population health analytics

- Readmission risk scoring

- Research applications

Real-time integration is far more complex than batch:

- Requires HL7 interface engines or FHIR APIs

- Must handle system downtime gracefully

- Needs low-latency data pipelines

- Demands robust error handling

Start with batch integration to prove value, then invest in real-time capabilities if needed.

The Data Warehouse Solution:

Most successful AI healthcare software implementations build on a clinical data warehouse:

- Centralized repository pulling data from all source systems

- Standardized data model (e.g., OMOP Common Data Model)

- Regular ETL processes to keep data current

- Data quality checks and validation rules

Building a clinical data warehouse is a 6-12 month project requiring:

- Data engineers familiar with healthcare data

- Clinical informaticists to validate data quality

- IT coordination with all source system owners

- Significant infrastructure investment

But it pays dividends: once built, the data warehouse supports all AI initiatives plus clinical research, quality improvement, and population health.

Clinician Adoption and Change Management

The best AI model in the world delivers zero value if clinicians ignore it.

Why Clinicians Resist AI:

Workflow Disruption: Physicians are overwhelmed. Adding another system to check—no matter how valuable—feels like one more burden.

Trust Deficit: Early clinical decision support systems cried wolf constantly, training clinicians to ignore alerts. AI systems inherit this skepticism.

Black Box Anxiety: Physicians are taught to understand their reasoning. “The AI says so” isn’t satisfying—especially when they’re legally liable for outcomes.

Job Threat Perception: Whether rational or not, some clinicians fear AI will replace them. Resistance becomes self-preservation.

Overcoming Resistance:

Involve Clinicians Early: Don’t build AI in isolation then spring it on users. Instead:

- Include clinicians on development teams

- Conduct user research to understand workflows

- Test prototypes with real users early and often

- Incorporate feedback before finalizing designs

Demonstrate Clear Value: Show, don’t tell:

- Pilot with early adopters who become champions

- Share success stories and outcome data

- Quantify time savings and quality improvements

- Make benefits tangible and personal

Integrate Seamlessly: Reduce friction to near-zero:

- Embed AI outputs in existing workflows

- Minimize clicks required to access insights

- Provide mobile access for on-the-go use

- Make AI optional initially—let value drive adoption

Build Trust Through Transparency: Help clinicians understand AI reasoning:

- Provide explanations for predictions (feature importance, similar cases)

- Show confidence levels—let clinicians know when AI is uncertain

- Enable feedback—let users mark predictions as helpful/unhelpful

- Share performance metrics—be transparent about accuracy

Address the Job Threat: Reframe AI as augmentation, not replacement:

- Emphasize how AI handles tedious work, freeing clinicians for patient interaction

- Highlight cases where AI catches things humans miss—frame as safety net

- Involve clinicians in quality oversight of AI systems

- Invest in training clinicians to work effectively with AI

Change Management Process:

Successful implementations follow structured change management:

- Stakeholder Analysis: Identify champions, resisters, and fence-sitters

- Communication Plan: Regular updates on progress, benefits, and timeline

- Training Program: Hands-on training before launch, ongoing support after

- Phased Rollout: Start with enthusiastic early adopters, expand gradually

- Feedback Mechanisms: Regular check-ins, surveys, and opportunities to voice concerns

- Continuous Improvement: Rapid iteration based on user feedback

Infrastructure and Computational Requirements

Deep learning is computationally expensive. Training large models requires specialized hardware; running inference at scale demands robust infrastructure.

Training Infrastructure:

GPU Requirements:

- Modern deep learning models require GPUs (Graphics Processing Units) or specialized AI accelerators

- Training a production medical imaging model: 4-8 high-end GPUs for 1-2 weeks

- Training large language models for clinical NLP: 16-64 GPUs for weeks to months

- Cost: $1.50-$3.00 per GPU-hour in cloud; $10,000-$15,000 per GPU for on-premise
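Plugging the figures above into a quick calculation shows what they imply. This sketch assumes the imaging-model range of 4-8 GPUs for 1-2 weeks and the quoted cloud and hardware prices; it ignores power, cooling, staffing, storage, and depreciation, all of which would push the on-premise break-even later.

```python
def training_run_cost(gpus: int, days: float, rate_per_gpu_hour: float) -> float:
    """Cloud cost of one training run, assuming GPUs are billed around the clock."""
    return gpus * days * 24 * rate_per_gpu_hour


def break_even_gpu_hours(purchase_price: float, rate_per_gpu_hour: float) -> float:
    """GPU-hours of cloud usage that cost as much as buying one GPU outright."""
    return purchase_price / rate_per_gpu_hour


# One medical imaging training run in the cloud.
low_run = training_run_cost(gpus=4, days=7, rate_per_gpu_hour=1.50)    # 1008.0
high_run = training_run_cost(gpus=8, days=14, rate_per_gpu_hour=3.00)  # 8064.0

# When does buying a GPU pay for itself, hardware price only?
fastest_break_even = break_even_gpu_hours(10_000, 3.00)  # about 3,333 hours
slowest_break_even = break_even_gpu_hours(15_000, 1.50)  # 10,000 hours
```

On these numbers a single imaging training run costs roughly $1,000 to $8,000 in the cloud, and an owned GPU needs somewhere between 3,300 and 10,000 hours of use before the hardware alone pays for itself.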

Cloud vs. On-Premise:

Cloud training:

- Pros: Pay only for what you use, easy to scale, no hardware maintenance

- Cons: Ongoing costs, data transfer overhead, HIPAA compliance complexity

- Best for: Organizations without existing GPU infrastructure, variable workloads

On-premise training:

- Pros: No ongoing cloud costs, full data control, potentially better HIPAA compliance

- Cons: Large upfront investment, hardware becomes obsolete, requires specialized IT staff

- Best for: Organizations with consistent training workloads, strict data residency requirements

Inference Infrastructure:

Inference (using trained models to make predictions) is less computationally intensive than training but must happen in real-time for many applications.

Latency Requirements:

- Diagnostic decision support: <2 seconds

- Real-time monitoring alerts: <1 second

- Batch risk scoring: Minutes to hours acceptable

Scaling Considerations:

- How many predictions per second?

- What’s the acceptable latency?

- What happens if the system goes down?

Deployment Options:

Cloud-Based Inference:

- Serverless (AWS Lambda, Google Cloud Functions): Good for low-volume, sporadic use

- Container-based (Kubernetes): Better for high-volume, consistent load

- Managed ML platforms (SageMaker, Vertex AI): Easiest but potentially expensive

Edge Deployment:

- Running models on local servers or devices

- Required for low-latency applications or data residency requirements

- More complex deployment and maintenance

Hybrid Approaches:

- Cloud for training, on-premise for inference

- Edge for latency-critical predictions, cloud for batch analytics

- Balances performance, cost, and compliance needs

Cost Optimization:

Deep learning infrastructure can get expensive fast. Cost management strategies:

- Right-size compute: Don’t use expensive GPU instances for tasks that run fine on CPUs

- Spot instances: Use interruptible cloud instances for training (60-90% cost reduction)

- Model optimization: Quantization, pruning, and distillation reduce inference costs

- Batch processing: Group predictions to maximize GPU utilization

- Auto-scaling: Scale infrastructure up/down based on demand

Regulatory Approval Pathways (FDA, CE marking)

In the US, AI systems intended to diagnose, treat, or prevent disease are generally regulated as medical devices and need FDA clearance or approval before they can be marketed. In Europe, they need CE marking under the Medical Device Regulation (MDR).

FDA Software as a Medical Device (SaMD) Framework:

The FDA categorizes AI/ML-enabled medical devices by risk:

Class I (Low Risk):

- Minimal potential for harm

- Example: Administrative automation tools

- Pathway: Most are exempt from premarket review

Class II (Moderate Risk):

- Could cause temporary or reversible harm

- Example: Decision support tools, diagnostic aids

- Pathway: 510(k) clearance (demonstrate substantial equivalence to existing device)

Class III (High Risk):

- Could cause serious injury or death

- Example: Autonomous diagnostic systems, treatment planning

- Pathway: Premarket Approval (PMA) requiring clinical trials

The Predetermined Change Control Plan:

Traditional medical device regulation froze software at approval—any update required new approval. This doesn’t work for AI systems that improve with more data.

The FDA’s solution: Predetermined Change Control Plans allow pre-approved pathways for model updates:

- Define types of changes that are acceptable (e.g., retraining with new data)

- Specify performance monitoring and validation procedures

- Establish thresholds that trigger new FDA review

This enables continuous improvement while maintaining safety oversight.

CE Marking Under MDR:

Europe’s Medical Device Regulation (effective 2021) increased requirements for AI/ML devices:

- Clinical evaluation requirements strengthened

- Post-market surveillance mandatory

- Notified Body involvement for most devices

- Increased documentation requirements

Practical Approval Strategy:

For organizations developing AI healthcare software:

- Early FDA engagement: Pre-submission meetings to discuss regulatory pathway

- Quality management system: Implement ISO 13485 compliant QMS from day one

- Clinical validation: Plan prospective studies early—they take 12-24 months

- Documentation: Maintain detailed records of development, validation, and risk management

- Predicate device research: For 510(k) pathway, identify appropriate predicate devices

- Regulatory expertise: Hire consultants with AI healthcare software regulatory experience

Timeline expectations:

- 510(k) clearance: 6-12 months from submission

- PMA approval: 12-24+ months from submission

- CE marking: 6-18 months depending on class and Notified Body

Budget expectations:

- 510(k): $100,000-$300,000 in regulatory costs

- PMA: $500,000-$2,000,000+

- CE marking: €50,000-€200,000

ROI and Business Case for Deep Learning in Healthcare Software

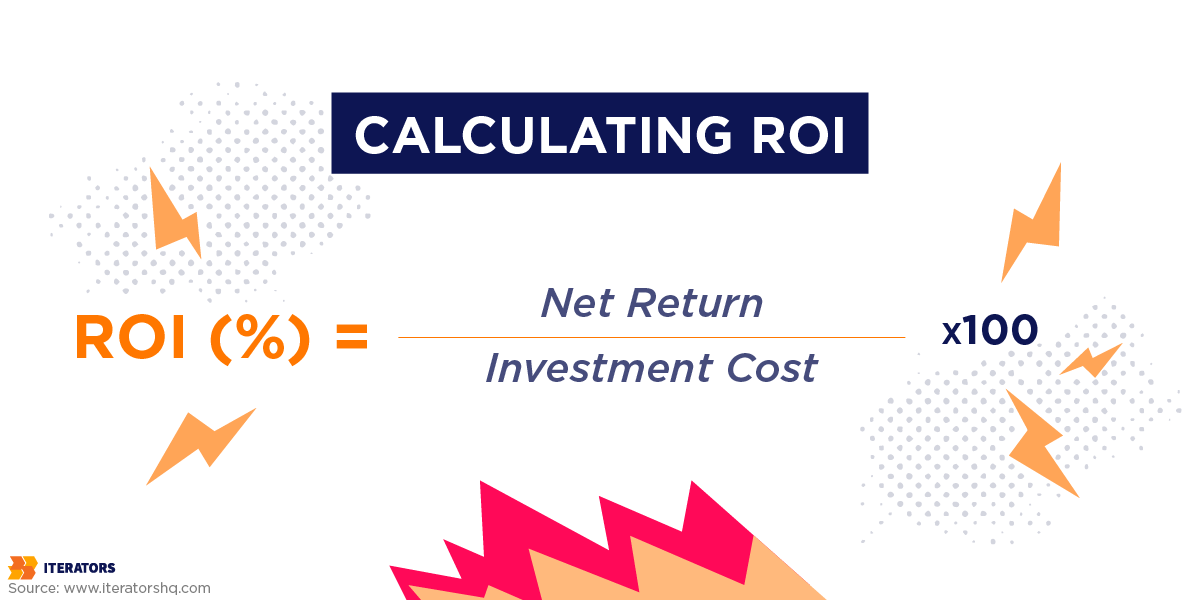

Every CTO evaluating deep learning in healthcare software eventually faces the question: “What’s the ROI?” Here’s how to answer it with data instead of hand-waving.

Cost-Benefit Analysis for Deep Learning Applications in Predictive Healthcare

Healthcare software AI investments fall into three ROI categories:

1. Direct Cost Reduction

These are the easiest to quantify:

Labor Cost Savings:

- Automated coding: $50,000-$150,000 per year per hospital (reduced coding staff needs)

- Clinical documentation: 30-40% reduction in documentation time = $200,000+ per year (physician time value)

- Radiology workflow: 20-30% efficiency improvement = $300,000+ per year (increased throughput without additional radiologists)

Operational Efficiency:

- Reduced length of stay: $2,000-$5,000 per avoided hospital day

- Prevented readmissions: $15,000-$25,000 per avoided readmission

- Optimized resource utilization: 10-20% improvement in OR scheduling, bed management = $500,000-$2,000,000 per year

2. Revenue Enhancement

Harder to quantify but often larger:

Improved Coding Accuracy:

- Capture previously missed diagnoses and procedures

- Typical improvement: 2-5% increase in case mix index

- Revenue impact: $1-3 million per year for a 300-bed hospital

Expanded Service Capacity:

- Radiology AI enabling more scans per day

- Faster diagnosis enabling quicker patient throughput

- Typical impact: 15-25% capacity increase without capital investment

Quality Bonuses:

- Value-based payment programs reward quality metrics

- AI improving quality scores can unlock 1-3% bonus payments

- Impact: $500,000-$2,000,000 per year depending on size

3. Risk Reduction

The hardest to quantify but potentially most valuable:

Malpractice Risk:

- Missed diagnoses are a leading cause of malpractice claims

- AI safety nets reduce miss rate

- Value: Avoiding a single major malpractice case ($500,000-$5,000,000) justifies significant AI investment

Regulatory Penalties:

- Hospital readmission penalties: Up to 3% of Medicare payments

- Hospital-acquired condition penalties: Up to 1% of Medicare payments

- AI helping avoid penalties: $500,000-$3,000,000 per year

Reputational Risk:

- Medical errors damage reputation and patient volume

- AI improving safety protects brand value

- Difficult to quantify but strategically critical

Measurable Outcomes: Clinical and Financial

Clinical Outcomes:

Track these metrics to demonstrate clinical value:

Diagnostic Accuracy:

- Sensitivity/specificity improvements

- Reduction in missed findings

- Earlier disease detection (measured in days/weeks)

Patient Safety:

- Reduction in adverse events

- Medication error reduction

- Hospital-acquired infection reduction

Quality of Care:

- Adherence to evidence-based guidelines

- Preventive care completion rates

- Chronic disease management metrics

Patient Experience:

- Satisfaction scores

- Wait time reduction

- Communication quality improvement

Financial Outcomes:

Track these to demonstrate business value:

Direct Financial Impact:

- Revenue increase from improved coding

- Cost reduction from efficiency gains

- Penalty avoidance from quality improvement

Operational Metrics:

- Length of stay reduction

- Readmission rate reduction

- Resource utilization improvement

Productivity Metrics:

- Clinician time savings

- Administrative burden reduction

- Throughput increase

Strategic Metrics:

- Market share in key service lines

- Physician recruitment and retention

- Patient acquisition and retention

Timeline Expectations from Pilot to Production

Realistic timelines for healthcare software AI projects:

Phase 1: Discovery and Planning (2-3 months)

- Use case identification and prioritization

- Data availability and quality assessment

- Stakeholder alignment and buy-in

- Regulatory pathway determination

- Vendor selection or build/buy decision

Phase 2: Development and Validation (6-12 months)

- Data collection and labeling

- Model development and training

- Internal validation

- External validation (if multi-site)

- Integration planning

Phase 3: Pilot Deployment (3-6 months)

- Limited deployment to small user group

- Workflow integration testing

- User training and feedback collection

- Performance monitoring

- Iteration based on feedback

Phase 4: Regulatory Approval (6-12 months, if required)

- FDA submission preparation

- Clinical validation studies

- Review and approval process

- Post-market surveillance planning

Phase 5: Production Rollout (6-12 months)

- Phased expansion to full user base

- Change management and training at scale

- Performance monitoring and optimization

- Continuous improvement processes

Total Timeline: 18-36 months from initial concept to full production deployment for complex systems requiring regulatory approval, assuming the phases overlap (run strictly back to back, the ranges above add up to 23-45 months). Simpler systems without regulatory requirements can move faster (12-18 months).

When to Expect ROI:

- Quick wins: Operational efficiency improvements often show ROI within 6-12 months of production deployment

- Medium-term: Quality improvement and revenue enhancement typically take 12-24 months to fully materialize

- Long-term: Strategic benefits (market position, reputation) accrue over 2-5 years

Investment Levels:

Typical investment ranges for healthcare software AI projects:

Small-Scale Pilot:

- Scope: Single use case, single department, 50-100 users

- Investment: $100,000-$300,000

- Timeline: 6-12 months

- ROI potential: $200,000-$500,000 annually

Medium-Scale Deployment:

- Scope: Multiple use cases or enterprise-wide single use case

- Investment: $500,000-$2,000,000

- Timeline: 12-24 months

- ROI potential: $1,000,000-$5,000,000 annually

Large-Scale Transformation:

- Scope: Multiple use cases across enterprise, custom development

- Investment: $2,000,000-$10,000,000+

- Timeline: 24-36 months

- ROI potential: $5,000,000-$20,000,000+ annually
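
To compare the three tiers on equal footing, it can help to turn the ranges above into a simple payback period. The sketch below is a rough illustration only: it assumes "ROI potential" means gross annual benefit, ignores ongoing operating costs and discounting, and treats the open-ended "+" figures for the large-scale tier as if they were hard upper bounds. The `Tier` class and its field names are made up for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    invest_low: int    # lower bound of upfront investment, USD
    invest_high: int   # upper bound of upfront investment, USD
    return_low: int    # lower bound of annual benefit, USD
    return_high: int   # upper bound of annual benefit, USD

    def payback_years(self):
        """Return (best case, worst case) simple payback in years."""
        best = self.invest_low / self.return_high    # cheapest build, highest return
        worst = self.invest_high / self.return_low   # priciest build, lowest return
        return best, worst


# Figures copied from the investment ranges listed above.
TIERS = [
    Tier("Small-Scale Pilot", 100_000, 300_000, 200_000, 500_000),
    Tier("Medium-Scale Deployment", 500_000, 2_000_000, 1_000_000, 5_000_000),
    Tier("Large-Scale Transformation", 2_000_000, 10_000_000, 5_000_000, 20_000_000),
]

for tier in TIERS:
    best, worst = tier.payback_years()
    print(f"{tier.name}: payback between {best:.1f} and {worst:.1f} years")
```

Under these assumptions the small pilot pays back in roughly 0.2 to 1.5 years after go-live. The spread between best and worst case is wide in every tier, which is a reminder to build your own estimate from local costs rather than rely on industry ranges.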

Build vs. Buy Economics:

Buying Commercial Solutions:

- Lower upfront cost

- Faster deployment

- Less technical risk

- Ongoing licensing fees

- Less customization

- Vendor lock-in risk

Building Custom Solutions:

- Higher upfront cost

- Longer development time

- More technical risk

- No ongoing licensing fees

- Full customization

- Complete control and IP ownership

Hybrid Approach (Often Optimal):

- Use commercial solutions for commoditized capabilities (e.g., general radiology AI)

- Build custom solutions for differentiating capabilities (e.g., proprietary clinical workflows)

- Balance speed, cost, and strategic control

Choosing the Right Technology Stack for Deep Learning in Healthcare Software

Technology choices have long-term consequences. Choose poorly and you’ll be rewriting everything in 18 months. Choose wisely and you’ll build on a foundation that scales.

Framework Comparison

The deep learning framework landscape has consolidated around two primary options:

TensorFlow (Google)

Strengths:

- Production deployment tools (TensorFlow Serving, TensorFlow Lite)

- Strong mobile/edge deployment support

- Excellent documentation and tutorials

- Large community and ecosystem

- TensorFlow Extended (TFX) for production ML pipelines

Weaknesses:

- Steeper learning curve

- More verbose code

- Less intuitive debugging

- Slower research iteration

Best for:

- Production deployments requiring robust serving infrastructure

- Mobile/edge deployment scenarios

- Organizations with strong DevOps culture

- Projects requiring extensive production monitoring

PyTorch (Facebook/Meta)

Strengths:

- Intuitive, Pythonic API

- Excellent debugging experience

- Faster research iteration

- Strong academic adoption

- Growing production ecosystem (TorchServe)

Weaknesses:

- Historically weaker production tools (improving rapidly)

- Smaller ecosystem than TensorFlow

- Less mature mobile/edge support

Best for:

- Research and experimentation

- Rapid prototyping

- Organizations prioritizing developer experience

- Projects requiring custom architectures

The Reality: Both Work Fine

The TensorFlow vs. PyTorch debate generates more heat than light. Both frameworks are mature, well-supported, and capable of production deployment. Choose based on:

- Your team’s existing expertise

- Specific deployment requirements

- Available third-party tools and models

Other Frameworks:

JAX (Google):

- Strengths: Extremely fast (XLA compilation), excellent for research, composable transformations for automatic differentiation and vectorization

- Weaknesses: Smaller ecosystem, steeper learning curve

- Best for: Cutting-edge research, performance-critical applications

MXNet (Apache):

- Strengths: Efficient, good for distributed training

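- Weaknesses: Smaller community; the project was retired to the Apache Attic in 2023 and is no longer actively developed

- Best for: Maintaining existing MXNet deployments, typically on AWS, which historically backed the framework and offered it as one of several options in SageMaker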

ONNX (Cross-Framework Standard):

- Not a framework but an interchange format

- Allows training in one framework, deploying in another

- Reduces vendor lock-in risk

Cloud vs. On-Premise Considerations

Cloud Platforms:

AWS (Amazon Web Services):

- Strengths: Broadest service portfolio, mature ML tools (SageMaker), strong HIPAA compliance

- Weaknesses: Complex pricing, steeper learning curve

- Best for: Organizations already on AWS or needing a broad range of services

Google Cloud Platform:

- Strengths: Best AI/ML tools (Vertex AI), TensorFlow integration, strong data analytics

- Weaknesses: Smaller healthcare customer base, fewer compliance certifications

- Best for: Organizations prioritizing ML capabilities, TensorFlow users

Microsoft Azure:

- Strengths: Strong healthcare presence, excellent compliance, good enterprise integration

- Weaknesses: ML tools less mature than AWS/GCP

- Best for: Organizations in the Microsoft ecosystem or with strong compliance needs

Cloud Benefits:

- Pay-as-you-go pricing

- Elastic scaling

- Managed services reduce operational burden

- Access to latest hardware (GPUs, TPUs)

- Geographic redundancy

Cloud Challenges:

- Ongoing costs can exceed on-premise over time

- Data transfer costs

- Vendor lock-in

- HIPAA compliance complexity

- Latency for real-time applications

On-Premise Infrastructure:

Benefits:

- Full data control

- No data transfer costs

- Potentially simpler HIPAA compliance (patient data stays on infrastructure you control)

- Predictable costs after initial investment

- No vendor lock-in

Challenges:

- Large upfront capital investment

- Hardware obsolescence (3-5 year refresh cycle)

- Requires specialized IT staff

- Limited scaling flexibility

- Disaster recovery complexity

Hybrid Approaches:

Many organizations adopt hybrid models:

- Cloud for training (elastic compute needs)

- On-premise for inference (data residency, latency)

- Cloud for development, on-premise for production

- Multi-cloud for redundancy and vendor diversity

Integration with Existing EHR/EMR Systems

The technical challenge isn’t building AI models—it’s integrating them into clinical workflows.

Integration Patterns:

1. Standalone Application:

- Separate application clinicians access independently

- Pros: Easier to build, fewer integration dependencies

- Cons: Workflow disruption, low adoption, duplicate data entry

2. EHR-Embedded:

- AI functionality built directly into EHR interface

- Pros: Seamless workflow, high adoption

- Cons: Requires deep EHR integration, vendor cooperation

3. Clinical Decision Support (CDS) Hooks:

- Standardized integration points in EHR workflows

- Pros: Standards-based, works across EHR vendors

- Cons: Limited EHR support, complex implementation

4. API Integration:

- AI system exposes APIs consumed by EHR

- Pros: Flexible, supports multiple integration points

- Cons: Requires EHR customization, ongoing maintenance
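
To make pattern 3 more concrete: in CDS Hooks, the EHR calls your service at a defined workflow point and the service answers with JSON "cards" that the EHR renders for the clinician. The sketch below builds such a response body. The `summary`, `indicator`, `source`, and `detail` fields follow the CDS Hooks card format as we understand it; the function name, the 0.7 threshold, and the sepsis model label are hypothetical choices for this example.

```python
import json


def build_sepsis_card(risk, threshold=0.7):
    """Return a CDS Hooks style response body with zero or one card."""
    if risk < threshold:
        # Nothing worth interrupting the clinician for: return no cards.
        return {"cards": []}
    card = {
        "summary": f"Elevated sepsis risk ({risk:.0%})",   # short headline
        "indicator": "warning",                            # info, warning, or critical
        "source": {"label": "Sepsis Risk Model (hypothetical)"},
        "detail": "Model score exceeds the configured alert threshold.",
    }
    return {"cards": [card]}


if __name__ == "__main__":
    print(json.dumps(build_sepsis_card(0.82), indent=2))
```

Returning an empty card list below the threshold matters as much as the alert itself, because every unnecessary card adds to alert fatigue.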

EHR Vendor Considerations:

Epic:

- Market leader (30%+ of US hospitals)

- App Orchard marketplace for third-party apps (since succeeded by Epic's Showroom listings)

- FHIR APIs available

- Deep integration requires vendor relationship

Cerner (now Oracle Health):

- Second largest (25%+ market share)

- Code marketplace for third-party apps

- FHIR APIs available

- Integration varies by Cerner version

Smaller Vendors:

- Often more flexible for custom integration

- May lack robust APIs

- Integration quality varies widely

Integration Strategy:

- Start with read-only integration: Pull data from EHR for AI processing

- Validate data quality: Ensure data is extracted correctly and completely

- Build standalone UI initially: Prove value before deep EHR integration

- Add write-back capabilities: Push AI insights back into EHR

- Pursue embedded integration: Work toward seamless EHR experience
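
The first two steps of this strategy, a read-only pull followed by a data quality check, might look roughly like the sketch below when the EHR exposes FHIR APIs. It assumes a FHIR R4 server and uses the standard `Observation` search parameters `patient`, `category`, and `_count`. The base URL, patient ID, and helper names are placeholders, and the sample bundle is hand-written rather than real EHR output. No network call is made; the code only builds the request URL and parses a response.

```python
from urllib.parse import urlencode


def observation_search_url(base_url, patient_id, category="vital-signs", count=50):
    """Build a read-only FHIR search URL for a patient's observations."""
    query = urlencode({"patient": patient_id, "category": category, "_count": count})
    return f"{base_url.rstrip('/')}/Observation?{query}"


def extract_values(bundle):
    """Flatten a FHIR Bundle into rows, skipping entries without a numeric value."""
    rows = []
    for entry in bundle.get("entry", []):
        resource = entry.get("resource", {})
        if resource.get("resourceType") != "Observation":
            continue
        quantity = resource.get("valueQuantity")
        if quantity is None:
            continue  # basic quality gate: no usable measurement
        codings = resource.get("code", {}).get("coding", [])
        rows.append({
            "code": codings[0].get("code") if codings else None,
            "value": quantity.get("value"),
            "unit": quantity.get("unit"),
            "time": resource.get("effectiveDateTime"),
        })
    return rows


# Hand-written sample response: one heart-rate reading (LOINC 8867-4)
# and one observation that is missing its value.
SAMPLE_BUNDLE = {
    "resourceType": "Bundle",
    "entry": [
        {"resource": {
            "resourceType": "Observation",
            "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4"}]},
            "valueQuantity": {"value": 112, "unit": "beats/minute"},
            "effectiveDateTime": "2024-01-15T08:30:00Z",
        }},
        {"resource": {"resourceType": "Observation", "code": {"coding": []}}},
    ],
}

print(observation_search_url("https://ehr.example.org/fhir", "123"))
print(extract_values(SAMPLE_BUNDLE))
```

Counting how many entries the quality gate drops is a cheap early signal of whether the extracted data is complete enough to train or score on.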

Technical Requirements:

- Authentication: Single sign-on (SAML, OAuth)

- Authorization: Role-based access matching EHR permissions

- Data synchronization: Real-time or near-real-time data updates

- Audit logging: Track all data access and AI predictions

- Error handling: Graceful degradation when the EHR is unavailable
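
Two of these requirements, audit logging and graceful degradation, tend to be implemented together. Here is a minimal sketch, assuming the caller supplies a `fetch_record` function that raises `ConnectionError` or `TimeoutError` when the EHR is down and a `model` callable that returns a numeric score. All names, including the `ai_audit` logger, are illustrative rather than part of any EHR vendor's API.

```python
import logging
from datetime import datetime, timezone

audit_log = logging.getLogger("ai_audit")  # hypothetical logger name


def score_with_fallback(patient_id, user_id, fetch_record, model):
    """Return a risk score, or None if the EHR cannot be reached."""
    stamp = datetime.now(timezone.utc).isoformat()
    try:
        record = fetch_record(patient_id)
    except (ConnectionError, TimeoutError):
        # Degrade gracefully: log the failure and let the UI show
        # "score unavailable" instead of blocking the clinician.
        audit_log.warning("%s user=%s patient=%s outcome=ehr_unavailable",
                          stamp, user_id, patient_id)
        return None
    score = model(record)
    # Every prediction is logged with who asked, for whom, and the result.
    audit_log.info("%s user=%s patient=%s outcome=scored score=%.3f",
                   stamp, user_id, patient_id, score)
    return score
```

In a real deployment the audit log would go to tamper-evident storage, and the user identity would come from the single sign-on session rather than a function argument.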

Getting Started: A Roadmap for Healthcare Organizations

Theory is useless without a practical path forward. Here’s exactly how to begin.

Step 1: Identify High-Value Use Cases

Not all deep learning use cases in healthcare software are created equal. Start with use cases that have:

Clear Clinical Impact:

- Addresses a significant patient safety or quality issue

- Improves outcomes for high-volume conditions

- Reduces preventable adverse events

Strong Economic Case:

- Measurable cost savings or revenue enhancement

- ROI achievable within 12-24 months

- Aligns with organizational strategic priorities

Technical Feasibility:

- Required data is available and accessible

- Problem is well-suited to AI approaches

- Regulatory pathway is clear

Organizational Readiness:

- Clinical champions willing to drive adoption

- Leadership support and resources

- Workflow integration is practical

Use Case Prioritization Framework:

| Criterion | Weight | Basis for the 1-5 score |
|---|---|---|
| Clinical impact | 25% | Lives saved/improved |
| Economic value | 25% | ROI potential |
| Technical feasibility | 20% | Data availability, problem fit |
| Adoption likelihood | 15% | Workflow integration, user buy-in |
| Strategic alignment | 15% | Organizational priorities |

Score each potential use case from 1 to 5 on every criterion, multiply each score by its weight, sum the results, and prioritize the highest-scoring opportunities.
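
As a quick illustration of the arithmetic, the sketch below applies the weights from the table to two candidate use cases. The weights come from the framework above; the individual 1-5 scores are invented for the example and do not reflect any real assessment.

```python
# Weights from the prioritization table above (they sum to 1.0).
WEIGHTS = {
    "clinical_impact": 0.25,
    "economic_value": 0.25,
    "technical_feasibility": 0.20,
    "adoption_likelihood": 0.15,
    "strategic_alignment": 0.15,
}


def weighted_score(scores):
    """Combine per-criterion scores (1-5) into one weighted total."""
    missing = WEIGHTS.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing criteria: {sorted(missing)}")
    for name in WEIGHTS:
        if not 1 <= scores[name] <= 5:
            raise ValueError(f"{name} must be between 1 and 5")
    return sum(WEIGHTS[name] * scores[name] for name in WEIGHTS)


# Hypothetical scores, for illustration only.
candidates = {
    "Sepsis prediction": {
        "clinical_impact": 5, "economic_value": 4, "technical_feasibility": 3,
        "adoption_likelihood": 4, "strategic_alignment": 5,
    },
    "Readmission risk": {
        "clinical_impact": 3, "economic_value": 4, "technical_feasibility": 4,
        "adoption_likelihood": 3, "strategic_alignment": 4,
    },
}

ranked = sorted(candidates, key=lambda name: weighted_score(candidates[name]),
                reverse=True)
for name in ranked:
    print(f"{name}: {weighted_score(candidates[name]):.2f}")
```

With these made-up inputs, sepsis prediction scores 4.20 and readmission risk 3.60. The totals matter less than the conversation they force about why each criterion received the score it did.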

Common High-Value Starting Points:

- Sepsis prediction (high mortality, clear intervention, strong ROI)

- Readmission risk (financial penalties, measurable outcomes)

- Radiology workflow optimization (efficiency gains, quality improvement)

- Clinical documentation (physician time savings, revenue capture)

- Medication safety (adverse event reduction, liability mitigation)

Step 2: Assess Data Readiness

Deep learning systems in healthcare software are only as good as the data they learn from. Assess:

Data Availability:

- Does the required data exist in electronic form?

- Is it accessible for AI development?

- What’s the data volume? (Need thousands to millions of examples)

Data Quality:

- Completeness: What percentage of records have all required fields?

- Accuracy: How often is data incorrect or outdated?

- Consistency: Is data coded consistently over time?
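
The completeness question is the easiest of the three to quantify early. A minimal sketch, assuming records arrive as Python dictionaries and that a field counts as missing when it is absent, `None`, or an empty string; the field names and sample rows are hypothetical.

```python
def completeness(records, required_fields):
    """Percentage of records in which every required field is populated."""
    if not records:
        return 0.0
    complete = sum(
        1 for record in records
        if all(record.get(field) not in (None, "") for field in required_fields)
    )
    return 100.0 * complete / len(records)


# Hypothetical extract: four encounters, one missing a lab value.
sample = [
    {"age": 64, "heart_rate": 88, "lactate": 1.1},
    {"age": 71, "heart_rate": 102, "lactate": 2.4},
    {"age": 55, "heart_rate": 95, "lactate": None},
    {"age": 49, "heart_rate": 76, "lactate": 0.9},
]

print(f"{completeness(sample, ['age', 'heart_rate', 'lactate']):.0f}% complete")
```

Running the same check per field, rather than per record, usually shows that a handful of fields account for most of the gaps.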

Data Labeling:

- Is ground truth available? (Diagnoses confirmed, outcomes documented)

- Who can provide expert labels if needed?

- What’s the cost and timeline for labeling?

Data Governance:

- Who owns the data?

- What are the legal/regulatory constraints?

- What approvals are needed for AI use?

Data Readiness Assessment:

Level 1 (Not Ready):

- Data mostly on paper or in incompatible systems

- Significant quality issues

- No clear data governance

- Action: Invest in data infrastructure before AI

Level 2 (Partially Ready):

- Data electronic but fragmented

- Quality issues manageable

- Basic governance in place

- Action: Data integration project before AI

Level 3 (Ready):

- Data in accessible electronic systems

- Acceptable quality

- Clear governance and approvals

- Action: Proceed with AI development

Level 4 (Highly Ready):

- Integrated data warehouse

- High quality, well-documented

- Mature governance processes

- Action: Multiple AI initiatives feasible

Step 3: Build vs. Buy vs. Partner Decision

Build (Custom Development):

When to Build:

- Use case is unique to your organization

- Differentiating capability you want to own

- Existing solutions don’t meet requirements

- You have a strong technical team

Pros:

- Full customization

- IP ownership

- No vendor lock-in

- Complete control

Cons:

- Highest cost and risk

- Longest timeline

- Requires specialized expertise

- Ongoing maintenance burden

Buy (Commercial Solution):

When to Buy:

- Commoditized use case (many vendors offer solutions)