Hackers are already using artificial intelligence (AI) to advance their attacks, so why shouldn’t cybersecurity fight back with AI? In 2024 alone, organizations experienced a 30 percent year-over-year increase in weekly cyberattacks. The growing number of AI-driven threats shows that cybersecurity experts must adopt new solutions, especially generative AI, because conventional methods cannot match the speed and adaptability of these attacks.

Generative AI in cybersecurity improves threat detection, automates responses, and predicts future attacks. It also boosts efficiency by reducing manual work and improving response times.

Although AI has immense potential in cybersecurity, its application introduces major concerns, especially with data privacy and ethical use. To use this technology efficiently, organizations must understand how generative AI works and how to integrate it into existing security frameworks. This guide covers everything you need to know before using AI for cybersecurity.

What is Generative AI

Generative AI (GenAI) is a type of artificial intelligence that produces new content, including text, images, code, and audio, based on patterns it has learned from existing data. This type of AI is known for its ability to mimic human creativity, but it’s only as creative as its training allows.

Popular GenAI models, including GPT (for text generation) and Stable Diffusion (for images), depend on complex machine learning methods such as transformer architectures and diffusion models. These models produce realistic text, conversations, and images. They are also used to generate synthetic data for training and testing other systems.

In cybersecurity, for example, GenAI can help by generating simulated attack scenarios to test the strength of a network’s defenses. It can also create synthetic data to train security systems, helping them detect vulnerabilities or suspicious patterns before they become threats.

How Does it Work

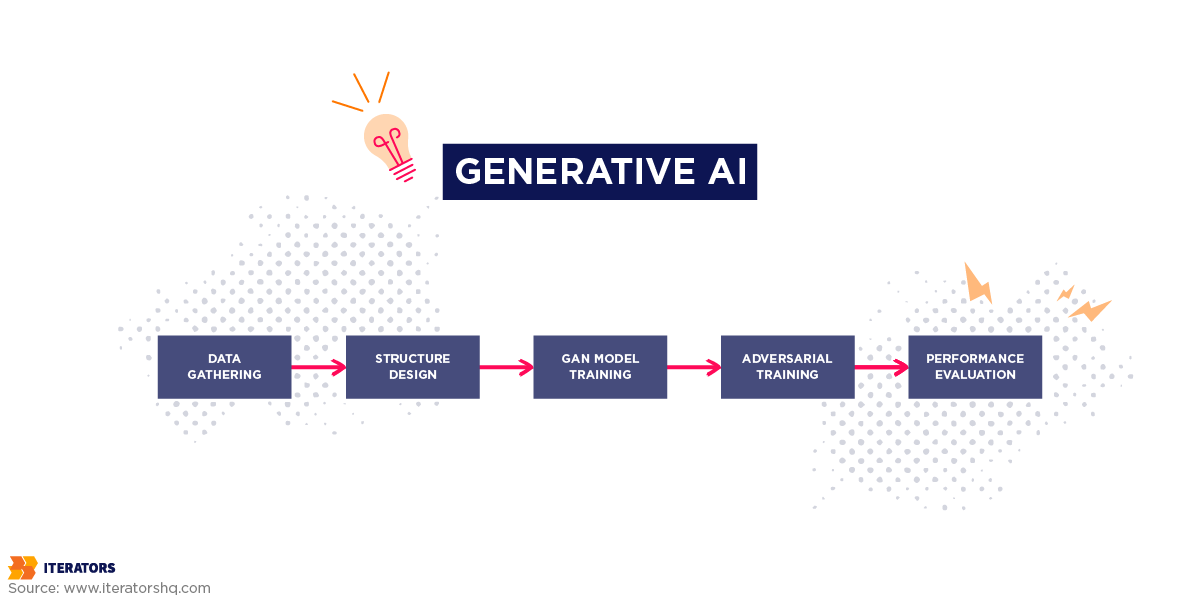

Generative AI works like a sophisticated artist who studies extensive datasets to produce new creations. Here’s how it works in simple terms:

1. Data Collection – The AI is fed large datasets, such as books, images, or code, to learn from real-world examples.

2. AI Model Training – The AI modifies its internal parameters throughout training sessions to detect patterns. It improves content quality by learning through trial and error.

3. Identifying Patterns – The AI looks for relationships within the data, such as how words connect in sentences or how colors blend in images. Different models specialize in different tasks:

- GANs (Generative Adversarial Networks) improve generated outputs through a two-part system where one component generates data and another assesses its quality.

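- VAEs (Variational Autoencoders) compress data into a compact internal representation and decode new samples from it, so the generated data preserves the important variations of the original.

- Transformers use attention to weigh the context of every word in a sequence and predict the next one, which is what makes their text fluent.

- RNNs (Recurrent Neural Networks) process sequential data, such as music or time series, one step at a time.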

4. Data Generation – After learning, the AI creates new content by combining the patterns it has discovered.

5. Iterative Training and Feedback – The AI system continually gets better through a self-checking process where it analyzes its output against real data and implements necessary changes.

For example, in cybersecurity, transformers can be trained to generate phishing email variations for defensive testing. GANs might simulate malware behaviors, allowing analysts to test detection tools. VAEs can recreate anonymized network traffic patterns to safely train intrusion detection systems.
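To make the learn-then-generate loop above more concrete, here is a minimal sketch in Python. It is a toy bigram model, not a transformer, GAN, or VAE: it records which word follows which in a tiny made-up corpus (pattern identification) and then samples new sequences from those observations (data generation). The corpus, function names, and seed are illustrative assumptions.

```python
import random
from collections import defaultdict

def train_bigrams(sentences):
    """Record, for every word, the words observed right after it."""
    followers = defaultdict(list)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return followers

def generate(followers, start, max_words=8, seed=0):
    """Sample a new word sequence by repeatedly picking an observed follower."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    words = [start]
    # Stop at the length limit or when the last word was never followed by anything.
    while len(words) < max_words and followers.get(words[-1]):
        words.append(rng.choice(followers[words[-1]]))
    return " ".join(words)

# Hypothetical training data: subject lines a defender might use in phishing drills.
corpus = [
    "verify your account now",
    "verify your password today",
    "reset your password now",
]
model = train_bigrams(corpus)
sample = generate(model, "verify")
print(sample)
```

Real generative models replace the lookup table with millions or billions of learned parameters, but the shape of the process is the same: learn patterns from examples, then sample new content from them.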

How is Generative AI Impacting Cybersecurity

So, how can generative AI be used in cybersecurity? Here are some of its core applications:

1. Threat Detection & Anomaly Identification

Generative AI boosts cybersecurity capabilities through real-time detection of threats and anomalies. Traditional security systems depend on pre-established rules that are unable to detect emerging or changing threats. However, generative AI learns from network activity and detects unusual patterns that might suggest a cyberattack.

The system identifies potential security breaches by analyzing extensive datasets and separating suspicious activity from normal behavior patterns. For example, the AI system can raise an alert whenever it detects an employee logging in from an unknown location or device. Research has shown that, on average, organizations that use security AI identify and contain data breaches 108 days faster than organizations without AI tools.
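The login example can be sketched in a few lines of Python. This is a deliberately simple baseline, not a generative model: it remembers which locations and devices each user has logged in from and reports anything unseen. The class name, field names, and sample values are assumptions made for illustration; a production system would learn a statistical profile rather than an exact-match history.

```python
from collections import defaultdict

class LoginBaseline:
    """Remembers which (country, device) pairs each user has logged in from."""

    def __init__(self):
        self._seen = defaultdict(set)

    def learn(self, user, country, device_id):
        """Add one confirmed-legitimate login to the user's history."""
        self._seen[user].add((country, device_id))

    def check(self, user, country, device_id):
        """Return the reasons a login looks unusual (empty list = normal)."""
        history = self._seen.get(user, set())
        reasons = []
        if country not in {c for c, _ in history}:
            reasons.append("unknown location")
        if device_id not in {d for _, d in history}:
            reasons.append("unknown device")
        return reasons

baseline = LoginBaseline()
baseline.learn("alice", "PL", "laptop-01")
baseline.learn("alice", "PL", "phone-07")

print(baseline.check("alice", "PL", "laptop-01"))  # location and device both seen before
print(baseline.check("alice", "BR", "tablet-99"))  # neither seen before
```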

2. Automated Incident Response

Traditional security teams struggle to respond quickly because they experience alert fatigue from too many security alerts. AI-driven systems evaluate threats and calculate their severity to trigger immediate responses without human input. For example, if AI identifies an unauthorized access attempt, it can respond by blocking the user from the system, revoking their credentials, or isolating any compromised systems.
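One way to picture severity-based response is as a small scoring function followed by a lookup. The sketch below assumes a hypothetical `Alert` record, a made-up scoring formula (model confidence times asset criticality), and illustrative thresholds; none of these come from a specific product.

```python
from dataclasses import dataclass

@dataclass
class Alert:
    kind: str                # e.g. "unauthorized_access"
    asset_criticality: int   # 1 (low) to 5 (critical), assigned by the organization
    model_confidence: float  # 0.0 to 1.0, reported by the detection model

def severity(alert: Alert) -> float:
    """Combine model confidence and asset criticality into a 0-5 score."""
    return alert.model_confidence * alert.asset_criticality

def choose_response(alert: Alert) -> str:
    """Map the severity score to a response tier (thresholds are illustrative)."""
    score = severity(alert)
    if score >= 4.0:
        return "isolate_host_and_revoke_credentials"
    if score >= 2.0:
        return "block_user_session"
    return "log_for_analyst_review"

print(choose_response(Alert("unauthorized_access", 5, 0.9)))
print(choose_response(Alert("unauthorized_access", 1, 0.5)))
```

Keeping the lowest tier as a review queue rather than an automatic action leaves room for analysts to catch false positives before anyone is locked out.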

Walmart introduced AI Detect and Respond (AIDR), an AI-powered anomaly detection system for real-time business and system health monitoring. Throughout its three-month validation period, the AIDR system delivered predictions from more than 3,000 models to different operational units. It detected 63 percent of major incidents and decreased detection time by over seven minutes.

3. Phishing & Fraud Detection

Cybercriminals are getting smarter. They’re using AI to create ultra-realistic phishing emails and deepfake impersonations. This results in automated scams that can fool even the most cautious users. So, how do you fight AI with AI?

Organizations are rolling out AI-powered detection systems. These tools analyze massive datasets of real and fraudulent messages, learning to spot the subtle differences between the two. They can pick up on red flags that humans might miss, whether it’s odd writing styles, strange language patterns, or sender details that don’t quite add up.
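To show what learning from real and fraudulent messages can look like, here is a minimal sketch of a naive Bayes text classifier written with only the Python standard library. It is far simpler than the models used in commercial tools, and the four training messages are invented for illustration, but the principle is the same: count which words appear in each class, then score new messages against those counts.

```python
import math
from collections import Counter

def train(messages):
    """messages: list of (text, label) pairs with label 'phish' or 'legit'."""
    word_counts = {"phish": Counter(), "legit": Counter()}
    label_counts = Counter()
    for text, label in messages:
        label_counts[label] += 1
        word_counts[label].update(text.lower().split())
    return word_counts, label_counts

def classify(text, word_counts, label_counts):
    """Return the label with the higher log-probability (Laplace smoothing)."""
    vocab = set(word_counts["phish"]) | set(word_counts["legit"])
    scores = {}
    for label in ("phish", "legit"):
        total_words = sum(word_counts[label].values())
        score = math.log(label_counts[label] / sum(label_counts.values()))
        for word in text.lower().split():
            # +1 smoothing keeps unseen words from zeroing out the probability.
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)

# Hypothetical training set; real systems learn from very large labeled corpora.
training = [
    ("urgent verify your account now", "phish"),
    ("your account is suspended click here", "phish"),
    ("meeting notes attached for review", "legit"),
    ("lunch at noon tomorrow", "legit"),
]
word_counts, label_counts = train(training)
print(classify("verify your account urgent", word_counts, label_counts))
print(classify("notes for the meeting tomorrow", word_counts, label_counts))
```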

4. Malware Analysis & Prevention

Malware is evolving, but so is AI. Generative AI now plays a huge role in spotting malicious code and predicting cyber threats before they strike. AI trains on massive datasets of known malware and safe software. Over time, it learns to tell the difference. Even better, it can detect brand-new malware variants just by recognizing patterns similar to existing threats. This means better security and fewer surprises.

Take iVerify, for example. They’ve launched a Mobile Threat Hunting tool that uses machine learning to sniff out spyware on iOS and Android. By analyzing shutdown, diagnostic, and crash logs, it has uncovered serious infections—like Pegasus spyware—targeting political activists and officials.

5. Security Code Generation & Testing

Writing secure code is hard. But it’s even harder to catch security flaws before they become disasters. Generative AI plays a crucial role in software development here: it scans codebases, flags vulnerabilities, and even suggests fixes, all before deployment. It’s like having a built-in security expert reviewing every line of code.

Think of common threats like buffer overflows or injection attacks: AI can spot these weak spots early. Even better, it can recommend safer coding practices to keep hackers out. AI can also generate synthetic datasets and simulate attack scenarios, stress-testing software before it ever goes live. These AI tools are so efficient that 76 percent of developers use them to test their code.

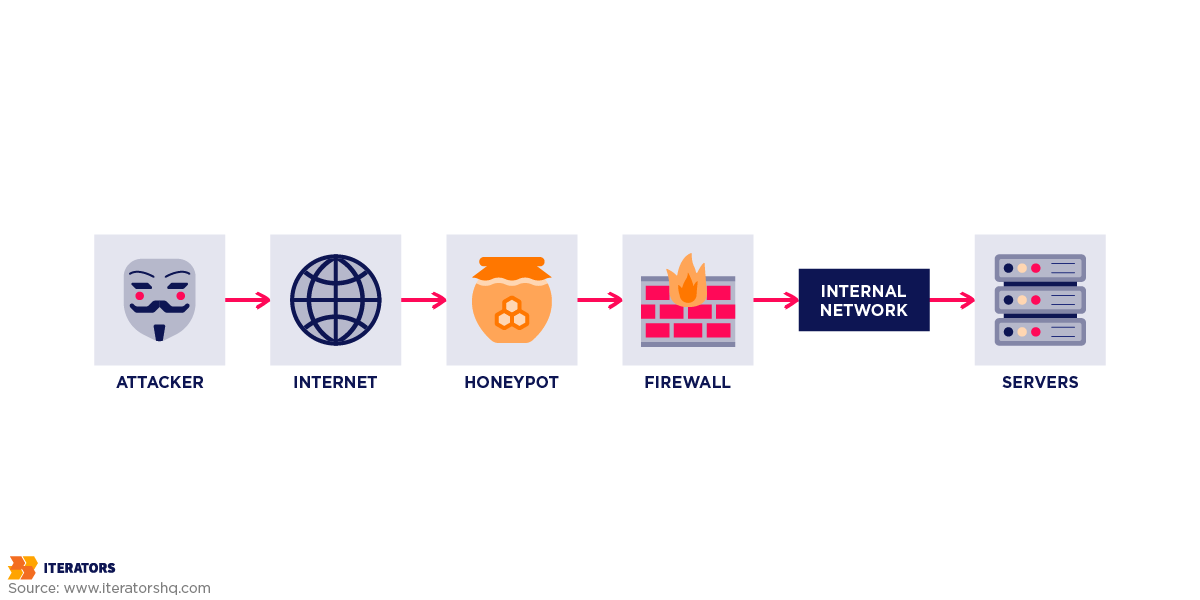

6. Deception Technology (AI-Generated Honeypots)

Ever heard the saying, “Fight fire with fire”? That’s exactly what AI is doing with deception technology. Honeypots—decoy systems designed to lure cyber attackers—aren’t new. But traditional ones often lack realism, making them easy for hackers to spot. Fortunately, AI-generated honeypots closely mimic real systems, tricking even the most advanced adversaries.

Two key examples of this technology in action are LLM Agent Honeypot by Apart Research and DECEIVE by Splunk. The LLM Agent Honeypot is a simulated vulnerable server with embedded prompt injections, baiting attackers into revealing their tactics. DECEIVE, on the other hand, is a next-level honeypot that uses AI to dynamically simulate entire Linux servers via SSH. This keeps cybercriminals guessing. With this approach, hackers take the bait, security teams gain intel, and real systems stay protected.

7. Cyber Threat Intelligence Analysis

Generative AI significantly enhances cyber threat intelligence analysis by automating the collection, processing, and interpretation of vast amounts of threat data. Traditional methods drown in the flood of data from dark web forums, social media, and threat databases. AI, on the other hand, cuts through the noise—spotting patterns, flagging risks, and predicting the next big attack.

One key example of AI-driven cyber threat intelligence analysis is IBM X-Force. This platform scans global threat feeds, tracks malicious actors, and forecasts attack trends. It helps organizations identify threats before they strike and block suspicious activity before it causes damage.

8. Deepfake Detection

Seeing is believing, right? Not anymore. Deepfakes are blurring the line between real and fake, making misinformation easier to spread. Luckily, AI isn’t just the problem; it’s also the solution. Deepfake detection tools analyze subtle inconsistencies in videos, audio, and images. Cutting-edge models like vision transformers, CNNs, and ensemble methods scan for telltale signs of fakery, such as unnatural eye movements, strange lighting, or odd speech patterns. These red flags help AI separate real content from manipulated media.

Several startups are also making strides in this field. DuckDuckGoose, for example, is an AI-powered detection tool specializing in face analysis and pattern recognition. It achieves a 96 percent accuracy rate in identifying manipulated videos.

What are the Benefits of Using Generative AI in Cybersecurity

The amazing capabilities of AI-driven cybersecurity translate into the following benefits:

1. Efficiency

Cybersecurity is a race against time. Every second counts. Thankfully, generative AI automates routine tasks—data analysis, threat detection, and anomaly tracking—so security teams can focus on the bigger picture instead of drowning in alerts.

And the best part is that AI doesn’t just work faster, it works smarter. It scans massive datasets in seconds, pinpointing threats that would take humans hours to detect. One study found that after adopting generative AI in cybersecurity, companies cut security incident resolution time by 30.13 percent.

2. Scalability

Cyber threats are multiplying at breakneck speed, and traditional security cannot keep up. Generative AI, however, scales effortlessly. Whether it’s a startup or a global enterprise, AI can handle massive workloads without breaking a sweat. It delivers strong, consistent protection, no matter how fast an organization expands.

Consider this: Amazon faced 750 million cyber threat attempts daily, a sevenfold increase in just a year. AI is fueling the surge in attacks—but ironically, it’s also the only way to fight back. Amazon, like many others, is turning to AI-powered threat detection to keep up.

3. Cost Efficiency

Cybersecurity isn’t cheap—but breaches are even more expensive. That’s why Generative AI is a game-changer. By automating threat detection and response, it cuts down on manual labor and operational costs. No more endless hours spent analyzing logs or chasing false alarms. AI handles the heavy lifting, so security teams can focus on what really matters.

According to IBM’s Cost of Data Breach Report, companies that use AI-driven security measures save an average of $1.76 million per breach. That’s a 39.3 percent cost reduction compared to organizations that rely on traditional methods.

4. Continuous Learning

Hackers get smarter every day. AI makes sure your defenses do, too. Unlike static security systems, generative AI keeps learning from new data. It analyzes emerging attack patterns, incorporates fresh threat data, and sharpens its predictions, helping defenders stay ahead of cyber adversaries. Think of it as a security system that never sleeps and never stops improving.

How to Implement AI in Cybersecurity

The integration of AI and cybersecurity requires a strategic approach. Here are some steps to follow:

1. Assess Organizational Cybersecurity Needs

Before integrating AI, you need a clear picture of what you’re protecting and where your vulnerabilities lie. Start by cataloging all critical assets—hardware, software, databases, and sensitive data. This step ensures your security measures are targeted and effective.

Next, analyze your current cybersecurity protocols to make sure your existing defenses keep up with modern threats. Look at your technologies, processes, and personnel to identify gaps that AI can fill. Some common weak points include slow threat detection, high false positives, and inefficient incident response.

But AI adoption shouldn’t be random—it must align with your business objectives and risk tolerance. A well-integrated AI system should support your organization’s resilience strategy, not disrupt it. To structure your approach, consider frameworks like the MITRE AI Maturity Model. This tool helps organizations assess their readiness for AI adoption and provides a step-by-step guide to successful implementation.

And don’t forget to involve key stakeholders—IT teams, security analysts, compliance officers, and executives. Their input ensures that AI adoption addresses real security needs while staying aligned with overall business goals. Once you have a solid assessment, the next step is choosing the right AI tools and integrating them into your cybersecurity framework.

2. Select Appropriate AI Technologies

Now that you know what your organization needs, it’s time to choose the right AI tools to tackle your cybersecurity challenges. But with so many options out there, where do you start? Your first decision should be about where your AI security solution lives. Depending on security needs, you may choose between cloud-based solutions, on-premise solutions, or hybrid models.

Cloud-based AI solutions are scalable, cost-effective, and continuously updated by vendors like Darktrace, CrowdStrike, and Palo Alto Networks. They are ideal if you want automated updates and minimal infrastructure management. On-premise options offer greater control over sensitive data, making them a go-to for compliance-heavy industries like finance and healthcare. Companies like Iterators specialize in implementing these solutions. Hybrid models give the best of both worlds—the flexibility of cloud solutions and the resilience of on-prem tools.

Before committing, check if your existing security stack plays well with AI-powered platforms. Look at:

- Firewalls

- SIEM tools (Security Information and Event Management)

- Endpoint Detection and Response (EDR) systems

- Cloud security solutions

Can they integrate seamlessly with AI via APIs or direct connections? If not, you may face costly workarounds later.

Not all AI security tools are created equal, so do your homework. Look for proven success by checking case studies, reviews, and independent testing results. Ask for live demos so you get a feel for how well the tool can detect threats and tell real threats from false positives.

Consider the total cost of each option. This includes implementation, maintenance, and training expenses, not just the sticker price. And make sure your preferred vendor offers plans with more capacity in case of future expansion. Privacy regulations are important too, so make sure each tool complies with GDPR, CCPA, HIPAA, or frameworks specific to your industry.

3. Integrate AI Solutions into Existing Cybersecurity Infrastructure

So, you’ve chosen your AI-powered security tools. Now what? System integrations are where the real magic happens, but only if done right. A sloppy rollout can lead to false positives, performance issues, or worse, gaps in security. AI-driven cybersecurity tools need to work in sync with your existing defenses. That means setting up secure, encrypted data flows between AI systems and network logs, endpoint activities, SIEM tools, and more.

Never roll out AI tools across your entire organization on Day 1. Instead, run pilot tests in a sandbox environment first. While you run tests:

- Monitor AI’s ability to detect threats: Is it spotting real dangers or flagging everything as suspicious?

- Evaluate its impact on network performance: It shouldn’t slow things down.

- Analyze false positive rates: If it generates too many alerts, you might need to tweak detection parameters.

- Assess security team workflows: Does AI make their job easier or add unnecessary noise?

With smart integration, AI becomes a force multiplier, enhancing your defenses, not complicating them. Once you’re confident the AI is accurate and efficient, scale it up step by step, starting with the most critical functionalities.
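Tweaking detection parameters during a pilot often comes down to choosing an alert threshold. The sketch below assumes the AI tool outputs an anomaly score between 0 and 1 for each event and that analysts have labeled the pilot events as benign (0) or real threats (1). The scores, labels, and the 25 percent false positive budget are all invented for illustration.

```python
def false_positive_rate(scores, labels, threshold):
    """Share of benign events (label 0) whose score reaches the threshold."""
    benign = [s for s, y in zip(scores, labels) if y == 0]
    return sum(s >= threshold for s in benign) / len(benign)

def detection_rate(scores, labels, threshold):
    """Share of real threats (label 1) whose score reaches the threshold."""
    threats = [s for s, y in zip(scores, labels) if y == 1]
    return sum(s >= threshold for s in threats) / len(threats)

def pick_threshold(scores, labels, max_fpr):
    """Lowest candidate threshold that keeps false positives within budget."""
    for threshold in sorted(set(scores)):
        if false_positive_rate(scores, labels, threshold) <= max_fpr:
            return threshold
    return None  # no candidate meets the budget

# Hypothetical pilot data: anomaly scores with analyst-confirmed labels.
scores = [0.10, 0.20, 0.35, 0.40, 0.55, 0.70, 0.80, 0.95]
labels = [0, 0, 0, 1, 0, 1, 1, 1]

threshold = pick_threshold(scores, labels, max_fpr=0.25)
print(threshold, detection_rate(scores, labels, threshold))
```

Choosing the lowest threshold that fits the false positive budget keeps the detection rate as high as that budget allows, since raising the threshold can only cause more threats to be missed.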

4. Monitor and Evaluate AI Performance Continuously

So, your AI is up and running, but it doesn’t end there. You still have to keep it sharp because cyber threats evolve constantly, and if your AI isn’t learning, it’s falling behind. But how do you know if your AI is actually improving security? By tracking key performance indicators (KPIs) such as:

- Threat Detection Accuracy – Percentage of actual threats correctly identified (also known as the detection rate or recall).

- False Positive and False Negative Rates – AI should minimize false alerts while ensuring real threats are not overlooked.

- Response Time – How quickly AI detects and responds to incidents.

- Scalability and System Impact – Evaluate whether AI slows down operations or consumes excessive resources.

If any of these metrics look off, it’s time for adjustments.
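The detection KPIs above can be computed directly from four counts: true positives (threats caught), false positives (benign events flagged), true negatives (benign events ignored), and false negatives (threats missed). Here is a minimal sketch; the counts are made up, and precision is added as an extra metric that the list above does not mention.

```python
def detection_kpis(tp, fp, tn, fn):
    """Compute common detection KPIs from confusion-matrix counts."""
    return {
        "detection_rate": tp / (tp + fn),       # actual threats caught (recall)
        "false_positive_rate": fp / (fp + tn),  # benign events wrongly flagged
        "false_negative_rate": fn / (tp + fn),  # actual threats missed
        "precision": tp / (tp + fp),            # alerts that were real threats
    }

# Hypothetical month of data: 50 real threats among 1,000 events.
kpis = detection_kpis(tp=45, fp=30, tn=920, fn=5)
for name, value in kpis.items():
    print(f"{name}: {value:.1%}")
```

Tracking these numbers over time, rather than as a one-off snapshot, is what reveals whether retraining is helping or whether the model is drifting.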

AI learns from new data, but it requires manual fine-tuning to remain effective. Regularly update models with new threat intelligence, retrain algorithms to reduce bias, and adapt AI’s detection rules based on emerging cyberattack patterns. Regular audits will help you assess AI performance against security benchmarks. Conduct penetration testing by simulating attacks to see how AI responds. Organize red team vs. blue team exercises to challenge AI’s ability to detect sophisticated threats.

5. Provide Ongoing Training for Security Personnel

AI can detect threats, automate responses, and analyze massive data sets in seconds. But it still needs human oversight. Security teams must know how to work with AI, validate its findings, and step in when things get tricky. That’s why ongoing employee training is crucial.

Not everyone on your team needs the same training. Security analysts may need to learn how to interpret AI-generated alerts and distinguish between real threats and false positives. Incident response teams may require hands-on experience using AI-driven containment and mitigation strategies. During these training sessions, security analysts and IT teams can leverage team development software to collaborate. Take advantage of cyber range platforms like Cyberbit, Immersive Labs, and RangeForce. They provide interactive, real-world simulations that help teams refine their response skills in high-stakes scenarios.

Cyber threats evolve daily, and so should your team’s knowledge. Regular training sessions keep them ahead of attackers. Organizing quarterly AI and cybersecurity workshops ensures that security professionals stay updated on the latest threats and AI advancements.

Threat intelligence briefings also help employees recognize new AI-driven attack strategies before they become widespread. Finally, consider partnering with AI vendors for expert-led workshops that provide deeper insights into AI’s evolving role in cybersecurity.

AI Cybersecurity Implementation Roadmap

Here’s a high-level, five-phase roadmap to guide your AI-cybersecurity journey. Feel free to adjust timelines to fit your organization’s pace and priorities:

| Phase | Timeline | Key Actions | Deliverables |
| --- | --- | --- | --- |
| 1. Assess Needs | Weeks 1–2 | Catalog critical assets (hardware, software, data). Audit existing defenses: tech, processes, people. Run gap analysis against modern threats. Stakeholder workshop: align on business goals & risk appetite. Use MITRE AI Maturity Model to benchmark readiness. | Asset inventory & vulnerability report; AI-readiness scorecard; stakeholder alignment deck |
| 2. Select Technologies | Weeks 3–5 | Decide on deployment model: cloud, on-prem, or hybrid. Shortlist vendors (Darktrace, CrowdStrike, etc.). Check API compatibility with firewalls, SIEM, EDR, cloud security. Demo tools; evaluate detection accuracy vs. false positives. Total cost analysis (implementation + maintenance + training). Compliance check (GDPR, CCPA, HIPAA). | Vendor comparison matrix; proof-of-concept plan & budget; compliance checklist |
| 3. Integrate & Pilot | Weeks 6–9 | Stand up sandbox environments. Wire up secure, encrypted data feeds (logs, endpoints, SIEM). Run controlled pilot: monitor detection rates, performance impact, alert volume. Tweak thresholds & model parameters. Gather feedback from security teams on workflows & noise. | Pilot test report (KPIs: detection accuracy, false positives, latency); integration playbook |
| 4. Scale & Tune | Weeks 10–12 | Roll out AI modules in production, starting with highest-risk assets. Automate incident response playbooks. Schedule periodic retraining with fresh threat intel. Audit with red-team exercises & penetration tests. | Production rollout plan; automated response scripts; audit & pen-test findings |
| 5. Train & Evolve | Ongoing (Q2+) | Quarterly workshops on AI-driven threat hunting. Hands-on drills via Cyberbit/Immersive Labs. Threat-intelligence briefings for new attack patterns. Partner vendor-led deep dives. | Training curriculum & calendar; skills-assessment reports; updated AI model versions |

Challenges of Generative AI in Cybersecurity (and How to Overcome Them)

While Generative AI enhances cybersecurity defenses, it also introduces new challenges that can undermine its effectiveness:

1. Adversarial Attacks on AI Models

AI-driven security systems can be deceived through adversarial attacks—subtle manipulations that trick AI into making incorrect decisions. Cybercriminals can modify network traffic patterns, tweak malware signatures, or inject poisoned data into training sets to fool AI models. Some attackers even generate adversarial malware samples designed to mimic legitimate software, bypassing AI-based detection.

To counter these risks, organizations must employ adversarial training techniques, where AI models are exposed to manipulated inputs during training to improve their resilience. Anomaly detection systems can help spot irregularities that AI might overlook. Using ensemble models—where multiple AI systems work together—also makes it more difficult for attackers to bypass detection, as deceiving one model doesn’t necessarily fool them all.
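The ensemble idea can be sketched as a simple majority vote. In the example below, three hypothetical detectors each look at a different feature of a file, and a sample is flagged only when most of them agree. The feature names, thresholds, and sample values are assumptions chosen for illustration; real ensembles combine trained models rather than hand-written rules.

```python
def majority_vote(detectors, sample):
    """Flag the sample as malicious only if most detectors agree."""
    votes = [detector(sample) for detector in detectors]
    return sum(votes) > len(votes) / 2

# Three hypothetical detectors, each relying on a different signal.
def high_entropy(sample):
    return sample["entropy"] > 7.0

def unsigned_binary(sample):
    return not sample["signed"]

def suspicious_imports(sample):
    return len(sample["imports"] & {"VirtualAllocEx", "WriteProcessMemory"}) > 0

detectors = [high_entropy, unsigned_binary, suspicious_imports]

# The attacker lowered entropy to slip past the first detector,
# but the other two signals still fire.
evasive_sample = {"entropy": 5.1, "signed": False, "imports": {"WriteProcessMemory"}}
benign_sample = {"entropy": 4.0, "signed": True, "imports": {"CreateFileW"}}

print(majority_vote(detectors, evasive_sample))
print(majority_vote(detectors, benign_sample))
```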

2. False Positives and False Negatives

AI-driven cybersecurity systems rely on pattern recognition, but they are prone to false positives (incorrectly identifying safe activity as a threat) and false negatives (failing to detect actual threats). Too many false positives overwhelm security teams, causing alert fatigue and slowing response times. Meanwhile, false negatives allow genuine threats to slip through undetected, increasing the risk of data breaches. Generative AI models, if not properly fine-tuned, may misclassify emerging threats or struggle with zero-day attacks due to insufficient training data.

To reduce these risks, organizations must continuously refine AI models and adopt multi-layered security strategies. A hybrid approach—combining AI-driven detection with traditional rule-based systems—improves accuracy. Human oversight is also crucial. Security analysts should review AI-generated alerts before executing automated responses. Implementing feedback loops where AI learns from past misclassifications helps models become more precise over time.

3. High Implementation and Maintenance Costs

Deploying generative AI in cybersecurity requires significant financial and technical investment. The initial setup involves purchasing high-performance computing infrastructure, acquiring specialized AI software, and integrating AI with existing security tools. Additionally, AI models require continuous monitoring, fine-tuning, and retraining to remain effective against evolving cyber threats. Maintenance costs include hiring skilled personnel, conducting regular updates, and addressing security vulnerabilities. Smaller organizations may struggle with these expenses, which leaves AI-driven security more accessible to large enterprises.

Organizations can manage AI implementation costs by adopting cloud-based AI security solutions, which eliminate the need for expensive on-premise infrastructure. They should also try open-source AI frameworks, such as TensorFlow and PyTorch. These provide cost-effective alternatives for developing AI cybersecurity tools. Additionally, companies can implement incremental AI adoption, starting with smaller pilot projects before scaling up.

4. Need for Continuous Model Training and Updates

AI-driven cybersecurity systems are only as effective as the data they learn from. That’s why it’s essential to use real-world data in development projects to enhance model accuracy. Cyber threats evolve rapidly, requiring AI models to be continuously updated to detect new attack patterns, malware variants, and phishing tactics. Without regular retraining, AI models become outdated, leading to reduced detection accuracy and a higher risk of cyberattacks.

Retraining AI involves ingesting fresh threat intelligence, refining detection algorithms, and validating performance against emerging threats. This process, however, demands significant computational power, skilled personnel, and real-time access to threat data. To streamline model updates, organizations can automate retraining using live threat intelligence feeds. Leveraging federated learning can also improve AI models while preserving data privacy, allowing them to train on decentralized data sources without exposing sensitive information.
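Federated learning is easier to picture with a small numeric example. In the sketch below, two sites each compute a model update on their own private data, and only the updated weights, never the raw logs, are sent back and averaged. This follows the general federated averaging idea in its simplest form; the gradients, sample counts, and two-parameter model are invented for illustration.

```python
def local_update(weights, gradient, learning_rate=0.1):
    """One gradient step computed on a site's private data."""
    return [w - learning_rate * g for w, g in zip(weights, gradient)]

def federated_average(site_weights, site_sizes):
    """Average model weights across sites, weighted by local sample count."""
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]

global_weights = [0.0, 0.0]

# Hypothetical gradients each site computed locally; raw data never leaves the site.
site_gradients = {"site_a": [1.0, -2.0], "site_b": [3.0, 0.0]}
site_sizes = {"site_a": 100, "site_b": 300}

updated = [local_update(global_weights, g) for g in site_gradients.values()]
global_weights = federated_average(updated, list(site_sizes.values()))
print(global_weights)
```

Weighting by sample count means a site with more data has proportionally more influence on the shared model, which is a common default but not the only option.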

5. Computational and Resource Demands

Generative AI is a beast when it comes to computing power. It chews through massive amounts of cybersecurity data, demanding hefty storage, energy, and high-speed processing. Running AI-driven threat detection and response systems involves real-time analysis of network traffic and endpoint logs, which can be resource-intensive.

To keep things running smoothly, organizations need high-performance GPUs, scalable cloud infrastructure, and AI frameworks built for speed. Companies with limited IT muscle should lean on cloud-based AI services from AWS, Google Cloud, or Microsoft Azure. These platforms scale on demand, with no need for expensive on-prem hardware. And if efficiency is the goal, model compression techniques like pruning and quantization can cut down computational loads with little loss of accuracy.
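Quantization is the easier of the two compression techniques to show in a few lines. The sketch below stores each weight as an 8-bit signed integer plus one shared scale factor, then measures how much precision is lost when converting back. It is a simplified form of symmetric quantization, assumes at least one non-zero weight, and uses made-up weight values.

```python
def quantize(weights, bits=8):
    """Map float weights to signed integers using one shared scale factor."""
    max_int = 2 ** (bits - 1) - 1  # 127 for 8 bits
    scale = max(abs(w) for w in weights) / max_int
    return [round(w / scale) for w in weights], scale

def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]

weights = [0.82, -1.27, 0.004, 0.31]  # hypothetical model weights
quantized, scale = quantize(weights)
restored = dequantize(quantized, scale)

# The rounding error per weight is at most half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(quantized, max_error)
```

Each weight now fits in one byte instead of four or eight, and the smallest weight here rounds to zero, which is the kind of small accuracy trade-off that compression involves.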

6. Integration Challenges with Legacy Systems

Legacy systems and AI don’t always play nice. Many organizations still rely on outdated cybersecurity infrastructure—systems built long before AI was even on the radar. The problem with these old setups is they often lack APIs, real-time data processing, or cloud connectivity, making AI integration a serious headache. Upgrading isn’t just a simple patch job. It takes a major investment in software modernization, middleware solutions, and data migration. And here’s another issue—AI thrives on structured, high-quality data. However, older security tools might store information in incompatible formats or fail to provide real-time insights.

So, what’s the fix? Organizations should use API gateways and middleware solutions to act as a bridge between old and new tech. Successful AI implementation requires a structured project management approach. Gradual adoption is key. Start with modular implementations. Take small, manageable steps instead of ripping everything out at once. That way, AI can be phased in without throwing security operations into chaos.
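As a small illustration of the middleware idea, the sketch below converts one line from a legacy log into a structured JSON event that a modern AI pipeline could ingest. The pipe-delimited layout, the field names, and the assumption that timestamps are in UTC are all hypothetical; a real adapter would be written against the legacy system's actual format.

```python
import json
from datetime import datetime, timezone

def normalize_legacy_event(line):
    """Convert one pipe-delimited legacy log line into a JSON event string."""
    timestamp, host, action, source_ip = (field.strip() for field in line.split("|"))
    # Assumed legacy format: day/month/year, 24-hour clock, UTC.
    parsed = datetime.strptime(timestamp, "%d/%m/%Y %H:%M:%S").replace(tzinfo=timezone.utc)
    return json.dumps({
        "timestamp": parsed.isoformat(),
        "host": host,
        "action": action.lower(),
        "source_ip": source_ip,
    })

legacy_line = "03/02/2025 14:05:09 | fw-legacy-01 | DENY | 203.0.113.7"
print(normalize_legacy_event(legacy_line))
```

A thin adapter like this lets the old system keep running unchanged while the AI tooling receives consistent, machine-readable events.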

7. Ethical Concerns and Potential Misuse

Generative AI in cybersecurity isn’t just a tool; it’s a double-edged sword. While it strengthens defenses, it also raises ethical red flags. These include privacy risks and bias in threat detection. And let’s not forget cybercriminals, who are already using AI to craft deepfakes and phishing emails, automate hacking, and generate malware that’s harder to spot. AI cybersecurity tools could also expose sensitive data, leading to compliance nightmares. Even worse, biased AI models might misidentify threats, unfairly flagging certain users or behaviors.

So, what’s the solution? Organizations need strict ethical guidelines, transparent AI decision-making, and adversarial testing to catch flaws before attackers do. But they can’t do it alone. Cybersecurity pros and regulators must team up to build AI governance frameworks—ones that ensure AI is used responsibly, not recklessly.

What is the Future of Generative AI in Cybersecurity

What’s next for generative AI in cybersecurity? A lot. The field is evolving fast, and here are some of the biggest game-changers:

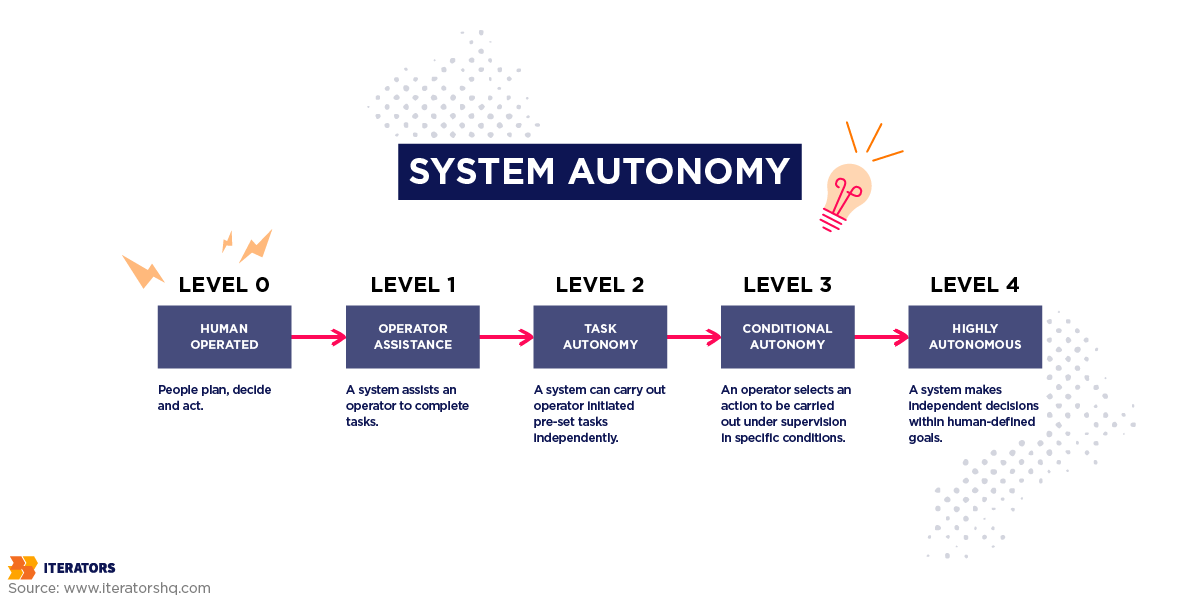

1. Autonomous Cyber Defense Systems

Right now, most AI security tools still need human oversight. But in the future AI-driven platforms will detect, analyze, and neutralize threats in real-time—without waiting for a security team to step in. These systems won’t just react; they’ll predict vulnerabilities before attackers exploit them. As they continuously learn from simulated cyberattacks and real-world incidents, they’ll refine their threat detection models and even share intelligence across global AI networks—creating a unified, proactive defense.

Some companies are already heading in this direction. Darktrace, a leading cybersecurity firm, uses AI-powered threat detection and autonomous response capabilities. Darktrace’s Enterprise Immune System leverages machine learning to monitor networks, detect anomalies, and respond to security threats without human intervention.

2. AI vs. AI Cyber Battles

The future of cybersecurity won’t just be about stopping human hackers—it’ll be AI vs. AI. Hackers are already using generative AI to craft adaptive malware, deepfake phishing attacks, and AI-driven social engineering schemes. And it’s only getting worse. Projections suggest that by 2025, we could see 1.31 million AI-powered cyberattack complaints, racking up potential losses of $18.6 billion.

Fortunately, cybersecurity platforms aren’t sitting idly by. Organizations are rolling out cyber AI detection systems that will predict, detect, and neutralize threats before they cause damage. Think of it as an endless chess match where both sides—attackers and defenders—are constantly upgrading their algorithms to outsmart each other. Cybersecurity companies will simulate adversarial AI attacks to test and strengthen their systems. Additionally, AI will generate deceptive environments, tricking malicious AI into revealing tactics.

3. Quantum AI for Cryptography

Cybersecurity is about to get a quantum-powered upgrade. Right now, widely used public-key encryption relies on mathematical problems, such as factoring very large numbers, that are impractical for classical computers to solve. Once quantum computers hit their stride, they could tear through that encryption like a hot knife through butter. In response, quantum AI is expected to usher in ultra-secure cryptography that even the most advanced supercomputers can’t crack.

Quantum cryptography will use principles like quantum entanglement and superposition to protect data. Generative AI will take it even further. Besides encrypting data, it will predict and counter vulnerabilities in real-time. IBM is already making moves with its Quantum Safe Cryptography project, while Google and Microsoft are pouring resources into post-quantum cryptography research.

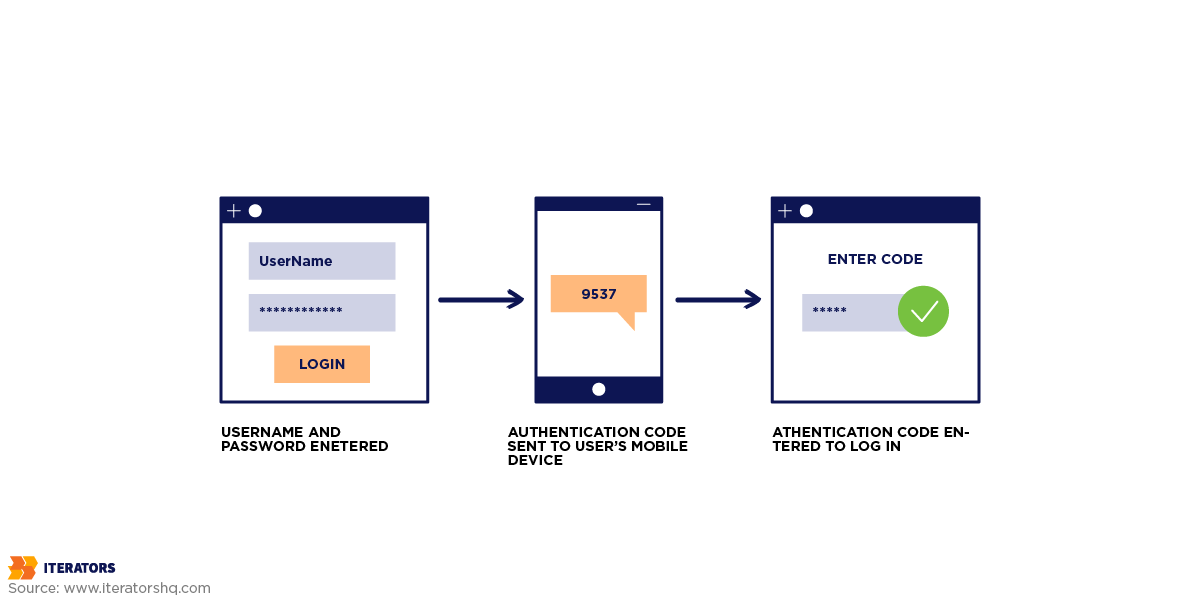

4. Personalized Security Protocols

Imagine a security system that knows you—your typing rhythm, the way you move your mouse, even how you hold your phone. Instead of relying on traditional web authentication, future cybersecurity will adapt in real-time to individual users. Personalized security protocols will analyze behavioral patterns to detect anomalies instantly.

One company leading the charge is BehavioSec. Their behavioral biometrics technology tracks subtle user interactions—like keystroke timing and touchscreen behavior—to build a unique security profile. If an unauthorized user tries to log in, even with the right credentials, they’ll stick out like a sore thumb.
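Here is a rough sketch of how keystroke timing could feed a behavioral check. It builds a profile from the average gap between keystrokes in past sessions and flags a new session whose average is many standard deviations away. This is a generic z-score test, not BehavioSec's method, and the timing values and threshold are invented for illustration.

```python
from statistics import mean, stdev

def build_profile(enrollment_sessions):
    """Summarize a user's typical gap between keystrokes (milliseconds)."""
    session_means = [mean(session) for session in enrollment_sessions]
    return {"mean": mean(session_means), "stdev": stdev(session_means)}

def is_anomalous(profile, session, z_threshold=3.0):
    """Flag a session whose average gap is far from the user's profile."""
    z_score = abs(mean(session) - profile["mean"]) / profile["stdev"]
    return z_score > z_threshold

# Hypothetical enrollment data: inter-key gaps from three past sessions.
enrollment = [[110, 120, 115, 125], [118, 122, 130, 114], [105, 119, 121, 127]]
profile = build_profile(enrollment)

print(is_anomalous(profile, [112, 121, 118, 124]))  # rhythm close to the profile
print(is_anomalous(profile, [60, 65, 70, 62]))      # much faster typist
```

Production systems combine many more signals, such as key hold times, mouse movement, and touch pressure, but the underlying comparison of new behavior against a learned profile is similar.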

5. AI-Augmented Security Operations Centers (SOCs)

Security Operations Centers (SOCs) are getting an AI upgrade. Traditional SOCs rely on human analysts to sift through endless alerts, often drowning in false positives. AI-augmented SOCs change the game. These smart systems use machine learning to automate threat detection, analyze massive data streams, and prioritize real threats, cutting out the noise.

Take Cisco’s AI-native SOC as an example. It doesn’t just react to threats; it learns from them. Using behavioral analytics, it identifies anomalies in real time, adapting to new attack tactics before they escalate. What does this mean for security teams? Less burnout, faster responses, and stronger defenses.

6. More Regulations and Standards

Cyber threats are evolving, and so are the laws to fight them. Governments worldwide are stepping up. Expect stricter regulations, tougher compliance requirements, and more transparency mandates. Organizations will need to keep up—or face hefty penalties.

One major shift is cross-border regulations. Companies handling consumer data will need airtight security to comply with international laws. Post-quantum cryptography (PQC) standards will also emerge to stay ahead of quantum-powered cyber threats. Industries won’t wait for governments to act. They’ll create sector-specific security standards to protect critical infrastructure and supply chains.

Strengthen Your Cybersecurity with AI: Partner with Iterators HQ Today

Generative AI in cybersecurity is a game changer. It’s making security smarter, faster, and more adaptive. But here’s the catch: AI-driven cybersecurity isn’t just about benefits. It comes with challenges—false positives, integration issues, and the ever-present risk of AI-powered cyberattacks. That’s why businesses need to implement AI thoughtfully and responsibly.

If you want to stay ahead of emerging threats, partner with a reliable tech service provider like Iterators. Our experts help organizations build and integrate AI-powered security solutions tailored to their needs. From threat detection to real-time response, we help you fortify your digital infrastructure with cutting-edge solutions. Contact us today and ensure your business is protected by the best in AI-driven cybersecurity.